2 Introducing Differential Geometry

Gravity is geometry. To fully understand this statement, we will need more sophisticated tools and language to describe curved space and, ultimately, curved spacetime. This is the mathematical subject of differential geometry and will be introduced in this section and the next. Armed with these new tools, we will then return to the subject of gravity in Section 4.

Our discussion of differential geometry is not particularly rigorous. We will not prove many big theorems. Furthermore, a number of the statements that we make can be checked straightforwardly but we will often omit this. We will, however, be careful about building up the mathematical structure of curved spaces in the right logical order. As we proceed, we will come across a number of mathematical objects that can live on curved spaces. Many of these are familiar – like vectors, or differential operators – but we’ll see them appear in somewhat unfamiliar guises. The main purpose of this section is to understand what kind of objects can live on curved spaces, and the relationships between them. This will prove useful for both general relativity and other areas of physics.

Moreover, there is a wonderful rigidity to the language of differential geometry. It sometimes feels that any equation that you’re allowed to write down within this rigid structure is more likely than not to be true! This rigidity is going to be of enormous help when we return to discuss theories of gravity in Section 4.

2.1 Manifolds

The stage on which our story will play out is a mathematical object called a manifold. We will give a precise definition below, but for now you should think of a manifold as a curved, $n$-dimensional space. If you zoom in to any patch, the manifold looks like $\mathbb{R}^n$. But, viewed more globally, the manifold may have interesting curvature or topology.

To begin with, our manifold will have very little structure. For example, initially there will be no way to measure distances between points. But as we proceed, we will describe the various kinds of mathematical objects that can be associated to a manifold, and each one will allow us to do more and more things. It will be a surprisingly long time before we can measure distances between points! (Not until Section 3.)

You have met many manifolds in your education to date, even if you didn’t call them by name. Some simple examples in mathematics include Euclidean space $\mathbb{R}^n$, the sphere $\mathbf{S}^n$, and the torus $\mathbf{T}^n$. Some simple examples in physics include the configuration space and phase space that we use in classical mechanics and the state space of thermodynamics. As we progress, we will see how familiar ideas in these subjects can be expressed in a more formal language. Ultimately our goal is to explain how spacetime is a manifold and to understand the structures that live on it.

2.1.1 Topological Spaces

Even before we get to a manifold, there is some work to do in order to define the underlying object. What follows is the mathematical equivalent of reading a biography about an interesting person and having to spend the first 20 pages wading through a description of what their grandparents did for a living. This backstory will not be particularly useful for our needs and we include it here only for completeness. We’ll keep it down to one page.

Our backstory is called a topological space. Roughly speaking, this is a space in which each point can be viewed as living in a neighbourhood of other points, in a manner that allows us to define concepts such as continuity and convergence.

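Definition: A topological space $M$ is a set of points, endowed with a topology $\mathcal{T}$. This is a collection of open subsets $\{\mathcal{O}_\alpha \subset M\}$ which obey:

i) Both the set $M$ and the empty set $\emptyset$ are open subsets: $M \in \mathcal{T}$ and $\emptyset \in \mathcal{T}$.

ii) The intersection of a finite number of open sets is also an open set. So if $\mathcal{O}_1 \in \mathcal{T}$ and $\mathcal{O}_2 \in \mathcal{T}$ then $\mathcal{O}_1 \cap \mathcal{O}_2 \in \mathcal{T}$.

iii) The union of any number (possibly infinite) of open sets is also an open set. So if $\mathcal{O}_\gamma \in \mathcal{T}$ then $\bigcup_\gamma \mathcal{O}_\gamma \in \mathcal{T}$.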

Given a point $p \in M$, we say that $\mathcal{O} \in \mathcal{T}$ is a neighbourhood of $p$ if $p \in \mathcal{O}$. This concept leads us to our final requirement: we require that any two distinct points have neighbourhoods that do not overlap. In other words, for any $p, q \in M$ with $p \neq q$, there exist $\mathcal{O}_1, \mathcal{O}_2 \in \mathcal{T}$ such that $p \in \mathcal{O}_1$ and $q \in \mathcal{O}_2$ and $\mathcal{O}_1 \cap \mathcal{O}_2 = \emptyset$. Topological spaces which obey this criterion are called Hausdorff. It is like a magic ward to protect us against bad things happening.

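An example of a good Hausdorff space is the real line, $M = \mathbb{R}$, with $\mathcal{T}$ consisting of all open intervals $(a, b)$, with $a < b \in \mathbb{R}$, and their unions. An example of a non-Hausdorff space is any $M$ with at least two points and $\mathcal{T} = \{M, \emptyset\}$.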
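On a finite set the axioms can be checked mechanically. Finite sets are a poor guide to manifolds, since the only Hausdorff topology on a finite set is the one in which every subset is open. Still, they make the definitions concrete. The following minimal Python sketch tests axioms i) to iii) and the Hausdorff condition on the non-Hausdorff example above and on two others. The function names are our own and are not taken from any library.

```python
from itertools import combinations


def is_topology(M, T):
    """Check axioms i) to iii) for a collection T of subsets of a finite set M.

    For a finite collection, closure under pairwise unions and intersections
    implies closure under all unions and intersections, so pairs suffice.
    """
    T = {frozenset(U) for U in T}
    if frozenset(M) not in T or frozenset() not in T:
        return False
    return all((U | V) in T and (U & V) in T for U, V in combinations(T, 2))


def is_hausdorff(M, T):
    """Any two distinct points have disjoint open neighbourhoods."""
    opens = [frozenset(U) for U in T]
    return all(
        any(p in U and q in V and not (U & V) for U in opens for V in opens)
        for p, q in combinations(sorted(M), 2)
    )


M2 = {"a", "b"}
indiscrete = [set(), M2]                 # T = {M, empty set}
discrete = [set(), {"a"}, {"b"}, M2]     # every subset is open

M3 = {"a", "b", "c"}
broken = [set(), {"a"}, {"b"}, M3]       # {a} U {b} = {a, b} is missing

print(is_topology(M2, indiscrete), is_hausdorff(M2, indiscrete))  # True False
print(is_topology(M2, discrete), is_hausdorff(M2, discrete))      # True True
print(is_topology(M3, broken))                                    # False
```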

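Definition: One further definition (it won’t be our last). A homeomorphism between topological spaces $(M, \mathcal{T})$ and $(\tilde{M}, \tilde{\mathcal{T}})$ is a map $f: M \to \tilde{M}$ which is

i) Injective (or one-to-one): for $p \neq q$, $f(p) \neq f(q)$.

ii) Surjective (or onto): $f(M) = \tilde{M}$, which means that for each $\tilde{p} \in \tilde{M}$ there exists a $p \in M$ such that $f(p) = \tilde{p}$.

Functions which are both injective and surjective are said to be bijective. This ensures that they have an inverse $f^{-1}: \tilde{M} \to M$.

iii) Bicontinuous. This means that both the function $f$ and its inverse $f^{-1}$ are continuous. To define a notion of continuity, we need to use the topology. We say that $f$ is continuous if, for all $\tilde{\mathcal{O}} \in \tilde{\mathcal{T}}$, $f^{-1}(\tilde{\mathcal{O}}) \in \mathcal{T}$.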
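Continuity and bicontinuity can be checked in the same finite setting. This sketch again uses hypothetical helper names and stores maps as Python dicts. In it, the identity map on a two-point set is a continuous bijection from the topology in which every subset is open to the topology $\{M, \emptyset\}$. Its inverse is not continuous, however, so the identity is not a homeomorphism. This is why condition iii) asks for continuity in both directions.

```python
def preimage(f, U):
    """f^{-1}(U) for a map between finite sets stored as a dict {p: f(p)}."""
    return frozenset(p for p, fp in f.items() if fp in U)


def is_continuous(f, T_source, T_target):
    """f is continuous if the preimage of every open set is open."""
    opens = {frozenset(U) for U in T_source}
    return all(preimage(f, U) in opens for U in T_target)


def is_homeomorphism(f, T_source, T_target, target_points):
    """Injective, surjective, and continuous in both directions."""
    images = list(f.values())
    if len(set(images)) != len(images) or set(images) != set(target_points):
        return False
    f_inv = {fp: p for p, fp in f.items()}
    return (is_continuous(f, T_source, T_target)
            and is_continuous(f_inv, T_target, T_source))


M = {"a", "b"}
discrete = [set(), {"a"}, {"b"}, M]
indiscrete = [set(), M]
identity = {"a": "a", "b": "b"}

print(is_continuous(identity, discrete, indiscrete))        # True
print(is_continuous(identity, indiscrete, discrete))        # False
print(is_homeomorphism(identity, discrete, indiscrete, M))  # False
print(is_homeomorphism(identity, discrete, discrete, M))    # True
```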

A donut that morphs continuously into a coffee mug and back is often used to illustrate the idea of topology. If you want to be fancy, you can say that a donut is homeomorphic to a coffee mug.

2.1.2 Differentiable Manifolds

We now come to our main character: an $n$-dimensional manifold is a space which, locally, looks like $\mathbb{R}^n$. Globally, the manifold may be more interesting than $\mathbb{R}^n$, but the idea is that we can patch together these local descriptions to get an understanding for the entire space.

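Definition: An $n$-dimensional differentiable manifold is a Hausdorff topological space $M$ such that

i) $M$ is locally homeomorphic to $\mathbb{R}^n$. This means that for each $p \in M$, there is an open set $\mathcal{O}$ such that $p \in \mathcal{O}$ and a homeomorphism $\phi: \mathcal{O} \to U$ with $U$ an open subset of $\mathbb{R}^n$.

ii) Take two open subsets $\mathcal{O}_\alpha$ and $\mathcal{O}_\beta$ that overlap, so that $\mathcal{O}_\alpha \cap \mathcal{O}_\beta \neq \emptyset$. We require that the corresponding maps $\phi_\alpha: \mathcal{O}_\alpha \to U_\alpha$ and $\phi_\beta: \mathcal{O}_\beta \to U_\beta$ are compatible, meaning that the map $\phi_\alpha \circ \phi_\beta^{-1}: \phi_\beta(\mathcal{O}_\alpha \cap \mathcal{O}_\beta) \to \phi_\alpha(\mathcal{O}_\alpha \cap \mathcal{O}_\beta)$ is smooth (also known as infinitely differentiable or $C^\infty$), as is its inverse. This is depicted in Figure 14.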

The maps $\phi_\alpha$ are called charts and the collection of charts is called an atlas. You should think of each chart as providing a coordinate system to label the region $\mathcal{O}_\alpha$ of $M$. The coordinate associated to $p \in \mathcal{O}_\alpha$ is

$$\phi_\alpha(p) = \big(x^1(p), \ldots, x^n(p)\big)$$

We write the coordinate in shorthand as simply $x^\mu(p)$, with $\mu = 1, \ldots, n$. Note that we use a superscript rather than a subscript: this simple choice of notation will prove useful as we go along.

If a point $p$ is a member of more than one subset $\mathcal{O}_\alpha$ then it may have a number of different coordinates associated to it. There’s nothing to be nervous about here: it’s entirely analogous to labelling a point using either Euclidean coordinates or polar coordinates.

The maps $\phi_\alpha \circ \phi_\beta^{-1}$ take us between different coordinate systems and are called transition functions. The compatibility condition is there to ensure that there is no inconsistency between these different coordinate systems.

Any manifold admits many different atlases. In particular, nothing stops us from adding another chart to the atlas, provided that it is compatible with all the others. Two atlases are said to be compatible if every chart in one is compatible with every chart in the other. In this case, we say that the two atlases define the same differentiable structure on the manifold.

Examples

Here are a few simple examples of differentiable manifolds:

• $\mathbb{R}^n$: this looks locally like $\mathbb{R}^n$ because it is $\mathbb{R}^n$. You only need a single chart with the usual Euclidean coordinates. Similarly, any open subset of $\mathbb{R}^n$ is a manifold.

Figure 15: Two charts on a circle. The figures are subtly different! On the left, point $q$ is removed and $\theta_1 \in (0, 2\pi)$. On the right, point $q'$ is removed and $\theta_2 \in (-\pi, \pi)$.

• $\mathbf{S}^1$: The circle can be defined as a curve in $\mathbb{R}^2$ with coordinates $(\cos\theta, \sin\theta)$. Until now in our physics careers, we’ve been perfectly happy taking $\theta \in [0, 2\pi)$ as the coordinate on $\mathbf{S}^1$. But this coordinate does not meet our requirements to be a chart because $[0, 2\pi)$ is not an open set. This causes problems if we want to differentiate functions at $\theta = 0$; to do so we need to take limits from both sides but there is no coordinate with $\theta$ a little less than zero.

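To circumvent this, we need to use at least two charts to cover $\mathbf{S}^1$. For example, we could pick out two antipodal points, say $q = (1, 0)$ and $q' = (-1, 0)$. We take the first chart to cover $\mathcal{O}_1 = \mathbf{S}^1 - \{q\}$ with the map $\phi_1: \mathcal{O}_1 \to (0, 2\pi)$ defined by $\phi_1(p) = \theta_1$, as shown on the left-hand side of Figure 15. We take the second chart to cover $\mathcal{O}_2 = \mathbf{S}^1 - \{q'\}$ with the map $\phi_2: \mathcal{O}_2 \to (-\pi, \pi)$ defined by $\phi_2(p) = \theta_2$, as shown in the right-hand figure.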

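The two charts overlap on the upper and lower semicircles. The transition function is given by

$$\theta_2 = \phi_2\big(\phi_1^{-1}(\theta_1)\big) = \begin{cases} \theta_1 & \theta_1 \in (0, \pi) \\ \theta_1 - 2\pi & \theta_1 \in (\pi, 2\pi) \end{cases}$$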

The transition function isn’t defined at $\theta_1 = 0$, corresponding to the point $q$, nor at $\theta_1 = \pi$, corresponding to the point $q'$. Nonetheless, it is smooth on each of the two open intervals as required.

Figure 16: Two charts on a sphere. In the left-hand figure, we have removed the half great circle defined by $x^2 = 0$ with $x^1 \geq 0$, shown in red. In the right-hand figure, we have removed the half great circle $x^3 = 0$ with $x^1 \leq 0$, again shown in red.

• $\mathbf{S}^2$: It will be useful to think of the sphere as the surface $(x^1)^2 + (x^2)^2 + (x^3)^2 = 1$ embedded in Euclidean $\mathbb{R}^3$. The familiar coordinates on the sphere are those inherited from spherical polar coordinates of $\mathbb{R}^3$, namely

$$x^1 = \sin\theta\cos\varphi, \qquad x^2 = \sin\theta\sin\varphi, \qquad x^3 = \cos\theta \tag{2.56}$$

with $\theta \in [0, \pi]$ and $\varphi \in [0, 2\pi)$. But as with the circle described above, these are not open sets so will not do for our purpose. In fact, there are two distinct issues. If we focus on the equator at $\theta = \pi/2$, then the coordinate $\varphi$ parameterises a circle and suffers the same problem that we saw above. On top of this, at the north pole $\theta = 0$ and south pole $\theta = \pi$, the coordinate $\varphi$ is not well defined, since the value of $\theta$ has already specified the point uniquely. This manifests itself on Earth by the fact that all time zones coincide at the North pole. It’s one of the reasons people don’t have business meetings there.

Once again, we can resolve these issues by introducing two charts covering different patches on $\mathbf{S}^2$. The first chart applies to the sphere with a line of longitude removed, defined by $x^2 = 0$ and $x^1 \geq 0$, as shown in Figure 16. (Think of this as the dateline.) This means that neither the north nor the south pole is included in the open set $\mathcal{O}_1$. On this open set, we define a map $\phi_1: \mathcal{O}_1 \to \mathbb{R}^2$ using the coordinates (2.56), now with $\theta \in (0, \pi)$ and $\varphi \in (0, 2\pi)$, so that we have a map to an open subset of $\mathbb{R}^2$.

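We then define a second chart on a different open set $\mathcal{O}_2$, defined by $\mathbf{S}^2$ with the line $x^3 = 0$ and $x^1 \leq 0$ removed. Here we define the map $\phi_2: \mathcal{O}_2 \to \mathbb{R}^2$ using the coordinates

$$x^1 = -\sin\theta'\cos\varphi', \qquad x^2 = \cos\theta', \qquad x^3 = \sin\theta'\sin\varphi'$$

with $\theta' \in (0, \pi)$ and $\varphi' \in (0, 2\pi)$. Again this is a map to an open subset of $\mathbb{R}^2$. We have $\mathcal{O}_1 \cup \mathcal{O}_2 = \mathbf{S}^2$ while, on the overlap $\mathcal{O}_1 \cap \mathcal{O}_2$, the transition functions $\phi_1 \circ \phi_2^{-1}$ and $\phi_2 \circ \phi_1^{-1}$ are smooth. (We haven’t written these functions down explicitly, but it’s clear that they are built from $\cos$ and $\sin$ functions acting on domains where their inverses exist.)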
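The circle and sphere charts above are easy to check numerically. The sketch below makes the following choices.

- For $\mathbf{S}^1$ it uses the points $q = (1, 0)$ and $q' = (-1, 0)$ and builds each chart from Python's `math.atan2`.
- For $\mathbf{S}^2$ it uses (2.56) for the first chart and the rotated coordinates for the second.

All helper names are illustrative only. The code confirms three things. The transition function on the circle matches the piecewise formula. Every point on the removed lines of the sphere, including both poles, lies in the other chart. Composing the sphere charts on the overlap returns the coordinates we started with. Membership of the removed lines is tested with exact equality, which is adequate only for the exact sample points used here.

```python
import math

TWO_PI = 2 * math.pi

# S^1: the two charts of Figure 15

def circle_phi1(point):
    """Chart on S^1 - {q}, q = (1, 0); returns theta_1 in (0, 2 pi)."""
    theta = math.atan2(point[1], point[0])       # lies in [-pi, pi]
    return theta if theta > 0 else theta + TWO_PI


def circle_phi2(point):
    """Chart on S^1 - {q'}, q' = (-1, 0); returns theta_2 in (-pi, pi)."""
    return math.atan2(point[1], point[0])


def circle_transition(theta1):
    """theta_2 = phi_2(phi_1^{-1}(theta_1)), on the overlap theta_1 != pi."""
    return circle_phi2((math.cos(theta1), math.sin(theta1)))


def circle_transition_formula(theta1):
    """The piecewise expression for the transition function."""
    return theta1 if theta1 < math.pi else theta1 - TWO_PI


# S^2: the two charts of Figure 16

def sphere_phi1_inverse(theta, varphi):
    """(2.56) with theta in (0, pi), varphi in (0, 2 pi)."""
    return (math.sin(theta) * math.cos(varphi),
            math.sin(theta) * math.sin(varphi),
            math.cos(theta))


def sphere_phi1(x):
    theta = math.acos(max(-1.0, min(1.0, x[2])))
    return theta, math.atan2(x[1], x[0]) % TWO_PI


def sphere_phi2_inverse(theta_p, varphi_p):
    """Second chart, with theta' in (0, pi), varphi' in (0, 2 pi)."""
    return (-math.sin(theta_p) * math.cos(varphi_p),
            math.cos(theta_p),
            math.sin(theta_p) * math.sin(varphi_p))


def sphere_phi2(x):
    theta_p = math.acos(max(-1.0, min(1.0, x[1])))
    return theta_p, math.atan2(x[2], -x[0]) % TWO_PI


def in_O1(x):
    """O_1: the sphere minus the line x^2 = 0, x^1 >= 0."""
    return not (x[1] == 0 and x[0] >= 0)


def in_O2(x):
    """O_2: the sphere minus the line x^3 = 0, x^1 <= 0."""
    return not (x[2] == 0 and x[0] <= 0)


for theta1 in (0.1, 1.0, 3.0, 3.3, 5.0, 6.2):
    print(theta1, circle_transition(theta1), circle_transition_formula(theta1))

special = [(0, 0, 1), (0, 0, -1), (1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0)]
print(all(in_O1(x) or in_O2(x) for x in special))        # True

# Go to the second chart and back: should return (1.0, 2.0) up to rounding.
coords2 = sphere_phi2(sphere_phi1_inverse(1.0, 2.0))
print(sphere_phi1(sphere_phi2_inverse(*coords2)))
```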

Note that for both the $\mathbf{S}^1$ and $\mathbf{S}^2$ examples above, we made use of the fact that they can be viewed as embedded in a higher dimensional $\mathbb{R}^{n+1}$ to construct the charts. However, this isn’t necessary. The definition of a manifold makes no mention of a higher dimensional embedding and these manifolds should be viewed as having an existence independent of any embedding.

As you can see, there is a level of pedantry involved in describing these charts. (Mathematicians prefer the word “rigour”.) The need to deal with multiple charts arises only when we have manifolds of non-trivial topology; the manifolds $\mathbf{S}^1$ and $\mathbf{S}^2$ that we met above are particularly simple examples. When we come to discuss general relativity, we will care a lot about changing coordinates, and the limitations of certain coordinate systems, but our manifolds will turn out to be simple enough that, for all practical purposes, we can always find a single set of coordinates that tells us what we need to know. However, as we progress in physics, and topology becomes more important, so too does the idea of different charts. Perhaps the first place in physics where overlapping charts become an integral part of the discussion is the construction of a magnetic monopole. (See the lectures on Gauge Theory.)

2.1.3 Maps Between Manifolds

The advantage of locally mapping a manifold to $\mathbb{R}^n$ is that we can now import our knowledge of how to do maths on $\mathbb{R}^n$. For example, we know how to differentiate functions on $\mathbb{R}^n$, and what it means for functions to be smooth. This now translates directly into properties of functions defined over the manifold.

We say that a function $f: M \to \mathbb{R}$ is smooth if the map $f \circ \phi^{-1}: U \to \mathbb{R}$ is smooth for all charts $\phi$.

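Similarly, we say that a map $f: M \to N$ between two manifolds $M$ and $N$ (which may have different dimensions) is smooth if the map $\psi \circ f \circ \phi^{-1}: U \to V$ is smooth for all charts $\phi: M \to U \subset \mathbb{R}^{\dim M}$ and $\psi: N \to V \subset \mathbb{R}^{\dim N}$.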

A diffeomorphism is defined to be a smooth homeomorphism $f: M \to N$. In other words it is an invertible, smooth map between manifolds $M$ and $N$ that has a smooth inverse. If such a diffeomorphism exists then the manifolds $M$ and $N$ are said to be diffeomorphic. The existence of an inverse means $M$ and $N$ necessarily have the same dimension.

Manifolds which are homeomorphic can be continuously deformed into each other. But diffeomorphism is stronger: it requires that the map and its inverse are smooth. This gives rise to some curiosities. For example, it turns out that the sphere $\mathbf{S}^7$ can be covered by a number of different, incompatible atlases. The resulting manifolds are homeomorphic but not diffeomorphic. These are referred to as exotic spheres. Similarly, Euclidean space $\mathbb{R}^n$ has a unique differentiable structure, except for $\mathbb{R}^4$ where there are infinitely many inequivalent structures. I know of only one application of exotic spheres to physics (a subtle global gravitational anomaly in superstring theory) and I know of no applications of the exotic differential structure on $\mathbb{R}^4$. Certainly these will not play any role in these lectures.

2.2 Tangent Spaces

Our next task is to understand how to do calculus on manifolds. We start here with differentiation; it will take us a while longer to get to integration, which we will finally meet in Section 2.4.4.

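Consider a function $f: M \to \mathbb{R}$. To differentiate the function at some point $p$, we introduce a chart $\phi = (x^1, \ldots, x^n)$ in a neighbourhood of $p$. We can then construct the map $f \circ \phi^{-1}: U \to \mathbb{R}$ with $U \subset \mathbb{R}^n$. But we know how to differentiate functions on $\mathbb{R}^n$ and this gives us a way to differentiate functions on $M$, namely

$$\left.\frac{\partial f}{\partial x^\mu}\right|_p := \left.\frac{\partial (f \circ \phi^{-1})}{\partial x^\mu}\right|_{\phi(p)} \tag{2.57}$$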

Clearly this depends on the choice of chart $\phi$ and coordinates $x^\mu$. We would like to give a coordinate independent definition of differentiation, and then understand what happens when we choose to describe this object using different coordinates.

2.2.1 Tangent Vectors

We will consider smooth functions over a manifold $M$. We denote the set of all smooth functions as $C^\infty(M)$.

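Definition: A tangent vector $X_p$ is an object that differentiates functions at a point $p \in M$. Specifically, $X_p: C^\infty(M) \to \mathbb{R}$ satisfying

i) Linearity: $X_p(f + g) = X_p(f) + X_p(g)$ for all $f, g \in C^\infty(M)$.

ii) $X_p(f) = 0$ when $f$ is the constant function.

iii) Leibnizarity: $X_p(fg) = f(p)\, X_p(g) + X_p(f)\, g(p)$ for all $f, g \in C^\infty(M)$. This, of course, is the product rule.

Note that ii) and iii) combine to tell us that $X_p(af) = a\, X_p(f)$ for $a \in \mathbb{R}$.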

This definition is one of the early surprises in differential geometry. The surprise is really in the name “tangent vector”. We know what vectors are from undergraduate physics, and we know what differential operators are. But we’re not used to equating the two. Before we move on, it might be useful to think about how this definition fits with other notions of vectors that we’ve met before.

The first time we meet a vector in physics is usually in the context of Newtonian mechanics, where we describe the position of a particle as a vector $\mathbf{x}$ in $\mathbb{R}^3$. This concept of a vector is special to flat space and does not generalise to other manifolds. For example, a line connecting two points on a sphere is not a vector and, in general, there is no way to think of a point $p \in M$ as a vector. So we should simply forget that points in $\mathbb{R}^3$ can be thought of as vectors.

The next type of vector is the velocity of a particle, $\mathbf{v} = \dot{\mathbf{x}}$. This is more pertinent. It clearly involves differentiation and, moreover, is tangent to the curve traced out by the particle. As we will see below, velocities of particles are indeed examples of tangent vectors in differential geometry. More generally, tangent vectors tell us how things change in a given direction. They do this by differentiating.

It is simple to check that the object

$$\partial_\mu\big|_p := \left.\frac{\partial}{\partial x^\mu}\right|_p$$

which acts on functions as shown in (2.57) obeys all the requirements of a tangent vector.

Note that the index $\mu$ is now a subscript, rather than the superscript that we used for the coordinates $x^\mu$. (On the right-hand side, the superscript in $\partial/\partial x^\mu$ is in the denominator and counts as a subscript.) We will adopt the summation convention, where repeated indices are summed. But, as we will see, the placement of indices up or down will tell us something and all sums will necessarily have one index up and one index down. This is a convention that we met already in Special Relativity where the up/downness of the index changes minus signs. Here it has a more important role that we will see as we go on: the placement of the index tells us what kind of mathematical space the object lives in. For now, you should be aware that any equation with two repeated indices that are both up or both down is necessarily wrong, just as any equation with three or more repeated indices is wrong.

Theorem: The set of all tangent vectors at point $p$ forms an $n$-dimensional vector space. We call this the tangent space $T_p(M)$. The tangent vectors $\partial_\mu|_p$ provide a basis for $T_p(M)$. This means that we can write any tangent vector as

$$X_p = X^\mu\, \partial_\mu\big|_p$$

with $X^\mu = X_p(x^\mu)$ the components of the tangent vector in this basis.

Proof: Much of the proof is just getting straight what objects live in what spaces. Indeed, getting this straight is a large part of the subject of differential geometry. To start, we need a small lemma. We define the function $F = f \circ \phi^{-1}: U \to \mathbb{R}$, with $\phi = (x^1, \ldots, x^n)$ a chart on a neighbourhood of $p$. Then, in some (perhaps smaller) neighbourhood of $x(p) = \phi(p)$ we can always write the function $F$ as

$$F(x) = F\big(x(p)\big) + \big(x^\mu - x^\mu(p)\big)\, F_\mu(x) \tag{2.58}$$

where we have introduced $n$ new functions $F_\mu(x)$ and used the summation convention in the final term. If the function has a Taylor expansion then we can trivially write it in the form (2.58) by repackaging all the terms that are quadratic and higher into the $F_\mu(x)$ functions, keeping a linear term out front. But in fact there’s no need to assume the existence of a Taylor expansion. One way to see this is to note that for any function $G(t)$ we trivially have $G(1) = G(0) + \int_0^1 dt\, G'(t)$. But now apply this formula to the function $G(t) = F(tx)$ for some fixed $x$. This gives $F(x) = F(0) + x \int_0^1 dt\, F'(xt)$ which is precisely (2.58) for a function of a single variable expanded about the origin. The same method holds more generally.

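Given (2.58), we act with $\partial/\partial x^\nu$ on both sides, and then evaluate at $x^\mu = x^\mu(p)$. This tells us that the functions $F_\mu$ must satisfy

$$\left.\frac{\partial F}{\partial x^\nu}\right|_{x(p)} = F_\nu\big(x(p)\big) \tag{2.59}$$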

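We can translate this into a similar expression for $f$ itself. We define $n$ functions on $M$ by $f_\mu = F_\mu \circ \phi$. Then, for any $q \in M$ in the appropriate neighbourhood of $p$, (2.58) becomes

$$f \circ \phi^{-1}\big(x(q)\big) = f \circ \phi^{-1}\big(x(p)\big) + \big(x^\mu(q) - x^\mu(p)\big)\, f_\mu \circ \phi^{-1}\big(x(q)\big)$$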

But $\phi^{-1}\big(x(q)\big) = q$. So we find that, in the neighbourhood of $p$, it is always possible to write a function $f$ as

$$f(q) = f(p) + \big(x^\mu(q) - x^\mu(p)\big)\, f_\mu(q)$$

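for some $f_\mu(q)$. Note that, evaluated at $p$, we have

$$f_\mu(p) = F_\mu \circ \phi(p) = F_\mu\big(x(p)\big) = \left.\frac{\partial F}{\partial x^\mu}\right|_{x(p)} = \left.\frac{\partial f}{\partial x^\mu}\right|_p$$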

where in the last equality we used (2.57) and in the penultimate equality we used (2.59).

Now we can turn to the tangent vector $X_p$. This acts on the function $f$ to give

$$X_p(f) = X_p\Big(f(p) + \big(x^\mu - x^\mu(p)\big)\, f_\mu\Big)$$

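where we’ve dropped the arbitrary argument $q$ in $f(q)$, $x^\mu(q)$ and $f_\mu(q)$; these are the functions on which the tangent vector is acting. Using linearity and Leibnizarity, we have

$$X_p(f) = X_p\big(f(p)\big) + X_p\big(x^\mu - x^\mu(p)\big)\, f_\mu(p) + \big(x^\mu(p) - x^\mu(p)\big)\, X_p(f_\mu)$$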

The first term vanishes because $f(p)$ is just a constant and all tangent vectors vanish when acting on a constant. The final term vanishes as well because the Leibniz rule tells us to evaluate the function $x^\mu - x^\mu(p)$ at $p$. Finally, by linearity, the middle term includes a term $X_p\big(x^\mu(p)\big)$ which vanishes because $x^\mu(p)$ is just a constant. We’re left with

$$X_p(f) = X_p(x^\mu) \left.\frac{\partial f}{\partial x^\mu}\right|_p$$

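This means that the tangent vector $X_p$ can be written as

$$X_p = X^\mu \left.\frac{\partial}{\partial x^\mu}\right|_p$$

with $X^\mu = X_p(x^\mu)$ as promised. To finish, we just need to show that the $\partial_\mu|_p$ provide a basis for $T_p(M)$. From above, they span the space. To check linear independence, suppose that we have a vector $\alpha = \alpha^\mu \partial_\mu|_p = 0$. Then acting on $f = x^\nu$, this gives $\alpha(x^\nu) = \alpha^\mu \partial_\mu(x^\nu)|_p = \alpha^\nu = 0$. This concludes our proof.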
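The lemma (2.58) and the identity (2.59) can be illustrated numerically in one variable. The sketch builds $F_1(x) = \int_0^1 ds\, F'\big(x_0 + s(x - x_0)\big)$. This is the integral trick from the proof applied to $G(s) = F\big(x_0 + s(x - x_0)\big)$, so it expands about a general point $x_0$ rather than the origin. The test function and the use of Simpson's rule for the integral are our own choices.

```python
import math


def simpson(g, a, b, n=200):
    """Composite Simpson rule for the integral of g over [a, b] (n even)."""
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3


def F(x):
    return math.sin(x) + x ** 3          # an arbitrary smooth test function


def dF(x):
    return math.cos(x) + 3 * x ** 2


def F1(x, x0):
    """F_1(x) = integral_0^1 F'(x0 + s (x - x0)) ds, the function in (2.58)."""
    return simpson(lambda s: dF(x0 + s * (x - x0)), 0.0, 1.0)


x0, x = 0.5, 1.3
# (2.58) in one variable: F(x) = F(x0) + (x - x0) F_1(x)
print(F(x), F(x0) + (x - x0) * F1(x, x0))
# (2.59): at x = x0 the function F_1 reduces to the derivative F'(x0)
print(F1(x0, x0), dF(x0))
```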

Changing Coordinates

We have an ambivalent relationship with coordinates. We can’t calculate anything without them, but we don’t want to rely on them. The compromise we will come to is to consistently check that nothing physical depends on our choice of coordinates.

The key idea is that a given tangent vector $X_p$ exists independent of the choice of coordinate. However, the chosen basis $\{\partial_\mu|_p\}$ clearly depends on our choice of coordinates: to define it we had to first introduce a chart $\phi$ and coordinates $x^\mu$. A basis defined in this way is called, quite reasonably, a coordinate basis. At times we will work with other bases, which are not defined in this way. Unsurprisingly, these are referred to as non-coordinate bases. A particularly useful example of a non-coordinate basis, known as vielbeins, will be introduced in Section 3.4.2.

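Suppose that we picked a different chart $\tilde{\phi}$, with coordinates $\tilde{x}^\mu$ in the neighbourhood of $p$. We then have two different bases, and can express the tangent vector $X_p$ in terms of either,

$$X_p = X^\mu \left.\frac{\partial}{\partial x^\mu}\right|_p = \tilde{X}^\mu \left.\frac{\partial}{\partial \tilde{x}^\mu}\right|_p$$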

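The vector is the same, but the components of the vector change: they are $X^\mu$ in the first set of coordinates, and $\tilde{X}^\mu$ in the second. It is straightforward to determine the relationship between $X^\mu$ and $\tilde{X}^\mu$. To see this, we look at how the tangent vector $X_p$ acts on a function $f$,

$$X_p(f) = X^\mu \left.\frac{\partial f}{\partial x^\mu}\right|_p = X^\mu \left.\frac{\partial \tilde{x}^\nu}{\partial x^\mu}\right|_{\phi(p)} \left.\frac{\partial f}{\partial \tilde{x}^\nu}\right|_p$$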

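where we’ve used the chain rule. (Actually, we’ve been a little quick here. You can be more careful by introducing the functions $F = f \circ \phi^{-1}$ and $\tilde{F} = f \circ \tilde{\phi}^{-1}$ and using (2.57) to write $\partial F/\partial x^\mu = \partial(\tilde{F} \circ \tilde{\phi} \circ \phi^{-1})/\partial x^\mu$. The end result is the same. We will be similarly sloppy as we proceed, often conflating $f$ and $F$.) You can read this equation in one of two different ways. First, we can view this as a change in the basis vectors: they are related as

$$\left.\frac{\partial}{\partial x^\mu}\right|_p = \left.\frac{\partial \tilde{x}^\nu}{\partial x^\mu}\right|_{\phi(p)} \left.\frac{\partial}{\partial \tilde{x}^\nu}\right|_p \tag{2.60}$$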

Alternatively, we can view this as a change in the components of the vector, which transform as

$$\tilde{X}^\nu = X^\mu \left.\frac{\partial \tilde{x}^\nu}{\partial x^\mu}\right|_{\phi(p)} \tag{2.61}$$

Components of vectors that transform this way are sometimes said to be contravariant. I’ve always found this to be annoying terminology, in large part because I can never remember it. A more important point is that the form of (2.61) is essentially fixed once you remember that the index on $X^\mu$ sits up rather than down.
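The transformation law (2.61) can be checked numerically. For illustration, this sketch takes the two charts to be Cartesian coordinates $(x, y)$ and polar coordinates $(r, \theta)$ on part of $\mathbb{R}^2$. It transforms the components with the Jacobian $\partial \tilde{x}^\nu/\partial x^\mu$ and confirms that $X_p(f)$ comes out the same in both coordinate systems. Derivatives are estimated by central differences, and the function, point and components are arbitrary choices.

```python
import math


def f_cart(x, y):
    """An arbitrary test function, written in Cartesian coordinates (x, y)."""
    return x ** 2 * y + math.sin(y)


def f_polar(r, th):
    """The same function in polar coordinates (r, theta)."""
    return f_cart(r * math.cos(th), r * math.sin(th))


def partial(F, mu, point, h=1e-6):
    """Central-difference estimate of dF/dx^mu at a point."""
    plus, minus = list(point), list(point)
    plus[mu] += h
    minus[mu] -= h
    return (F(*plus) - F(*minus)) / (2 * h)


def polar_jacobian(x, y):
    """Entries d(x~^nu)/d(x^mu) with x~ = (r, theta), x = (x, y); row nu, column mu."""
    r2 = x * x + y * y
    r = math.sqrt(r2)
    return [[x / r, y / r],
            [-y / r2, x / r2]]


def transform_components(X, J):
    """(2.61): X~^nu = X^mu d(x~^nu)/d(x^mu)."""
    return [sum(J[nu][mu] * X[mu] for mu in range(2)) for nu in range(2)]


p = (1.2, -0.4)                        # the point, in Cartesian coordinates
p_polar = (math.hypot(*p), math.atan2(p[1], p[0]))
X = [0.7, 2.5]                         # components X^mu in the Cartesian basis
X_polar = transform_components(X, polar_jacobian(*p))

Xf_cart = sum(X[mu] * partial(f_cart, mu, p) for mu in range(2))
Xf_polar = sum(X_polar[nu] * partial(f_polar, nu, p_polar) for nu in range(2))
print(Xf_cart, Xf_polar)               # equal up to finite-difference error
```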

What Is It Tangent To?

So far, we haven’t really explained where the name “tangent vector” comes from. Consider a smooth curve in $M$ that passes through the point $p$. This is a map $\sigma: I \to M$, with $I$ an open interval $I \subset \mathbb{R}$. We will parameterise the curve as $\sigma(t)$ such that $\sigma(0) = p \in M$.

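With a given chart, this curve becomes $\phi \circ \sigma: I \to \mathbb{R}^n$, parameterised by $x^\mu(t)$. Before we learned any differential geometry, we would say that the tangent vector to the curve at $t = 0$ is

$$X^\mu = \left.\frac{dx^\mu(t)}{dt}\right|_{t=0}$$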

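But we can take these to be the components of the tangent vector $X_p$, which we define as

$$X_p = \left.\frac{dx^\mu(t)}{dt}\right|_{t=0} \left.\frac{\partial}{\partial x^\mu}\right|_p$$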

Our tangent vector now acts on functions $f \in C^\infty(M)$. It is telling us how fast any function changes as we move along the curve.
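Here is a small numerical check that the tangent vector built from a curve reproduces the rate of change of a function along that curve. The curve $\sigma(t) = (\cos 2t + t^2, \sin 2t)$ and the function $f$ are arbitrary choices made for illustration. For them $X^\mu = (0, 2)$ at $p = (1, 0)$, and both quantities equal $2e$.

```python
import math


def sigma(t):
    """An illustrative curve in R^2 with sigma(0) = p = (1, 0)."""
    return (math.cos(2 * t) + t ** 2, math.sin(2 * t))


def f(x, y):
    return math.exp(x) * y + y ** 3


h = 1e-5
# Components X^mu = dx^mu/dt at t = 0, by central differences.
X = [(sigma(h)[mu] - sigma(-h)[mu]) / (2 * h) for mu in range(2)]

# Partial derivatives of f at p.
p = sigma(0.0)
df = [(f(p[0] + h, p[1]) - f(p[0] - h, p[1])) / (2 * h),
      (f(p[0], p[1] + h) - f(p[0], p[1] - h)) / (2 * h)]

X_p_f = sum(X[mu] * df[mu] for mu in range(2))                # X^mu d_mu f at p
rate_along_curve = (f(*sigma(h)) - f(*sigma(-h))) / (2 * h)    # d f(sigma(t))/dt at 0
print(X, X_p_f, rate_along_curve)   # X close to [0, 2]; both rates close to 2e
```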

Any tangent vector $X_p$ can be written in this form. This gives meaning to the term “tangent space” for $T_p(M)$. It is, literally, the space of all possible tangents to curves passing through the point $p$. For example, a two dimensional manifold, embedded in $\mathbb{R}^3$, is shown in Figure 17. At each point $p \in M$, we can identify a vector space which is the tangent plane: this is $T_p(M)$.

As an aside, note that the mathematical definition of a tangent space makes no reference to embedding the manifold in some higher dimensional space. The tangent space is an object intrinsic to the manifold itself. (This is in contrast to the picture where it was unfortunately necessary to think about the manifold as embedded in $\mathbb{R}^3$.)

The tangent spaces $T_p(M)$ and $T_q(M)$ at different points $p \neq q$ are different. There’s no sense in which we can add vectors from one to vectors from the other. In fact, at this stage there is no way to even compare vectors in $T_p(M)$ to vectors in $T_q(M)$. They are simply different spaces. As we proceed, we will make some effort to figure out ways to get around this.

2.2.2 Vector Fields

So far we have only defined tangent vectors at a point $p \in M$. It is useful to consider an object in which there is a choice of tangent vector for every point $p \in M$. In physics, we call objects that vary over space fields.

A vector field $X$ is defined to be a smooth assignment of a tangent vector $X_p$ to each point $p \in M$. This means that if you feed a function to a vector field, then it spits back another function, which is the differentiation of the first. In symbols, a vector field is therefore a map $X: C^\infty(M) \to C^\infty(M)$. The function $X(f)$ is defined by

$$\big(X(f)\big)(p) = X_p(f)$$

The space of all vector fields on $M$ is denoted $\mathfrak{X}(M)$.

Given a coordinate basis, we can expand any vector field as

$$X = X^\mu \frac{\partial}{\partial x^\mu} \tag{2.62}$$

where the $X^\mu$ are now smooth functions on $M$.

Strictly speaking, the expression (2.62) only defines a vector field on the open set $\mathcal{O} \subset M$ covered by the chart, rather than the whole manifold. We may have to patch this together with other charts to cover all of $M$.

The Commutator

Given two vector fields $X, Y \in \mathfrak{X}(M)$, we can’t multiply them together to get a new vector field. Roughly speaking, this is because the product $XY$ is a second order differential operator rather than a first order operator. This reveals itself in a failure of Leibnizarity for the object $XY$,

$$XY(fg) = X\big(f\,Y(g) + Y(f)\,g\big) = X(f)\,Y(g) + f\,XY(g) + g\,XY(f) + X(g)\,Y(f)$$

This is not the same as $f\,XY(g) + g\,XY(f)$ that Leibniz requires.

However, we can build a new vector field by taking the commutator $[X, Y]$, which acts on functions as

$$[X, Y]f = X\big(Y(f)\big) - Y\big(X(f)\big)$$

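This is also known as the Lie bracket. Evaluated in a coordinate basis, the commutator is given by

$$[X, Y](f) = X^\mu \frac{\partial}{\partial x^\mu}\left(Y^\nu \frac{\partial f}{\partial x^\nu}\right) - Y^\mu \frac{\partial}{\partial x^\mu}\left(X^\nu \frac{\partial f}{\partial x^\nu}\right) = \left(X^\mu \frac{\partial Y^\nu}{\partial x^\mu} - Y^\mu \frac{\partial X^\nu}{\partial x^\mu}\right)\frac{\partial f}{\partial x^\nu}$$

where the terms involving second derivatives of $f$ cancel because partial derivatives commute.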

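This holds for all $f \in C^\infty(M)$, so we’re at liberty to write

$$[X, Y] = \left(X^\mu \frac{\partial Y^\nu}{\partial x^\mu} - Y^\mu \frac{\partial X^\nu}{\partial x^\mu}\right)\frac{\partial}{\partial x^\nu} \tag{2.63}$$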

It is not difficult to check that the commutator obeys the Jacobi identity

$$[X, [Y, Z]] + [Y, [Z, X]] + [Z, [X, Y]] = 0$$

This ensures that the set of all vector fields on a manifold has the mathematical structure of a Lie algebra.
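The formula (2.63) can be tested against the defining expression $X(Y(f)) - Y(X(f))$. The sketch below represents a vector field on $\mathbb{R}^2$ as a list of component functions. This representation is an assumption of the example, not a construction from the text. All derivatives are estimated by central differences. For $X = y\,\partial_x + x\,\partial_y$ and $Y = xy\,\partial_x + \partial_y$, (2.63) gives $[X, Y] = (x^2 + y^2 - 1)\,\partial_x - xy\,\partial_y$. The second-order combination $X(Y(f)) - Y(X(f))$ agrees with this first-order operator.

```python
import math

H = 1e-4   # finite-difference step


def partial(F, mu, p, h=H):
    """Central-difference estimate of dF/dx^mu at the point p (a tuple)."""
    plus, minus = list(p), list(p)
    plus[mu] += h
    minus[mu] -= h
    return (F(tuple(plus)) - F(tuple(minus))) / (2 * h)


def act(X, f):
    """Return the function X(f) = X^mu d_mu f, with X a list of component functions."""
    return lambda p: sum(X[mu](p) * partial(f, mu, p) for mu in range(len(X)))


def bracket(X, Y):
    """Component functions of [X, Y] from (2.63): X^mu d_mu Y^nu - Y^mu d_mu X^nu."""
    n = len(X)

    def component(nu):
        return lambda p: sum(X[mu](p) * partial(Y[nu], mu, p)
                             - Y[mu](p) * partial(X[nu], mu, p)
                             for mu in range(n))

    return [component(nu) for nu in range(n)]


X = [lambda p: p[1], lambda p: p[0]]          # X = y d_x + x d_y
Y = [lambda p: p[0] * p[1], lambda p: 1.0]    # Y = xy d_x + d_y


def f(p):
    return math.sin(p[0]) * math.exp(p[1])


p = (0.3, -0.7)
second_order = act(X, act(Y, f))(p) - act(Y, act(X, f))(p)   # X(Y(f)) - Y(X(f))
first_order = act(bracket(X, Y), f)(p)                       # [X, Y]^nu d_nu f
components = [c(p) for c in bracket(X, Y)]                   # expect (x^2 + y^2 - 1, -xy)
print(second_order, first_order, components)
```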

2.2.3 Integral Curves

There is a slightly different way of thinking about vector fields on a manifold. A flow on $M$ is a one-parameter family of diffeomorphisms $\sigma_t: M \to M$ labelled by $t \in \mathbb{R}$. These maps have the properties that $\sigma_{t=0}$ is the identity map, and $\sigma_s \circ \sigma_t = \sigma_{s+t}$. These two requirements ensure that $\sigma_{-t} = \sigma_t^{-1}$. Such a flow gives rise to streamlines on the manifold. We will further require that these streamlines are smooth.

We can then define a vector field by taking the tangent to the streamlines at each point. In a given coordinate system, the components of the vector field are

$$\frac{dx^\mu(t)}{dt} = X^\mu\big(x(t)\big) \tag{2.64}$$

where I’ve abused notation a little and written $x^\mu(t)$ rather than the more accurate but cumbersome $x^\mu(\sigma_t)$. This will become a habit, with the coordinates $x^\mu$ often used to refer to the point $p \in M$.

A flow gives rise to a vector field. Alternatively, given a vector field $X^\mu(x)$, we can integrate the differential equation (2.64), subject to an initial condition $x^\mu(0) = x^\mu_{\rm initial}$, to generate streamlines which start at $x^\mu_{\rm initial}$. These streamlines are called integral curves, generated by $X$.


In what follows, we will only need the infinitesimal flow generated by $X$. This is simply

$$x^\mu(t) = x^\mu(0) + t\,X^\mu(x) + \mathcal{O}(t^2) \tag{2.65}$$

Indeed, differentiating this with respect to $t$ reproduces (2.64) to leading order in $t$.

(An aside: Given a vector field $X$, it may not be possible to integrate (2.64) to generate a flow defined for all $t \in \mathbb{R}$. For example, consider $M = \mathbb{R}$ with the vector field $X = x^2\,\partial_x$. The equation $dx/dt = x^2$, subject to the initial condition $x(0) = x_0$, has the unique solution $x(t) = x_0/(1 - x_0 t)$ which diverges at $t = 1/x_0$. Vector fields which generate a flow for all $t \in \mathbb{R}$ are called complete. It turns out that all vector fields on a manifold $M$ are complete if $M$ is compact. Roughly speaking, “compact” means that $M$ doesn’t “stretch to infinity”. More precisely, a topological space $M$ is compact if, for any family of open sets covering $M$, there always exists a finite sub-family which also covers $M$. So $\mathbb{R}$ is not compact because the family of sets $\{(-n, n),\ n = 1, 2, \ldots\}$ covers $\mathbb{R}$ but has no finite sub-family that covers $\mathbb{R}$. Similarly, $\mathbb{R}^n$ is non-compact. However, $\mathbf{S}^n$ and $\mathbf{T}^n$ are compact manifolds.)
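The blow-up in the aside is easy to see numerically. This sketch integrates $dx/dt = x^2$ with a classical fourth-order Runge-Kutta scheme and compares the result with $x(t) = x_0/(1 - x_0 t)$ for $x_0 = 1$, where the solution diverges at $t = 1$. The choice of integrator is ours; any reasonable ODE solver would do.

```python
def rk4_solve(F, x0, t_end, n=10_000):
    """Integrate dx/dt = F(x) from t = 0 to t_end with n classical RK4 steps."""
    dt = t_end / n
    x = x0
    for _ in range(n):
        k1 = F(x)
        k2 = F(x + 0.5 * dt * k1)
        k3 = F(x + 0.5 * dt * k2)
        k4 = F(x + dt * k3)
        x += dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return x


def F(x):
    """The component of X = x^2 d_x on M = R."""
    return x ** 2


x0 = 1.0
for t in (0.5, 0.9, 0.99):
    exact = x0 / (1 - x0 * t)      # grows without bound as t -> 1/x0 = 1
    print(t, rk4_solve(F, x0, t), exact)
```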

We can look at some examples.

-

•

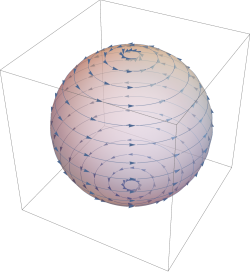

Consider the sphere in polar coordinates with the vector field . The integral curves solve the equation (2.64), which are

This has the solution and . The associated one-parameter diffeomorphism is , and the flow lines are simply lines of constant latitude on the sphere and are shown in the left-hand figure.

-

•

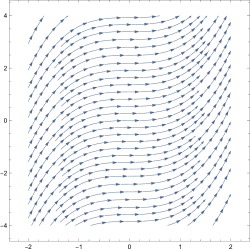

Alternatively, consider the vector field on with Cartesian components . The equation for the integral curves is now

which has the solution and . The associated flow lines are shown in the right-hand figure.
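For the second example the flow can be written in closed form, $\sigma_t(x_0, y_0) = (x_0\cosh t + y_0\sinh t,\ y_0\cosh t + x_0\sinh t)$. The sketch below checks three things. Integrating (2.64) numerically reproduces this flow. The flow satisfies $\sigma_s \circ \sigma_t = \sigma_{s+t}$. The first-order expression (2.65) is accurate up to corrections of order $t^2$. The helper names and the fourth-order Runge-Kutta integrator are our own choices.

```python
import math


def X(p):
    """Components X^mu = (y, x) of the vector field on R^2."""
    return (p[1], p[0])


def integrate(p, t, n=1000):
    """Integral curve of X starting at p, by classical RK4 with n steps."""
    dt = t / n
    x, y = p
    for _ in range(n):
        k1 = X((x, y))
        k2 = X((x + 0.5 * dt * k1[0], y + 0.5 * dt * k1[1]))
        k3 = X((x + 0.5 * dt * k2[0], y + 0.5 * dt * k2[1]))
        k4 = X((x + dt * k3[0], y + dt * k3[1]))
        x += dt * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
        y += dt * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
    return (x, y)


def sigma(t, p):
    """The exact flow: (x0 cosh t + y0 sinh t, y0 cosh t + x0 sinh t)."""
    x0, y0 = p
    return (x0 * math.cosh(t) + y0 * math.sinh(t),
            y0 * math.cosh(t) + x0 * math.sinh(t))


p = (0.5, -0.2)
print(integrate(p, 1.3), sigma(1.3, p))              # agree closely
print(sigma(0.4, sigma(0.9, p)), sigma(1.3, p))      # sigma_s o sigma_t = sigma_{s+t}
t = 1e-3
deviation = [a - (b + t * c) for a, b, c in zip(sigma(t, p), p, X(p))]
print(deviation)                                     # O(t^2), cf. (2.65)
```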

2.2.4 The Lie Derivative

So far we have learned how to differentiate a function. This requires us to introduce a vector field $X$, and the new function $X(f)$ can be viewed as the derivative of $f$ in the direction of $X$.

Next we ask: is it possible to differentiate a vector field? Specifically, suppose that we have a second vector field $Y$. How can we differentiate this in the direction of $X$ to get a new vector field? As we’ve seen, we can’t just write down $XY$ because this doesn’t define a new vector field.

To proceed, we should think more carefully about what differentiation means. For a function $f(x)$ on $\mathbb{R}$, we compare the values of the function at nearby points, and see what happens as those points approach each other

$$\frac{df}{dx} = \lim_{t \to 0} \frac{f(x + t) - f(x)}{t}$$

Similarly, to differentiate a vector field, we need to subtract the tangent vector $Y_p \in T_p(M)$ from the tangent vector at some nearby point $Y_q \in T_q(M)$, and then see what happens in the limit $q \to p$. But that’s problematic because, as we stressed above, the vector spaces $T_p(M)$ and $T_q(M)$ are different, and it makes no sense to subtract vectors in one from vectors in the other. To make progress, we’re going to have to find a way to do this. Fortunately, there is a way.

Push-Forward and Pull-Back

Suppose that we have a map $\varphi: M \to N$ between two manifolds $M$ and $N$ which we will take to be a diffeomorphism. This allows us to import various structures on one manifold to the other.

For example, if we have a function $f: N \to \mathbb{R}$, then we can construct a new function that we denote $(\varphi^* f): M \to \mathbb{R}$,

$$(\varphi^* f)(p) = f\big(\varphi(p)\big)$$

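Using the map in this way to drag objects originally defined on $N$ onto $M$ is called the pull-back. If we introduce coordinates $x^\mu$ on $M$ and $y^\alpha$ on $N$, then the map is $\varphi: x^\mu \to y^\alpha(x)$, and we can write

$$(\varphi^* f)(x) = f\big(y(x)\big)$$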

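Some objects more naturally go the other way. For example, given a vector field $Y$ on $M$, we can define a new vector field $(\varphi_* Y)$ on $N$. If we are given a function $f: N \to \mathbb{R}$, then the vector field $(\varphi_* Y)$ on $N$ acts as

$$(\varphi_* Y)(f) = Y(\varphi^* f)$$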

where I’ve been a little sloppy in the notation here since the left-hand side is a function on $N$ and the right-hand side a function on $M$. The equality above holds when evaluated at the appropriate points: $\big[(\varphi_* Y)(f)\big]\big(\varphi(p)\big) = \big[Y(\varphi^* f)\big](p)$. Using the map $\varphi$ to push objects on $M$ onto $N$ is called the push-forward.

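If $Y = Y^\mu\, \partial/\partial x^\mu$ is the vector field on $M$, we can write the induced vector field on $N$ as

$$(\varphi_* Y)(f) = Y^\mu \frac{\partial f\big(y(x)\big)}{\partial x^\mu} = Y^\mu \frac{\partial y^\alpha}{\partial x^\mu}\frac{\partial f(y)}{\partial y^\alpha}$$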

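Written in components, $(\varphi_* Y) = (\varphi_* Y)^\alpha\, \partial/\partial y^\alpha$, we then have

$$(\varphi_* Y)^\alpha = Y^\mu \frac{\partial y^\alpha}{\partial x^\mu} \tag{2.66}$$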
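To see (2.66) at work, the sketch below takes a hypothetical diffeomorphism $\varphi: \mathbb{R}^2 \to \mathbb{R}^2$, $y = \big(x^1 + (x^2)^2,\ x^2\big)$, whose inverse is $x = \big(y^1 - (y^2)^2,\ y^2\big)$, together with an arbitrary vector field $Y$ and function $f$. It pushes the components forward with the Jacobian $\partial y^\alpha/\partial x^\mu$. It then checks that $(\varphi_* Y)(f)$ at $\varphi(p)$ equals $Y(\varphi^* f)$ at $p$, with derivatives estimated by central differences.

```python
import math

H = 1e-6   # finite-difference step


def varphi(x):
    """Illustrative diffeomorphism of R^2: y = (x^1 + (x^2)^2, x^2)."""
    return (x[0] + x[1] ** 2, x[1])


def jacobian(x):
    """Entries dy^alpha/dx^mu of the map above; row alpha, column mu."""
    return ((1.0, 2 * x[1]),
            (0.0, 1.0))


def Y(x):
    """Components Y^mu of an arbitrary vector field on M."""
    return (math.sin(x[1]), x[0])


def f(y):
    """An arbitrary function on N."""
    return y[0] * y[1] ** 2 + math.cos(y[0])


def pullback_f(x):
    """varphi^* f = f o varphi, a function on M."""
    return f(varphi(x))


def gradient(F, point, h=H):
    """Central-difference estimates of dF/dx^mu at a point of R^2."""
    grad = []
    for mu in range(2):
        plus, minus = list(point), list(point)
        plus[mu] += h
        minus[mu] -= h
        grad.append((F(plus) - F(minus)) / (2 * h))
    return grad


p = (0.4, 1.1)
J = jacobian(p)
pushed = [sum(J[a][mu] * Y(p)[mu] for mu in range(2)) for a in range(2)]   # (2.66)

lhs = sum(c * d for c, d in zip(pushed, gradient(f, varphi(p))))   # (varphi_* Y)(f) at varphi(p)
rhs = sum(c * d for c, d in zip(Y(p), gradient(pullback_f, p)))    # Y(varphi^* f) at p
print(lhs, rhs)   # equal up to finite-difference error
```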

Given the way that the indices are contracted, this is more or less the only thing we could write down.

We’ll see other examples of these induced maps later in the lectures. The push-forward is always denoted as $\varphi_*$ and goes in the same direction as the original map. The pull-back is always denoted as $\varphi^*$ and goes in the opposite direction to the original map. Importantly, if our map is a diffeomorphism, then we also have $\varphi^{-1}: N \to M$, so we can transport any object from $M$ to $N$ and back again with impunity.

Constructing the Lie Derivative

Now we can use these ideas to help build a derivative. Suppose that we are given a vector field $X$ on $M$. This generates a flow $\sigma_t: M \to M$, which is a map between manifolds, now with $N = M$. This means that we can use (2.66) to generate a push-forward map from $T_p(M)$ to $T_{\sigma_t(p)}(M)$. But this is exactly what we need if we want to compare tangent vectors at neighbouring points. The resulting differential operator is called the Lie derivative and is denoted $\mathcal{L}_X$.

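It will turn out that we can use these ideas to differentiate many different kinds of objects. As a warm-up, let’s first see how an analogous construction allows us to differentiate functions. The Lie derivative of a function is defined by

$$\mathcal{L}_X f = \lim_{t \to 0} \frac{f\big(\sigma_t(x)\big) - f(x)}{t} = \left.\frac{d f\big(\sigma_t(x)\big)}{dt}\right|_{t=0}$$

But, using (2.64), we know that $dx^\mu/dt = X^\mu$. We then have

$$\mathcal{L}_X f = X^\mu(x)\, \frac{\partial f}{\partial x^\mu} = X(f) \tag{2.67}$$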

In other words, acting on functions with the Lie derivative $\mathcal{L}_X$ coincides with the action of the vector field $X$.

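Now let’s look at the action of $\mathcal{L}_X$ on a vector field $Y$. This is defined by

$$\mathcal{L}_X Y = \lim_{t \to 0} \frac{\big((\sigma_{-t})_* Y\big)_p - Y_p}{t}$$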

Note the minus sign in $\sigma_{-t}$. This reflects the fact that vector fields are pushed, rather than pulled. The map $\sigma_t$ takes us from the point $p \in M$ to the point $\sigma_t(p) \in M$. But to push a tangent vector $Y_{\sigma_t(p)} \in T_{\sigma_t(p)}(M)$ to a tangent vector in $T_p(M)$, where it can be compared to $Y_p$, we need to push with the inverse map $(\sigma_{-t})_*$. This is shown in Figure 20.

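Let’s first calculate the action of $\mathcal{L}_X$ on a coordinate basis $\partial_\mu = \partial/\partial x^\mu$. We have

$$\mathcal{L}_X \partial_\mu = \lim_{t \to 0} \frac{(\sigma_{-t})_* \partial_\mu - \partial_\mu}{t} \tag{2.68}$$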

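We have an expression for the push-forward of a tangent vector in (2.66), where the coordinates $y^\alpha$ on $N$ should now be replaced by the infinitesimal change of coordinates induced by the flow $\sigma_{-t}$ which, from (2.65), is $y^\nu = x^\nu - t\,X^\nu + \ldots$. Note the minus sign, which comes from the fact that we have to map back to where we came from as shown in Figure 20. We have, for small $t$,

$$(\sigma_{-t})_* \partial_\mu = \frac{\partial y^\nu}{\partial x^\mu}\,\partial_\nu = \left(\delta^\nu_\mu - t\,\frac{\partial X^\nu}{\partial x^\mu} + \ldots\right)\partial_\nu$$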

Acting on a coordinate basis, we then have

$$\mathcal{L}_X \partial_\mu = -\frac{\partial X^\nu}{\partial x^\mu}\,\partial_\nu \tag{2.69}$$

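To determine the action of $\mathcal{L}_X$ on a general vector field $Y$, we use the fact that the Lie derivative obeys the usual properties that we expect of a derivative, including linearity, $\mathcal{L}_X(Y_1 + Y_2) = \mathcal{L}_X Y_1 + \mathcal{L}_X Y_2$, and Leibnizarity $\mathcal{L}_X(fY) = f\,\mathcal{L}_X Y + (\mathcal{L}_X f)\,Y$ for any function $f$, both of which follow from the definition. The action on a general vector field $Y = Y^\mu \partial_\mu$ can then be written as

$$\mathcal{L}_X(Y^\mu \partial_\mu) = (\mathcal{L}_X Y^\mu)\,\partial_\mu + Y^\mu\,(\mathcal{L}_X \partial_\mu)$$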

where we’ve simply viewed the components as functions. We can use (2.67) to determine and we’ve computed in (2.69). We then have

But this is precisely the structure of the commutator. We learn that the Lie derivative acting on vector fields is given by

A corollary of this is

$$\mathcal{L}_X\mathcal{L}_Y Z - \mathcal{L}_Y\mathcal{L}_X Z = \mathcal{L}_{[X,Y]}Z \tag{2.70}$$

which follows from the Jacobi identity for commutators.
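The identification $\mathcal{L}_X Y = [X, Y]$ can be checked numerically. The following is a minimal finite-difference sketch on $\mathbb{R}^2$. It assumes vector fields are given as Python functions returning their components. The helper names, the step size `h`, and the two example pairs of fields are hypothetical choices. For fields whose components are at most quadratic, central differences are exact up to rounding, so the results should match the hand calculations quoted in the comments.

```python
# Sketch only: hypothetical helper names, finite differences on R^2.

def partial(F, p, nu, h=1e-5):
    """Central-difference approximation to d_nu F at p, for vector-valued F."""
    pp, pm = list(p), list(p)
    pp[nu] += h
    pm[nu] -= h
    return [(a - b) / (2 * h) for a, b in zip(F(pp), F(pm))]


def lie_bracket(X, Y, p):
    """Components of [X, Y] = X^nu d_nu Y^mu - Y^nu d_nu X^mu at the point p."""
    n = len(p)
    Xp, Yp = X(p), Y(p)
    dY = [partial(Y, p, nu) for nu in range(n)]   # dY[nu][mu] = d_nu Y^mu
    dX = [partial(X, p, nu) for nu in range(n)]   # dX[nu][mu] = d_nu X^mu
    return [sum(Xp[nu] * dY[nu][mu] - Yp[nu] * dX[nu][mu] for nu in range(n))
            for mu in range(n)]


p = [0.3, -1.2]

# Rotation generator and a constant field: by hand, [X, Y] = -d_x X = (0, -1).
rot = lambda q: [-q[1], q[0]]
const = lambda q: [1.0, 0.0]
b1 = lie_bracket(rot, const, p)

# X = (xy, 0), Y = (0, x): by hand, [X, Y] = (-x^2, xy).
X2 = lambda q: [q[0] * q[1], 0.0]
Y2 = lambda q: [0.0, q[0]]
b2 = lie_bracket(X2, Y2, p)
b2_reversed = lie_bracket(Y2, X2, p)   # antisymmetry: [Y, X] = -[X, Y]
```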

The Lie derivative is just one of several derivatives that we will meet in this course. As we introduce new objects, we will learn how to act with $\mathcal{L}_X$ on them. But we will also see that we can attach different meanings to the idea of differentiation. In fact, the Lie derivative will take something of a back seat until Section 4.3, when we will see that it is what we need to understand symmetries.

2.3 Tensors

For any vector space $V$, the dual vector space $V^*$ is the space of all linear maps from $V$ to $\mathbb{R}$.

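This is a standard mathematical construction, but even if you haven’t seen it before it should resonate with something you know from quantum mechanics. There we have states in a Hilbert space $\mathcal{H}$ with kets $|\psi\rangle$ and a dual Hilbert space $\mathcal{H}^*$ with bras $\langle\phi|$. Any bra can be viewed as a map $\langle\phi|: \mathcal{H}\to\mathbb{C}$ defined by $\langle\phi|\left(|\psi\rangle\right) = \langle\phi|\psi\rangle$.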

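In general, suppose that we are given a basis $\{e_\mu\}$, $\mu = 1,\ldots,n$, of $V$. Then we can introduce a dual basis $\{f^\mu\}$ for $V^*$ defined by

$$f^\nu(e_\mu) = \delta^\nu_\mu$$

A general vector in $V$ can be written as $X = X^\mu e_\mu$, and $f^\nu(X) = X^\mu f^\nu(e_\mu) = X^\nu$. Given a basis, this construction provides an isomorphism between $V$ and $V^*$ given by $e_\mu\mapsto f^\mu$. But the isomorphism is basis dependent. Pick a different basis, and you’ll get a different map.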
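A small numerical sketch can make the dual basis concrete. It assumes $V = \mathbb{R}^2$, with the basis vectors $e_\mu$ stored as the columns of a matrix $E$. The dual basis covectors $f^\nu$ are then the rows of $E^{-1}$, since $(E^{-1}E)^\nu{}_\mu = \delta^\nu_\mu$. The helper names (`inverse_2x2`, `dual_basis`, `pair`) and the numerical basis are hypothetical choices for illustration. The final lines illustrate the basis dependence: changing only $e_2$ also changes $f^1$.

```python
# Sketch only: hypothetical helper names; basis vectors are the columns of E.

def inverse_2x2(m):
    """Inverse of a 2x2 matrix stored as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("basis vectors are linearly dependent")
    return [[d / det, -b / det], [-c / det, a / det]]


def dual_basis(E):
    """Rows of E^{-1}: row nu holds the components of the covector f^nu."""
    return inverse_2x2(E)


def pair(f, e):
    """Evaluate a covector on a vector, f(e) = f_mu e^mu."""
    return sum(fi * ei for fi, ei in zip(f, e))


# Basis e_1 = (1, 0), e_2 = (1, 2), stored as the columns of E.
E = [[1.0, 1.0],
     [0.0, 2.0]]
F = dual_basis(E)
basis = [[E[0][j], E[1][j]] for j in range(2)]          # e_mu as lists
pairings = [[pair(F[nu], basis[mu]) for mu in range(2)] for nu in range(2)]

# Change only e_2 to (0, 2): the covector f^1 changes as well.
F_other = dual_basis([[1.0, 0.0],
                      [0.0, 2.0]])
```

The table of pairings comes out as the identity matrix, which is the defining property $f^\nu(e_\mu) = \delta^\nu_\mu$.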

We can repeat the construction and consider $(V^*)^*$, which is the space of all linear maps from $V^*$ to $\mathbb{R}$. But this space is naturally isomorphic to $V$, meaning that the isomorphism is independent of the choice of basis. To see this, suppose that $X\in V$ and $\omega\in V^*$. This means that $\omega(X)\in\mathbb{R}$. But we can equally as well view $X\in(V^*)^*$ and define $X(\omega) = \omega(X)\in\mathbb{R}$. In this sense, $(V^*)^* = V$.

2.3.1 Covectors and One-Forms

At each point $p\in M$, we have a vector space $T_p(M)$. The dual of this space, $T^*_p(M)$, is called the cotangent space at $p$, and an element of this space is called a cotangent vector, sometimes shortened to covector. Given a basis $\{e_\mu\}$ of $T_p(M)$, we can introduce the dual basis $\{f^\mu\}$ for $T^*_p(M)$ and expand any covector as $\omega = \omega_\mu f^\mu$.

We can also construct fields of cotangent vectors, by picking a member of $T^*_p(M)$ for each point $p$ in a smooth manner. Such a cotangent field is better known as a one-form; they map vector fields to real numbers. The set of all one-forms on $M$ is denoted $\Lambda^1(M)$.

There is a particularly simple way to construct a one-form. Take a function $f\in C^\infty(M)$ and define $df\in\Lambda^1(M)$ by

$$df(X) = X(f) \tag{2.71}$$

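We can use this method to build a basis for $\Lambda^1(M)$. Suppose that we introduce coordinates $x^\mu$ on $M$ with the corresponding coordinate basis $e_\mu = \partial/\partial x^\mu$ of vector fields, which we often write in shorthand as $\partial_\mu$. We then simply take the functions $f = x^\mu$ which, from (2.71), gives

$$dx^\mu(\partial_\nu) = \frac{\partial x^\mu}{\partial x^\nu} = \delta^\mu_\nu$$

This means that $f^\mu = dx^\mu$ provides a basis for $\Lambda^1(M)$, dual to the coordinate basis $\partial_\mu$. In general, an arbitrary one-form $\omega\in\Lambda^1(M)$ can then be expanded as

$$\omega = \omega_\mu\,dx^\mu$$

In such a basis the one-form $df$ takes the form

$$df = \frac{\partial f}{\partial x^\mu}\,dx^\mu \tag{2.72}$$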

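As with vector fields, we can look at what happens if we change coordinates. Given two different charts, $\phi = (x^1,\ldots,x^n)$ and $\tilde\phi = (\tilde x^1,\ldots,\tilde x^n)$, we know that the basis for vector fields changes as (2.60),

$$\frac{\partial}{\partial\tilde x^\mu} = \frac{\partial x^\nu}{\partial\tilde x^\mu}\,\frac{\partial}{\partial x^\nu}$$

We should take the basis of one-forms to transform in the inverse manner,

$$d\tilde x^\mu = \frac{\partial\tilde x^\mu}{\partial x^\nu}\,dx^\nu \tag{2.73}$$

This then ensures that

$$d\tilde x^\mu\left(\frac{\partial}{\partial\tilde x^\nu}\right) = \frac{\partial\tilde x^\mu}{\partial x^\rho}\,dx^\rho\left(\frac{\partial x^\sigma}{\partial\tilde x^\nu}\,\frac{\partial}{\partial x^\sigma}\right) = \frac{\partial\tilde x^\mu}{\partial x^\rho}\,\frac{\partial x^\sigma}{\partial\tilde x^\nu}\,\delta^\rho_\sigma = \frac{\partial\tilde x^\mu}{\partial x^\rho}\,\frac{\partial x^\rho}{\partial\tilde x^\nu}$$

But this is just the multiplication of a matrix and its inverse,

$$\frac{\partial\tilde x^\mu}{\partial x^\rho}\,\frac{\partial x^\rho}{\partial\tilde x^\nu} = \delta^\mu_\nu$$

So we find that

$$d\tilde x^\mu\left(\frac{\partial}{\partial\tilde x^\nu}\right) = \delta^\mu_\nu$$

as it should. We can then expand a one-form $\omega$ in either of these two bases,

$$\omega = \omega_\mu\,dx^\mu = \tilde\omega_\mu\,d\tilde x^\mu\quad\text{with}\quad\tilde\omega_\mu = \frac{\partial x^\nu}{\partial\tilde x^\mu}\,\omega_\nu \tag{2.74}$$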

In the annoying terminology that I can never remember, components of vectors that transform this way are said to be covariant. Note that, as with vector fields, the placement of the indices means that (2.73) and (2.74) are pretty much the only things that you can write down that make sense.
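As an illustration of (2.74), the sketch below assumes the change from Cartesian coordinates $(x,y)$ to polar coordinates $(r,\theta)$, valid away from the origin. It uses the example one-form $\omega = df$ with $f = x^2 + y^2$. Since $f = r^2$, we expect $\tilde\omega_r = 2r$ and $\tilde\omega_\theta = 0$. The sketch also checks that the number $\omega(V)$ is the same whichever basis we use to compute it. The function names are hypothetical helpers, not anything defined in the lectures.

```python
import math

# Sketch only: hypothetical helpers for the Cartesian -> polar change of basis.


def cartesian_components(x, y):
    """omega = df for f = x^2 + y^2, i.e. omega = 2x dx + 2y dy."""
    return [2 * x, 2 * y]


def jacobian(r, theta):
    """J[mu][nu] = d x^nu / d xtilde^mu, with xtilde = (r, theta) and x = (x, y)."""
    return [[math.cos(theta), math.sin(theta)],
            [-r * math.sin(theta), r * math.cos(theta)]]


def polar_components(r, theta):
    """(2.74): omega~_mu = (d x^nu / d xtilde^mu) omega_nu."""
    x, y = r * math.cos(theta), r * math.sin(theta)
    omega = cartesian_components(x, y)
    J = jacobian(r, theta)
    return [sum(J[mu][nu] * omega[nu] for nu in range(2)) for mu in range(2)]


r, theta = 2.0, 0.3
omega_polar = polar_components(r, theta)          # expected close to (2r, 0) = (4, 0)

# A vector V = a d/dr + b d/dtheta. Its Cartesian components follow from the
# vector transformation law V^nu = (d x^nu / d xtilde^mu) V~^mu.
a, b = 1.5, -0.7
J = jacobian(r, theta)
V_cart = [a * J[0][nu] + b * J[1][nu] for nu in range(2)]
x, y = r * math.cos(theta), r * math.sin(theta)
pairing_cart = sum(w * v for w, v in zip(cartesian_components(x, y), V_cart))
pairing_polar = a * omega_polar[0] + b * omega_polar[1]
```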

2.3.2 The Lie Derivative Revisited

In Section 2.2.4, we explained how to construct the Lie derivative, which differentiates a vector field $Y$ in the direction of a second vector field $X$. This same idea can be adapted to one-forms.

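Under a map $\phi: M\to N$, we saw that a vector field $X$ on $M$ can be pushed forwards to a vector field $\phi_*X$ on $N$. In contrast, one-forms go the other way: given a one-form $\omega$ on $N$, we can pull this back to a one-form $\phi^*\omega$ on $M$, defined by

$$(\phi^*\omega)(X) = \omega(\phi_*X)$$

If we introduce coordinates $x^\mu$ on $M$ and $y^\alpha$ on $N$ then the components of the pull-back are given by

$$(\phi^*\omega)_\mu = \omega_\alpha\,\frac{\partial y^\alpha}{\partial x^\mu} \tag{2.75}$$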

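We now define the Lie derivative acting on one-forms. Again, we use $X$ to generate a flow $\sigma_t: M\to M$ which, using the pull-back, allows us to compare one-forms at different points. We will denote the cotangent vector $\omega(p)$ as $\omega_p$. The Lie derivative of a one-form $\omega$ is then defined as

$$(\mathcal{L}_X\omega)_p = \lim_{t\to 0}\frac{(\sigma_t^*\omega)_p - \omega_p}{t} \tag{2.76}$$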

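Note that we pull back with the map $\sigma_t$. This is to be contrasted with (2.68), where we pushed forward the tangent vector with the map $\sigma_{-t}$ and, as we now show, this difference in minus sign manifests itself in the expression for the Lie derivative. The infinitesimal map $\sigma_t$ acts on coordinates as $x^\mu\to x^\mu + tX^\mu + \ldots$ so, from (2.75), the pull-back of a basis one-form $dx^\mu$ is

$$\sigma_t^*\,dx^\mu = \left(\delta^\mu_\nu + t\,\frac{\partial X^\mu}{\partial x^\nu} + \ldots\right)dx^\nu$$

Acting on the coordinate basis, we then have

$$\mathcal{L}_X(dx^\mu) = \frac{\partial X^\mu}{\partial x^\nu}\,dx^\nu$$

which indeed differs by a minus sign from the corresponding result (2.69) for tangent vectors. Acting on a general one-form $\omega = \omega_\mu dx^\mu$, the Lie derivative is

$$\mathcal{L}_X\omega = (\mathcal{L}_X\omega_\mu)\,dx^\mu + \omega_\nu\,\mathcal{L}_X(dx^\nu) = \left(X^\nu\partial_\nu\omega_\mu + \omega_\nu\,\partial_\mu X^\nu\right)dx^\mu \tag{2.77}$$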
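The definition (2.76) and the component formula (2.77) can be compared directly. This sketch assumes the example field $X = -y\,\partial_x + x\,\partial_y$, whose flow $\sigma_t$ is an exact rotation by angle $t$, and the example one-form $\omega = xy\,dx + x^2\,dy$. It pulls $\omega$ back along the flow with (2.75), takes a symmetric difference in $t$, and compares the result with (2.77) evaluated by finite differences. Working (2.77) out by hand for this example gives $\mathcal{L}_X\omega = (2x^2 - y^2)\,dx - 3xy\,dy$. The helper names and step sizes are hypothetical choices.

```python
import math

# Sketch only: hypothetical helpers; X generates rotations, so its flow is exact.


def omega(p):
    """Example one-form omega = xy dx + x^2 dy."""
    x, y = p
    return [x * y, x ** 2]


def X(p):
    """Example vector field X = -y d_x + x d_y."""
    x, y = p
    return [-y, x]


def flow(p, t):
    """The flow sigma_t of X: rotation of the point p by the angle t."""
    x, y = p
    c, s = math.cos(t), math.sin(t)
    return [c * x - s * y, s * x + c * y]


def flow_jacobian(t):
    """d sigma_t^alpha / d x^mu; for a rotation it is the same at every point."""
    c, s = math.cos(t), math.sin(t)
    return [[c, -s], [s, c]]


def pullback(p, t):
    """(2.75): (sigma_t^* omega)_mu = omega_alpha(sigma_t(p)) d sigma_t^alpha / d x^mu."""
    w, J = omega(flow(p, t)), flow_jacobian(t)
    return [sum(w[a] * J[a][mu] for a in range(2)) for mu in range(2)]


def lie_from_flow(p, t=1e-4):
    """Symmetric difference in t approximating the limit in (2.76)."""
    plus, minus = pullback(p, t), pullback(p, -t)
    return [(a - b) / (2 * t) for a, b in zip(plus, minus)]


def lie_from_formula(p, h=1e-5):
    """(2.77): (L_X omega)_mu = X^nu d_nu omega_mu + omega_nu d_mu X^nu."""
    def d(F, nu):
        pp, pm = list(p), list(p)
        pp[nu] += h
        pm[nu] -= h
        return [(a - b) / (2 * h) for a, b in zip(F(pp), F(pm))]
    Xp, wp = X(p), omega(p)
    dw = [d(omega, nu) for nu in range(2)]   # dw[nu][mu] = d_nu omega_mu
    dX = [d(X, mu) for mu in range(2)]       # dX[mu][nu] = d_mu X^nu
    return [sum(Xp[nu] * dw[nu][mu] + wp[nu] * dX[mu][nu] for nu in range(2))
            for mu in range(2)]


p = [0.7, -0.4]
via_flow = lie_from_flow(p)
via_formula = lie_from_formula(p)
expected = [2 * p[0] ** 2 - p[1] ** 2, -3 * p[0] * p[1]]   # (2.77) by hand
```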

We’ll return to discuss one-forms (and other forms) more in Section 2.4.

2.3.3 Tensors and Tensor Fields

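A tensor of rank $(r,s)$ at a point $p\in M$ is defined to be a multi-linear map

$$T: \underbrace{T^*_p(M)\times\ldots\times T^*_p(M)}_{r}\times\underbrace{T_p(M)\times\ldots\times T_p(M)}_{s}\to\mathbb{R}$$

Such a tensor is said to have total rank $r+s$.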

We’ve seen some examples already. A cotangent vector in $T^*_p(M)$ is a tensor of type $(0,1)$, while a tangent vector in $T_p(M)$ is a tensor of type $(1,0)$ (using the fact that $T_p(M) = T^{**}_p(M)$).

As before, we define a tensor field to be a smooth assignment of an $(r,s)$ tensor to every point $p\in M$.

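Given a basis $\{e_\mu\}$ for vector fields and a dual basis $\{f^\mu\}$ for one-forms, the components of the tensor are defined to be

$$T^{\mu_1\ldots\mu_r}{}_{\nu_1\ldots\nu_s} = T(f^{\mu_1},\ldots,f^{\mu_r},e_{\nu_1},\ldots,e_{\nu_s})$$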

Note that we deliberately write the string of lower indices after the upper indices. In some sense this is unnecessary, and we don’t lose any information by writing $T^{\mu_1\ldots\mu_r}_{\nu_1\ldots\nu_s}$. Nonetheless, we’ll see later that it’s a useful habit to get into.

On a manifold of dimension $n$, there are $n^{r+s}$ such components. For a tensor field, each of these is a function over $M$.

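As an example, consider a rank $(2,1)$ tensor. This takes two one-forms, say $\omega$ and $\eta$, together with a vector field $X$, and spits out a real number. In a given basis, this number is

$$T(\omega,\eta,X) = T(\omega_\mu f^\mu,\eta_\nu f^\nu,X^\rho e_\rho) = \omega_\mu\eta_\nu X^\rho\,T(f^\mu,f^\nu,e_\rho) = T^{\mu\nu}{}_\rho\,\omega_\mu\eta_\nu X^\rho$$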

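Every manifold comes equipped with a natural $(1,1)$ tensor called $\delta$. This takes a one-form $\omega$ and a vector field $X$ and spits out the real number

$$\delta(\omega,X) = \omega(X)\quad\Rightarrow\quad\delta(f^\mu,e_\nu) = f^\mu(e_\nu) = \delta^\mu_\nu$$

which is simply the Kronecker delta.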

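As with vector fields and one-forms, we can ask how the components of a tensor transform. We will work more generally than before. Consider two bases for the vector fields, $\{e_\mu\}$ and $\{\tilde e_\mu\}$, not necessarily coordinate bases, related by

$$\tilde e_\nu = A^\mu{}_\nu\,e_\mu$$

for some invertible matrix $A$. The respective dual bases, $\{f^\mu\}$ and $\{\tilde f^\mu\}$, are then related by

$$\tilde f^\rho = B^\rho{}_\sigma\,f^\sigma$$

such that

$$\tilde f^\rho(\tilde e_\nu) = A^\mu{}_\nu B^\rho{}_\sigma\,f^\sigma(e_\mu) = A^\mu{}_\nu B^\rho{}_\mu = \delta^\rho_\nu\quad\Rightarrow\quad B = A^{-1}$$

The lower components of a tensor then transform by multiplying by $A$, and the upper components by multiplying by $B = A^{-1}$. So, for example, a rank $(1,2)$ tensor transforms as

$$\tilde T^\mu{}_{\rho\nu} = B^\mu{}_\sigma\,A^\tau{}_\rho\,A^\lambda{}_\nu\,T^\sigma{}_{\tau\lambda} \tag{2.78}$$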

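When we change between coordinate bases, we have

$$A^\mu{}_\nu = \frac{\partial x^\mu}{\partial\tilde x^\nu}\quad\text{and}\quad B^\mu{}_\nu = (A^{-1})^\mu{}_\nu = \frac{\partial\tilde x^\mu}{\partial x^\nu}$$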

You can check that this coincides with our previous results (2.61) and (2.74).

Operations on Tensor Fields

There are a number of operations that we can do on tensor fields to generate further tensors.

First, we can add and subtract tensor fields, or multiply them by functions. This is the statement that the set of $(r,s)$ tensors at a point forms a vector space.

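Next, there is a way to multiply tensors together to give a tensor of a different type. Given a tensor $S$ of rank $(p,q)$ and a tensor $T$ of rank $(r,s)$, we can form the tensor product, $S\otimes T$, which is a new tensor of rank $(p+r,q+s)$, defined by

$$S\otimes T(\omega_1,\ldots,\omega_p,\eta_1,\ldots,\eta_r,X_1,\ldots,X_q,Y_1,\ldots,Y_s) = S(\omega_1,\ldots,\omega_p,X_1,\ldots,X_q)\,T(\eta_1,\ldots,\eta_r,Y_1,\ldots,Y_s)$$

In terms of components, this reads

$$(S\otimes T)^{\mu_1\ldots\mu_p\nu_1\ldots\nu_r}{}_{\rho_1\ldots\rho_q\sigma_1\ldots\sigma_s} = S^{\mu_1\ldots\mu_p}{}_{\rho_1\ldots\rho_q}\,T^{\nu_1\ldots\nu_r}{}_{\sigma_1\ldots\sigma_s} \tag{2.79}$$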

Given an $(r,s)$ tensor $T$, we can also construct a tensor of lower rank $(r-1,s-1)$ by contraction. To do this, simply replace one of the $T^*_p(M)$ entries with a basis vector $f^\mu$, and the corresponding $T_p(M)$ entry with the dual vector $e_\mu$, and then sum over $\mu = 1,\ldots,n$. So, for example, given a rank $(2,1)$ tensor $T$ we can construct a rank $(1,0)$ tensor $S$ by

$$S(\omega) = T(\omega,f^\mu,e_\mu)$$

Alternatively, we could construct a (typically) different tensor by contracting the other argument, $S'(\omega) = T(f^\mu,\omega,e_\mu)$. Written in terms of components, contraction simply means that we put an upper index equal to a lower index and sum over them,

$$S^\mu = T^{\mu\nu}{}_\nu\quad\text{and}\quad S'^\mu = T^{\nu\mu}{}_\nu$$
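To see that contraction is compatible with the transformation law (2.78), the sketch below assumes a $(1,2)$ tensor in two dimensions with made-up components and an arbitrary invertible matrix $A$. It contracts the upper index with the first lower index, $S_\nu = T^\mu{}_{\mu\nu}$. It then checks that contracting after transforming agrees with transforming $S$ as a $(0,1)$ tensor, $\tilde S_\nu = A^\lambda{}_\nu S_\lambda$. This works because the factors $B^\mu{}_\sigma A^\tau{}_\mu$ combine into $\delta^\tau_\sigma$. The helper names are hypothetical.

```python
import itertools

# Sketch only: hypothetical helpers; index conventions A[mu][nu] = A^mu_nu.


def inverse_2x2(m):
    """Inverse of a 2x2 matrix stored as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


n = 2
A = [[2.0, 1.0],
     [0.5, 3.0]]          # an arbitrary invertible example
B = inverse_2x2(A)        # B = A^{-1}

# Made-up components of a (1,2) tensor: T[s][t][l] = T^s_{t l}.
T = [[[1.0, -2.0], [0.5, 4.0]],
     [[3.0, 0.0], [-1.0, 2.5]]]


def transform(T):
    """(2.78): T~^mu_{rho nu} = B^mu_sigma A^tau_rho A^lambda_nu T^sigma_{tau lambda}."""
    out = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for mu, rho, nu in itertools.product(range(n), repeat=3):
        out[mu][rho][nu] = sum(B[mu][s] * A[t][rho] * A[l][nu] * T[s][t][l]
                               for s, t, l in itertools.product(range(n), repeat=3))
    return out


def contract(T):
    """S_nu = T^mu_{mu nu}: set the upper index equal to the first lower index and sum."""
    return [sum(T[mu][mu][nu] for mu in range(n)) for nu in range(n)]


S = contract(T)
contract_after_transform = contract(transform(T))
# A (0,1) tensor picks up one factor of A: S~_nu = A^lambda_nu S_lambda.
transform_after_contract = [sum(A[l][nu] * S[l] for l in range(n)) for nu in range(n)]
```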

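Our next operation is symmetrisation and anti-symmetrisation. For example, given a $(0,2)$ tensor $T$ we decompose it into two tensors, in which the arguments are either symmetrised or anti-symmetrised,

$$S(X,Y) = \frac{1}{2}\left(T(X,Y) + T(Y,X)\right)\quad\text{and}\quad A(X,Y) = \frac{1}{2}\left(T(X,Y) - T(Y,X)\right)$$

In index notation, this becomes

$$S_{\mu\nu} = \frac{1}{2}\left(T_{\mu\nu} + T_{\nu\mu}\right)\quad\text{and}\quad A_{\mu\nu} = \frac{1}{2}\left(T_{\mu\nu} - T_{\nu\mu}\right)$$

which is just like taking the symmetric and anti-symmetric part of a matrix. We will work with these operations frequently enough to justify introducing some new notation. We define

$$T_{(\mu\nu)} = \frac{1}{2}\left(T_{\mu\nu} + T_{\nu\mu}\right)\quad\text{and}\quad T_{[\mu\nu]} = \frac{1}{2}\left(T_{\mu\nu} - T_{\nu\mu}\right)$$

These operations generalise to other tensors. For example,

$$T^{(\mu\nu)}{}_\rho = \frac{1}{2}\left(T^{\mu\nu}{}_\rho + T^{\nu\mu}{}_\rho\right)$$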

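We can also symmetrise or anti-symmetrise over multiple indices, provided that these indices are either all up or all down. If we (anti)-symmetrise over $p$ objects, then we divide by $p!$, which is the number of possible permutations. This normalisation ensures that if we start with a tensor which is already, say, symmetric then further symmetrising doesn’t affect it. In the case of anti-symmetry, we weight each term with the sign of the permutation. So, for example,

$$T_{(\mu\nu\rho)} = \frac{1}{3!}\left(T_{\mu\nu\rho} + T_{\nu\rho\mu} + T_{\rho\mu\nu} + T_{\nu\mu\rho} + T_{\rho\nu\mu} + T_{\mu\rho\nu}\right)$$

and

$$T_{[\mu\nu\rho]} = \frac{1}{3!}\left(T_{\mu\nu\rho} + T_{\nu\rho\mu} + T_{\rho\mu\nu} - T_{\nu\mu\rho} - T_{\rho\nu\mu} - T_{\mu\rho\nu}\right)$$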

There will be times when, annoyingly, we will wish to symmetrise (or anti-symmetrise) over indices which are not adjacent. We introduce vertical bars to exclude certain indices from the symmetry procedure. So, for example,

$$T_{[\mu|\nu|\rho]} = \frac{1}{2}\left(T_{\mu\nu\rho} - T_{\rho\nu\mu}\right)$$
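The (anti)-symmetrisation rules, with the $1/p!$ normalisation and the sign of each permutation, translate directly into code. In this sketch, a tensor with $k$ lower indices in $n$ dimensions is assumed to be stored as a Python dictionary keyed by index tuples, and its components are made up. The checks confirm the properties claimed above. The anti-symmetrised tensor changes sign when two indices are swapped and vanishes when two indices coincide. Both operations leave an already (anti)-symmetric tensor unchanged. The helper names are hypothetical.

```python
import itertools
import math

# Sketch only: hypothetical helpers; tensors are dicts keyed by index tuples.


def perm_sign(perm):
    """Sign of a permutation of 0..k-1, found by sorting it with transpositions."""
    sign, perm = 1, list(perm)
    for i in range(len(perm)):
        while perm[i] != i:
            j = perm[i]
            perm[i], perm[j] = perm[j], perm[i]
            sign = -sign
    return sign


def symmetrise(T, n, k, anti=False):
    """Return T_(mu1...muk), or T_[mu1...muk] if anti is True.

    Each of the k! permutations is weighted by 1 (or by its sign) and the
    total is divided by k!.
    """
    out = {}
    for idx in itertools.product(range(n), repeat=k):
        total = 0.0
        for perm in itertools.permutations(range(k)):
            weight = perm_sign(perm) if anti else 1
            total += weight * T[tuple(idx[i] for i in perm)]
        out[idx] = total / math.factorial(k)
    return out


n, k = 3, 3
# Arbitrary made-up components with no particular symmetry.
T = {idx: float((idx[0] + 1) * (idx[1] + 2) ** 2 - 3 * idx[2])
     for idx in itertools.product(range(n), repeat=k)}

A = symmetrise(T, n, k, anti=True)
S = symmetrise(T, n, k)
A_again = symmetrise(A, n, k, anti=True)   # should reproduce A
S_again = symmetrise(S, n, k)              # should reproduce S
```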

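Finally, given a smooth tensor field $T$ of any rank, we can always take the Lie derivative with respect to a vector field $X$. As we’ve seen previously, under a map $\phi: M\to N$, vector fields are pushed forwards and one-forms are pulled back. In general, this leaves a tensor of mixed type unsure where to go. However, if $\phi$ is a diffeomorphism then we also have $\phi^{-1}: N\to M$, and this allows us to define the push-forward of a tensor $T$ from $M$ to $N$. This acts on one-forms $\omega\in\Lambda^1(N)$ and vector fields $X\in\mathfrak{X}(N)$ and is given by

$$(\phi_*T)(\omega_1,\ldots,\omega_r,X_1,\ldots,X_s) = T\left(\phi^*\omega_1,\ldots,\phi^*\omega_r,(\phi^{-1})_*X_1,\ldots,(\phi^{-1})_*X_s\right)$$

Here $\phi^*\omega$ are the pull-backs of $\omega$ from $N$ to $M$, while $(\phi^{-1})_*X$ are the push-forwards of $X$ from $N$ to $M$.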

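The Lie derivative of a tensor $T$ along $X$ is then defined as

$$(\mathcal{L}_X T)_p = \lim_{t\to 0}\frac{\left((\sigma_{-t})_*T\right)_p - T_p}{t}$$

where $\sigma_t$ is the flow generated by $X$. This coincides with our earlier definitions for vector fields in (2.68) and for one-forms in (2.76). (The difference in the $\sigma_{-t}$ vs $\sigma_t$ minus sign in (2.68) and (2.76) is now hiding in the inverse push-forward $(\phi^{-1})_*$ that appears in the definition of $\phi_*T$.)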

2.4 Differential Forms

Some tensors are more interesting than others. A particularly interesting class are totally anti-symmetric $(0,p)$ tensor fields. These are called $p$-forms. The set of all $p$-forms over a manifold $M$ is denoted $\Lambda^p(M)$.

We’ve met some forms before. A 0-form is simply a function. Meanwhile, as we saw previously, a 1-form is another name for a covector. The anti-symmetry means that we can’t have any form of degree $p > n = \dim(M)$. A $p$-form has $\binom{n}{p} = \frac{n!}{p!(n-p)!}$ different components. Forms in $\Lambda^n(M)$ are called top forms.

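Given a $p$-form $\omega$ and a $q$-form $\eta$, we can take the tensor product (2.79) to construct a $(0,p+q)$ tensor. If we anti-symmetrise this, we then get a $(p+q)$-form. This construction is called the wedge product, and is defined by

$$(\omega\wedge\eta)_{\mu_1\ldots\mu_p\nu_1\ldots\nu_q} = \frac{(p+q)!}{p!\,q!}\,\omega_{[\mu_1\ldots\mu_p}\eta_{\nu_1\ldots\nu_q]}$$

where the $[\ldots]$ in the subscript tells us to anti-symmetrise over all indices. For example, given $\omega,\eta\in\Lambda^1(M)$, we can construct a 2-form

$$(\omega\wedge\eta)_{\mu\nu} = \omega_\mu\eta_\nu - \omega_\nu\eta_\mu$$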

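For one-forms, the anti-symmetry ensures that $\omega\wedge\omega = 0$. In general, if $\omega\in\Lambda^p(M)$ and $\eta\in\Lambda^q(M)$, then one can show that

$$\omega\wedge\eta = (-1)^{pq}\,\eta\wedge\omega$$

This means that $\omega\wedge\omega = 0$ for any form of odd degree. We can, however, wedge even degree forms with themselves. (Which you know already for 0-forms, where the wedge product is just multiplication of functions.)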

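As a more specific example, consider $M = \mathbb{R}^3$ and $\omega = \omega_1 dx^1 + \omega_2 dx^2 + \omega_3 dx^3$ and $\eta = \eta_1 dx^1 + \eta_2 dx^2 + \eta_3 dx^3$. We then have

$$\omega\wedge\eta = (\omega_1\eta_2 - \omega_2\eta_1)\,dx^1\wedge dx^2 + (\omega_2\eta_3 - \omega_3\eta_2)\,dx^2\wedge dx^3 + (\omega_3\eta_1 - \omega_1\eta_3)\,dx^3\wedge dx^1$$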

Notice that the components that arise are precisely those of the cross-product acting on vectors in $\mathbb{R}^3$. This is no coincidence: what we usually think of as the cross-product between vectors is really a wedge product between forms. We’ll have to wait until Section 3 to understand how to map from one to the other.
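The relation between the wedge product and the cross-product can be checked in a few lines. This sketch assumes one-forms on $\mathbb{R}^3$ are represented by lists of their components $\omega_\mu$, and uses the component formula $(\omega\wedge\eta)_{\mu\nu} = \omega_\mu\eta_\nu - \omega_\nu\eta_\mu$. The numerical components and helper names are hypothetical. Reading off the coefficients of $dx^2\wedge dx^3$, $dx^3\wedge dx^1$ and $dx^1\wedge dx^2$ reproduces the cross-product exactly.

```python
# Sketch only: hypothetical helpers; one-forms are lists of components.

def wedge_1forms(w, e):
    """Components (w ^ e)_{mu nu} = w_mu e_nu - w_nu e_mu of two one-forms."""
    n = len(w)
    return [[w[m] * e[v] - w[v] * e[m] for v in range(n)] for m in range(n)]


def cross(a, b):
    """Ordinary cross product of two vectors in R^3."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


w = [1.0, -2.0, 0.5]    # omega = dx^1 - 2 dx^2 + 0.5 dx^3 (example values)
e = [3.0, 1.0, -1.0]    # eta   = 3 dx^1 + dx^2 - dx^3
W = wedge_1forms(w, e)

# Coefficients of dx^2^dx^3, dx^3^dx^1, dx^1^dx^2 (Python indices start at 0).
from_wedge = [W[1][2], W[2][0], W[0][1]]
```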

It can also be shown that the wedge product is associative, meaning

$$\omega\wedge(\eta\wedge\lambda) = (\omega\wedge\eta)\wedge\lambda$$

We can then drop the brackets in any such product.

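Given a basis $\{f^\mu\}$ of $\Lambda^1(M)$, a basis of $\Lambda^p(M)$ can be constructed by wedge products $\{f^{\mu_1}\wedge\ldots\wedge f^{\mu_p}\}$. We will usually work with the coordinate basis $\{dx^\mu\}$. This means that any $p$-form $\omega$ can be written locally as

$$\omega = \frac{1}{p!}\,\omega_{\mu_1\ldots\mu_p}\,dx^{\mu_1}\wedge\ldots\wedge dx^{\mu_p} \tag{2.80}$$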

Although locally any -form can be written as (2.80), this may not be true globally. This, and related issues, will become of some interest in Section 2.4.3.

2.4.1 The Exterior Derivative

We learned in Section 2.3.1 how to construct a one-form $df$ from a function $f$. In a coordinate basis, this one-form has components (2.72),

$$df = \frac{\partial f}{\partial x^\mu}\,dx^\mu$$

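We can extend this definition to higher forms. The exterior derivative is a map

$$d: \Lambda^p(M)\to\Lambda^{p+1}(M)$$

In local coordinates (2.80), the exterior derivative acts as

$$d\omega = \frac{1}{p!}\,\frac{\partial\omega_{\mu_1\ldots\mu_p}}{\partial x^\nu}\,dx^\nu\wedge dx^{\mu_1}\wedge\ldots\wedge dx^{\mu_p} \tag{2.81}$$

Equivalently we have

$$(d\omega)_{\mu_1\ldots\mu_{p+1}} = (p+1)\,\partial_{[\mu_1}\omega_{\mu_2\ldots\mu_{p+1}]} \tag{2.82}$$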

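Importantly, if we subsequently act with the exterior derivative again, we get

$$d(d\omega) = \frac{1}{p!}\,\frac{\partial^2\omega_{\mu_1\ldots\mu_p}}{\partial x^\rho\,\partial x^\nu}\,dx^\rho\wedge dx^\nu\wedge dx^{\mu_1}\wedge\ldots\wedge dx^{\mu_p} = 0$$

because the second derivatives are symmetric in $\rho$ and $\nu$, while $dx^\rho\wedge dx^\nu$ is anti-symmetric, so the sum vanishes. This holds true for any $p$-form, a fact which is sometimes expressed as

$$d^2 = 0$$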

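It can be shown that the exterior derivative satisfies a number of further properties,

• $d(\omega\wedge\eta) = d\omega\wedge\eta + (-1)^p\,\omega\wedge d\eta$, where $\omega\in\Lambda^p(M)$.
• $d(\phi^*\omega) = \phi^*(d\omega)$, where $\phi^*$ is the pull-back associated to the map between manifolds, $\phi: M\to N$.
• Because the exterior derivative commutes with the pull-back, it also commutes with the Lie derivative. This ensures that we have $d(\mathcal{L}_X\omega) = \mathcal{L}_X(d\omega)$.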

A $p$-form $\omega$ is said to be closed if $d\omega = 0$ everywhere. It is exact if $\omega = d\eta$ everywhere for some $\eta$. Because $d^2 = 0$, an exact form is necessarily closed. The question of when the converse is true is interesting: we’ll discuss this more in Section 2.4.3.

Examples

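Suppose that we have a one-form $\omega = \omega_\mu\,dx^\mu$. The exterior derivative gives a 2-form

$$d\omega = \partial_\mu\omega_\nu\,dx^\mu\wedge dx^\nu = \frac{1}{2}\left(\partial_\mu\omega_\nu - \partial_\nu\omega_\mu\right)dx^\mu\wedge dx^\nu$$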

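As a specific example of this example, suppose that we take the one-form to live on $\mathbb{R}^3$, with

$$\omega = \omega_1(x)\,dx^1 + \omega_2(x)\,dx^2 + \omega_3(x)\,dx^3$$

Since this is a field, each of the components $\omega_\mu$ is a function of $x^1$, $x^2$ and $x^3$. The exterior derivative is given by

$$\begin{aligned} d\omega &= \frac{\partial\omega_1}{\partial x^2}dx^2\wedge dx^1 + \frac{\partial\omega_1}{\partial x^3}dx^3\wedge dx^1 + \frac{\partial\omega_2}{\partial x^1}dx^1\wedge dx^2 + \frac{\partial\omega_2}{\partial x^3}dx^3\wedge dx^2 + \frac{\partial\omega_3}{\partial x^1}dx^1\wedge dx^3 + \frac{\partial\omega_3}{\partial x^2}dx^2\wedge dx^3 \\ &= \left(\frac{\partial\omega_2}{\partial x^1} - \frac{\partial\omega_1}{\partial x^2}\right)dx^1\wedge dx^2 + \left(\frac{\partial\omega_3}{\partial x^2} - \frac{\partial\omega_2}{\partial x^3}\right)dx^2\wedge dx^3 + \left(\frac{\partial\omega_1}{\partial x^3} - \frac{\partial\omega_3}{\partial x^1}\right)dx^3\wedge dx^1 \end{aligned}$$

Notice that there’s no term like $\partial\omega_1/\partial x^1$ because this would come with a $dx^1\wedge dx^1 = 0$.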

In the olden days (before this course), we used to write vector fields in $\mathbb{R}^3$ as $\boldsymbol{\omega} = (\omega_1,\omega_2,\omega_3)$ and compute the curl $\nabla\times\boldsymbol{\omega}$. But the components of the curl are precisely the components that appear in $d\omega$. In fact, our “vector” $\boldsymbol{\omega}$ was really a one-form and the curl turned it into a two-form. It’s a happy fact that in $\mathbb{R}^3$, vectors, one-forms and two-forms all have three components, which allowed us to conflate them in our earlier courses. (In fact, there is a natural map between them that we will meet in Section 3.)

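Suppose instead that we start with a 2-form in $\mathbb{R}^3$, which we write as

$$\omega = \omega_1(x)\,dx^2\wedge dx^3 + \omega_2(x)\,dx^3\wedge dx^1 + \omega_3(x)\,dx^1\wedge dx^2$$

Taking the exterior derivative now gives

$$\begin{aligned} d\omega &= \frac{\partial\omega_1}{\partial x^1}dx^1\wedge dx^2\wedge dx^3 + \frac{\partial\omega_2}{\partial x^2}dx^2\wedge dx^3\wedge dx^1 + \frac{\partial\omega_3}{\partial x^3}dx^3\wedge dx^1\wedge dx^2 \\ &= \left(\frac{\partial\omega_1}{\partial x^1} + \frac{\partial\omega_2}{\partial x^2} + \frac{\partial\omega_3}{\partial x^3}\right)dx^1\wedge dx^2\wedge dx^3 \end{aligned} \tag{2.84}$$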

This time there is just a single component, but again it’s something familiar. Had we written the original three components of the two-form in old school vector notation $\boldsymbol{\omega} = (\omega_1,\omega_2,\omega_3)$, then the single component of $d\omega$ is what we previously called $\nabla\cdot\boldsymbol{\omega}$.
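The two computations above can be reproduced numerically. This sketch assumes forms on $\mathbb{R}^3$ are given as Python functions returning their components, with a 2-form stored as its coefficients of $dx^2\wedge dx^3$, $dx^3\wedge dx^1$ and $dx^1\wedge dx^2$. Applying $d$ to a one-form gives the curl of its components. Applying $d$ again gives the divergence of that curl, and the result vanishes, as $d^2 = 0$ requires. The example one-form, the step size and the helper names are hypothetical. For polynomials of degree at most two in each variable, the central differences used here are exact up to rounding.

```python
# Sketch only: hypothetical helpers; central differences on R^3.

def partial(f, p, i, h=1e-3):
    """Central-difference approximation to d_i f at the point p."""
    pp, pm = list(p), list(p)
    pp[i] += h
    pm[i] -= h
    return (f(pp) - f(pm)) / (2 * h)


def d_one_form(w):
    """d of a one-form on R^3, returned as the 2-form components
    [(dw)_23, (dw)_31, (dw)_12], using (dw)_{mn} = d_m w_n - d_n w_m."""
    def component(m, n):
        return lambda p: (partial(lambda q: w(q)[n], p, m)
                          - partial(lambda q: w(q)[m], p, n))
    c23, c31, c12 = component(1, 2), component(2, 0), component(0, 1)
    return lambda p: [c23(p), c31(p), c12(p)]


def d_two_form(w):
    """d of a 2-form with components [w1, w2, w3] in the basis
    dx2^dx3, dx3^dx1, dx1^dx2; returns the single component as in (2.84)."""
    return lambda p: sum(partial(lambda q, i=i: w(q)[i], p, i) for i in range(3))


# Example one-form omega = yz dx^1 + x^2 z dx^2 + xyz dx^3, with (x, y, z) = (x^1, x^2, x^3).
omega = lambda p: [p[1] * p[2], p[0] ** 2 * p[2], p[0] * p[1] * p[2]]
# Its curl, worked out by hand.
curl_by_hand = lambda p: [p[0] * p[2] - p[0] ** 2,
                          p[1] - p[1] * p[2],
                          2 * p[0] * p[2] - p[2]]

p = [0.4, -1.1, 0.9]
d_omega = d_one_form(omega)      # a 2-form: its components should match the curl
dd_omega = d_two_form(d_omega)   # d(d omega): the divergence of the curl
```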

The Lie Derivative Yet Again

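There is yet another operation that we can construct on $p$-forms. Given a vector field $X\in\mathfrak{X}(M)$, we can construct the interior product, a map $\iota_X: \Lambda^p(M)\to\Lambda^{p-1}(M)$. If $\omega\in\Lambda^p(M)$, we define a $(p-1)$-form $\iota_X\omega$ by

$$\iota_X\omega(Y_1,\ldots,Y_{p-1}) = \omega(X,Y_1,\ldots,Y_{p-1}) \tag{2.85}$$

In other words, we just put $X$ in the first argument of $\omega$. Acting on functions $f$, we simply define $\iota_X f = 0$.

The anti-symmetry of forms means that $\iota_X\iota_Y = -\iota_Y\iota_X$. Moreover, you can check that

$$\iota_X(\omega\wedge\eta) = \iota_X\omega\wedge\eta + (-1)^p\,\omega\wedge\iota_X\eta$$

where $\omega\in\Lambda^p(M)$.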

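Consider a 1-form $\omega$. There are two different ways to act with $\iota_X$ and $d$ to give us back a one-form. These are

$$\iota_X d\omega = \iota_X\,\frac{1}{2}\left(\partial_\mu\omega_\nu - \partial_\nu\omega_\mu\right)dx^\mu\wedge dx^\nu = X^\mu\partial_\mu\omega_\nu\,dx^\nu - X^\nu\partial_\mu\omega_\nu\,dx^\mu$$

and

$$d\iota_X\omega = d(\omega_\mu X^\mu) = X^\mu\partial_\nu\omega_\mu\,dx^\nu + \omega_\mu\partial_\nu X^\mu\,dx^\nu$$

Adding the two together gives

$$(d\iota_X + \iota_X d)\,\omega = \left(X^\mu\partial_\mu\omega_\nu + \omega_\mu\partial_\nu X^\mu\right)dx^\nu$$

But this is exactly the same expression we saw previously when computing the Lie derivative (2.77) of a one-form. We learn that

$$\mathcal{L}_X\omega = (d\iota_X + \iota_X d)\,\omega \tag{2.86}$$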

This expression is sometimes referred to as Cartan’s magic formula. A similar calculation shows that (2.86) holds for any $p$-form $\omega$.
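Cartan’s formula can be checked numerically on the same kind of example used for (2.77). The sketch assumes the example one-form $\omega = xy\,dx + x^2\,dy$ and vector field $X = -y\,\partial_x + x\,\partial_y$ on $\mathbb{R}^2$. It computes $\iota_X d\omega$ and $d\iota_X\omega$ by central differences and compares their sum with $\mathcal{L}_X\omega = (2x^2 - y^2)\,dx - 3xy\,dy$, which is what (2.77) gives for this example. The helper names and the step size are hypothetical.

```python
# Sketch only: hypothetical helpers; central differences on R^2.

def partial(f, p, i, h=1e-4):
    """Central-difference approximation to d_i f at the point p."""
    pp, pm = list(p), list(p)
    pp[i] += h
    pm[i] -= h
    return (f(pp) - f(pm)) / (2 * h)


omega = lambda p: [p[0] * p[1], p[0] ** 2]   # omega = xy dx + x^2 dy (example)
X = lambda p: [-p[1], p[0]]                  # X = -y d_x + x d_y (example)


def interior_of_d(p):
    """(i_X d omega)_nu = X^mu (d omega)_{mu nu} = X^mu (d_mu omega_nu - d_nu omega_mu)."""
    Xp = X(p)
    return [sum(Xp[m] * (partial(lambda q: omega(q)[n], p, m)
                         - partial(lambda q: omega(q)[m], p, n))
                for m in range(2))
            for n in range(2)]


def d_of_interior(p):
    """d(i_X omega): i_X omega = omega_mu X^mu is a function, so take its gradient."""
    contraction = lambda q: sum(a * b for a, b in zip(omega(q), X(q)))
    return [partial(contraction, p, n) for n in range(2)]


p = [0.7, -0.4]
cartan = [a + b for a, b in zip(d_of_interior(p), interior_of_d(p))]
expected = [2 * p[0] ** 2 - p[1] ** 2, -3 * p[0] * p[1]]   # (2.77) for this example
```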

2.4.2 Forms You Know and Love

There are a number of examples of differential forms that you’ve met already, but likely never called them by name.

The Electromagnetic Field

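The electromagnetic gauge field $A_\mu = (-\phi,\mathbf{A})$ should really be thought of as the components of a one-form on spacetime $\mathbb{R}^{1,3}$. (Here I’ve set $c = 1$.) We write

$$A = A_\mu(x)\,dx^\mu = -\phi\,dt + A_i\,dx^i$$

Taking the exterior derivative yields a 2-form $F = dA$, given by

$$F = \frac{1}{2}F_{\mu\nu}\,dx^\mu\wedge dx^\nu = \frac{1}{2}\left(\partial_\mu A_\nu - \partial_\nu A_\mu\right)dx^\mu\wedge dx^\nu$$

But this is precisely the field strength $F_{\mu\nu}$ that we met in our lectures on Electromagnetism. The components are the electric and magnetic fields, arranged as

$$F_{\mu\nu} = \begin{pmatrix} 0 & -E_1 & -E_2 & -E_3 \\ E_1 & 0 & B_3 & -B_2 \\ E_2 & -B_3 & 0 & B_1 \\ E_3 & B_2 & -B_1 & 0 \end{pmatrix} \tag{2.87}$$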

By construction, we also have $dF = d^2A = 0$. In this context, this is sometimes called the Bianchi identity; it yields two of the four Maxwell equations. In old school vector calculus notation, these are $\nabla\cdot\mathbf{B} = 0$ and $\partial\mathbf{B}/\partial t + \nabla\times\mathbf{E} = 0$. We need a little more structure to get the other two, as we will see later in this chapter.

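The gauge field is not unique. Given any function $\alpha$, we can always shift it by a gauge transformation

$$A\to A + d\alpha\quad\Leftrightarrow\quad A_\mu\to A_\mu + \partial_\mu\alpha$$

This leaves the field strength invariant because $F\to F + d(d\alpha) = F$.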
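The arrangement (2.87) and the gauge invariance of $F$ can both be checked numerically. This sketch assumes the conventions used above: $c = 1$, coordinates $x^\mu = (t,x,y,z)$ and $A_\mu = (-\phi,\mathbf{A})$. It uses the example potentials $\phi = xy$ and $\mathbf{A} = (0, tx, 0)$, for which $\mathbf{E} = -\nabla\phi - \partial_t\mathbf{A} = (-y,-2x,0)$ and $\mathbf{B} = \nabla\times\mathbf{A} = (0,0,t)$. The gauge function $\alpha$ is an arbitrary example, and the helper names are hypothetical.

```python
import math

# Sketch only: hypothetical helpers; x^mu = (t, x, y, z), c = 1, A_mu = (-phi, A).


def partial(f, p, i, h=1e-4):
    """Central-difference approximation to d_i f at p; f returns a number."""
    pp, pm = list(p), list(p)
    pp[i] += h
    pm[i] -= h
    return (f(pp) - f(pm)) / (2 * h)


def A_lower(p):
    """Example potentials phi = xy, A = (0, tx, 0), packaged as A_mu = (-phi, A_x, A_y, A_z)."""
    t, x, y, z = p
    return [-x * y, 0.0, t * x, 0.0]


def field_strength(A, p):
    """F_{mu nu} = d_mu A_nu - d_nu A_mu at the point p."""
    return [[partial(lambda q: A(q)[n], p, m) - partial(lambda q: A(q)[m], p, n)
             for n in range(4)] for m in range(4)]


def arranged_as_287(E, B):
    """The matrix (2.87) built from the electric and magnetic fields."""
    E1, E2, E3 = E
    B1, B2, B3 = B
    return [[0.0, -E1, -E2, -E3],
            [E1, 0.0, B3, -B2],
            [E2, -B3, 0.0, B1],
            [E3, B2, -B1, 0.0]]


p = [0.5, 1.0, 2.0, 0.0]          # (t, x, y, z)
t, x, y, z = p
E = [-y, -2 * x, 0.0]             # E = -grad(phi) - dA/dt, worked out by hand
B = [0.0, 0.0, t]                 # B = curl(A), worked out by hand
F = field_strength(A_lower, p)
F_expected = arranged_as_287(E, B)

# Gauge transformation A -> A + d(alpha) for an arbitrary example function alpha.
alpha = lambda q: math.sin(q[0]) * q[1] * q[3] + q[2] ** 2
A_gauged = lambda q: [a + partial(alpha, q, mu) for mu, a in enumerate(A_lower(q))]
F_gauged = field_strength(A_gauged, p)
```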

Phase Space and Hamilton’s Equations

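In classical mechanics, the phase space is a manifold $M$ parameterised by coordinates $(q^i,p_i)$, where $q^i$ are the positions of particles and $p_i$ the momenta. Recall from our lectures on Classical Dynamics that the Hamiltonian $H(q,p)$ is a function on $M$, and Hamilton’s equations are

$$\dot q^i = \frac{\partial H}{\partial p_i}\quad\text{and}\quad\dot p_i = -\frac{\partial H}{\partial q^i} \tag{2.88}$$

Phase space also comes with a structure called a Poisson bracket, defined on a pair of functions $f$ and $g$ as

$$\{f,g\} = \frac{\partial f}{\partial q^i}\frac{\partial g}{\partial p_i} - \frac{\partial f}{\partial p_i}\frac{\partial g}{\partial q^i}$$

Then the time evolution of any function $f$ can be written as

$$\frac{df}{dt} = \{f,H\}$$

which reproduces Hamilton’s equations if we take $f = q^i$ or $f = p_i$.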

Underlying this story is the mathematical structure of forms. The key idea is that we have a manifold $M$ and a function $H$ on $M$. We want a machinery that turns the function $H$ into a vector field $X_H$. Particles then follow trajectories in phase space that are integral curves generated by $X_H$.

To achieve this, we introduce a symplectic two-form $\omega$ on an even-dimensional manifold $M$. This two-form must be closed, $d\omega = 0$, and non-degenerate, which means that the top form $\omega\wedge\ldots\wedge\omega\neq 0$ (with $n$ factors of $\omega$ on a $2n$-dimensional manifold). We’ll see why we need these requirements as we go along. A manifold equipped with a symplectic two-form is called a symplectic manifold.

Any 2-form provides a map $\omega: T_p(M)\to T^*_p(M)$. Given a vector field $X\in\mathfrak{X}(M)$, we can simply take the interior product with $\omega$ to get a one-form $\iota_X\omega$. However, we want to go the other way: given a function $H$, we can always construct a one-form $dH$, and we’d like to exchange this for a vector field. We can do this if the map $\omega: T_p(M)\to T^*_p(M)$ is actually an isomorphism, so the inverse exists. This turns out to be true provided that $\omega$ is non-degenerate. In this case, we can define the vector field $X_H$ by solving the equation

$$\iota_{X_H}\omega = -dH \tag{2.89}$$

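If we introduce coordinates $x^\mu$ on the manifold, then the component form of this equation is

$$X_H^\mu\,\omega_{\mu\nu} = -\partial_\nu H$$

We denote the inverse as $\omega^{\mu\nu}$ such that $\omega^{\mu\nu}\omega_{\nu\rho} = \delta^\mu_\rho$. The components of the vector field are then

$$X_H^\mu = -\omega^{\nu\mu}\,\partial_\nu H = \omega^{\mu\nu}\,\partial_\nu H$$

The integral curves generated by $X_H$ obey the differential equation (2.64)

$$\frac{dx^\mu}{dt} = X_H^\mu = \omega^{\mu\nu}\,\partial_\nu H$$

These are the general form of Hamilton’s equations. They reduce to our earlier form (2.88) if we write $x^\mu = (q^i,p_i)$ and choose the symplectic form to have the block form

$$\omega_{\mu\nu} = \begin{pmatrix} 0 & -1_n \\ 1_n & 0 \end{pmatrix}$$

which is to say $\omega = dp_i\wedge dq^i$.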

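To define the Poisson structure, we first note that we can repeat the map (2.89) to turn any function $f$ into a vector field $X_f$ obeying $\iota_{X_f}\omega = -df$. But we can then feed these vector fields back into the original 2-form $\omega$. This gives us a Poisson bracket,

$$\{f,g\} = \omega(X_g,X_f)$$

Or, in components,

$$\{f,g\} = \omega^{\mu\nu}\,\partial_\mu f\,\partial_\nu g$$

There are many other ways to write this Poisson bracket structure in invariant form. For example, backtracking through various definitions we find

$$\{f,g\} = \iota_{X_f}\iota_{X_g}\omega = -\iota_{X_f}dg = -X_f(g) = X_g(f)$$

The equation of motion in Poisson bracket structure is then

$$\frac{df}{dt} = \{f,H\} = X_H(f) = \mathcal{L}_{X_H}f$$

which tells us that the Lie derivative along $X_H$ generates time evolution.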
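Here is a minimal numerical sketch of this machinery for a single degree of freedom. It assumes coordinates $x^\mu = (q,p)$, the symplectic form $\omega_{\mu\nu}$ in the block form given above, and the example Hamiltonian $H = \frac{1}{2}(q^2 + p^2)$. It builds $X_H^\mu = \omega^{\mu\nu}\partial_\nu H$, checks the component form of (2.89), and evaluates the Poisson bracket $\{q,p\}$. The helper names and the step size are hypothetical.

```python
# Sketch only: hypothetical helpers; coordinates x^mu = (q, p).

def inverse_2x2(m):
    """Inverse of a 2x2 matrix stored as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


omega_lower = [[0.0, -1.0],
               [1.0, 0.0]]                 # omega_{mu nu}, i.e. omega = dp ^ dq
omega_upper = inverse_2x2(omega_lower)    # omega^{mu nu}


def grad(f, x, h=1e-5):
    """Central-difference components d_mu f."""
    out = []
    for i in range(2):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        out.append((f(xp) - f(xm)) / (2 * h))
    return out


def hamiltonian_vector_field(H, x):
    """X_H^mu = omega^{mu nu} d_nu H."""
    dH = grad(H, x)
    return [sum(omega_upper[m][n] * dH[n] for n in range(2)) for m in range(2)]


def poisson_bracket(f, g, x):
    """{f, g} = omega^{mu nu} d_mu f d_nu g."""
    df, dg = grad(f, x), grad(g, x)
    return sum(omega_upper[m][n] * df[m] * dg[n] for m in range(2) for n in range(2))


H = lambda x: 0.5 * (x[0] ** 2 + x[1] ** 2)   # harmonic oscillator (example)
x0 = [0.3, -1.7]
XH = hamiltonian_vector_field(H, x0)          # expect (dq/dt, dp/dt) = (p, -q)

# Component form of (2.89): X_H^mu omega_{mu nu} = -d_nu H.
lhs = [sum(XH[m] * omega_lower[m][n] for m in range(2)) for n in range(2)]
rhs = [-v for v in grad(H, x0)]

qp_bracket = poisson_bracket(lambda x: x[0], lambda x: x[1], x0)   # expect {q, p} = 1
```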

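We haven’t yet explained why the symplectic two-form must be closed, $d\omega = 0$. You can check that this is needed so that the Poisson bracket obeys the Jacobi identity. Alternatively, it ensures that the symplectic form itself is invariant under Hamiltonian flow, in the sense that $\mathcal{L}_{X_H}\omega = 0$. To see this, we use (2.86)

$$\mathcal{L}_{X_H}\omega = (d\iota_{X_H} + \iota_{X_H}d)\,\omega = \iota_{X_H}d\omega$$

The second equality follows from the fact that $d(\iota_{X_H}\omega) = -d(dH) = 0$. If we insist that $d\omega = 0$ then we find $\mathcal{L}_{X_H}\omega = 0$ as promised.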

Thermodynamics

The state space of a thermodynamic system is a manifold $M$. For the ideal gas, this is a two-dimensional manifold with coordinates provided by, for example, the pressure $p$ and volume $V$. More complicated systems can have a higher dimensional state space, but the dimension is always even since, like in classical mechanics, thermodynamical variables come in conjugate pairs.

When we first learn the laws of thermodynamics, we have to make up some strange new notation, $\text{đ}$, which then never rears its head again. For example, the first law of thermodynamics is written as

$$dE = \text{đ}Q + \text{đ}W$$

Here $dE$ is the infinitesimal change of energy in the system. The first law of thermodynamics, as written above, states that this decomposes into the heat flowing into the system $\text{đ}Q$ and the work done on the system $\text{đ}W$.

Why the stupid notation? Well, the energy $E$ is a function over the state space and this means that we can write the change of the energy as $dE$. But there is no such function $Q$ or $W$ and, correspondingly, $\text{đ}Q$ and $\text{đ}W$ are not exact differentials. Indeed, we have $\text{đ}W = -p\,dV$ and later, after we introduce the second law, we learn that $\text{đ}Q = T\,dS$, with $T$ the temperature and $S$ the entropy, both of which are functions over $M$.

This is much more natural in the language of forms. All of the terms in the first law are one-forms. But the transfer of heat, $T\,dS$, and the work, $-p\,dV$, are not exact one-forms and so can’t be written as $d(\text{something})$. In contrast, $dE$ is an exact one-form. That’s what the $\text{đ}$ notation is really telling us: it’s the way of denoting non-exact one-forms before we had a notion of differential geometry.

The real purpose of the first law of thermodynamics is to define the energy functional $E$. The 19th century version of the statement is something like: “the amount of work required to change an isolated system is independent of how the work is performed”. A more modern rendering would be: “the sum of the work and heat is an exact one-form”.
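A quick way to see that $-p\,dV$ is not exact is to integrate it around a closed cycle in the state space. An exact one-form must give zero, since $\oint dE = 0$ for any single-valued $E$. This sketch assumes a monatomic ideal gas, for which $E = \frac{3}{2}pV$, and a rectangular cycle in the $(p,V)$ plane chosen purely for illustration. The helper names are hypothetical.

```python
# Sketch only: hypothetical helpers; points in state space are (p, V) pairs.

def loop_integral(one_form, vertices, steps=1000):
    """Integrate a(p, V) dp + b(p, V) dV around a closed polygon in the (p, V)
    plane, using the midpoint rule on each straight edge."""
    total = 0.0
    for (p0, V0), (p1, V1) in zip(vertices, vertices[1:] + vertices[:1]):
        dp, dV = (p1 - p0) / steps, (V1 - V0) / steps
        for k in range(steps):
            s = (k + 0.5) / steps
            a, b = one_form(p0 + s * (p1 - p0), V0 + s * (V1 - V0))
            total += a * dp + b * dV
    return total


work = lambda p, V: (0.0, -p)              # the one-form -p dV
energy = lambda p, V: (1.5 * V, 1.5 * p)   # dE for E = (3/2) p V
cycle = [(1.0, 1.0), (1.0, 3.0), (2.0, 3.0), (2.0, 1.0)]   # rectangle of (p, V) corners

W_cycle = loop_integral(work, cycle)       # non-zero: -p dV is not exact
E_cycle = loop_integral(energy, cycle)     # zero up to rounding: dE is exact
```

The work around this cycle comes out equal in magnitude to the area enclosed in the $(p,V)$ plane, while the integral of $dE$ vanishes.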

2.4.3 A Sniff of de Rham Cohomology

The exterior derivative is a map which squares to zero, $d^2 = 0$. It turns out that one can have a lot of fun with such maps. We will now explore a little bit of this fun.

First a repeat of definitions we met already: a $p$-form $\omega$ is closed if $d\omega = 0$ everywhere. A $p$-form $\omega$ is exact if $\omega = d\eta$ everywhere for some $\eta$. Because $d^2 = 0$, exact implies closed. However, the converse is not necessarily true. It turns out that the way in which closed forms fail to be exact captures interesting facts about the topology of the underlying manifold.

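We’ve met this kind of question before. In electromagnetism, we have a magnetic field $\mathbf{B}$ which obeys $\nabla\cdot\mathbf{B} = 0$. We then argue that this means we can write the magnetic field as $\mathbf{B} = \nabla\times\mathbf{A}$. This is more properly expressed in the language of forms. As we saw in the previous section, the magnetic field is really a 2-form

$$B = B_1\,dx^2\wedge dx^3 + B_2\,dx^3\wedge dx^1 + B_3\,dx^1\wedge dx^2$$

We computed the exterior derivative in (2.84); it is

$$dB = \left(\frac{\partial B_1}{\partial x^1} + \frac{\partial B_2}{\partial x^2} + \frac{\partial B_3}{\partial x^3}\right)dx^1\wedge dx^2\wedge dx^3$$

We see that the Maxwell equation $\nabla\cdot\mathbf{B} = 0$ is really the statement that $B$ is a closed two-form, obeying $dB = 0$. We also saw in Section 2.4.1 that if we write $B = dA$ for some one-form $A$, then the components are given by $\mathbf{B} = \nabla\times\mathbf{A}$. Clearly writing $B = dA$ ensures that $dB = 0$. But when is the converse true? We have the following statement (which we leave unproven)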

Theorem (The Poincaré Lemma): On $\mathbb{R}^n$, closed implies exact.

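Since we’ve spent a lot of time mapping manifolds to $\mathbb{R}^n$, this also has consequences for a general manifold $M$. It means that if $\omega$ is a closed $p$-form, then in any sufficiently small neighbourhood $U\subset M$ (for example, one that a chart maps onto an open ball in $\mathbb{R}^n$) it is always possible to find an $\eta\in\Lambda^{p-1}(U)$ such that $\omega = d\eta$ on $U$. The catch is that it may not be possible to find such an $\eta$ everywhere on the manifold.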

An Example

Consider the one-dimensional manifold $M = \mathbb{R}$. We can take a one-form $\omega = f(x)\,dx$. This is always closed because it is a top form. It is also exact. We introduce the function

$$g(x) = \int_0^x dx'\,f(x')$$

Then $\omega = dg$.

Now consider the topologically more interesting one-dimensional manifold $S^1$, which we can view as the phase $e^{i\theta}\in\mathbb{C}$. We can introduce the form $\omega = d\theta$ on $S^1$. The way it’s written makes it look like it’s an exact form, but this is an illusion because, as we stressed in Section 2.1, $\theta$ is not a good coordinate everywhere on $S^1$ because it’s not single valued. Indeed, it’s simple to see that there is no single-valued function $g$ on $S^1$ such that $\omega = dg$: if there were, we would have $\oint_{S^1}\omega = 0$, whereas $\oint_{S^1}d\theta = 2\pi$. So on $S^1$, we can construct a form which, locally, can be written as $d\theta$ but globally cannot be written as $dg$ for any function $g$. So we have a form that is closed but not exact.

Another Example

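On $\mathbb{R}^2$, the Poincaré lemma ensures that all closed forms are exact. However, things change if we remove a single point and consider $\mathbb{R}^2 - \{0\}$. Consider the one-form defined by

$$\omega = -\frac{y}{x^2+y^2}\,dx + \frac{x}{x^2+y^2}\,dy$$

This is not a smooth one-form on $\mathbb{R}^2$ because of the divergence at the origin. But removing that point means that $\omega$ becomes acceptable. We can check that $\omega$ is closed,

$$d\omega = -\frac{\partial}{\partial y}\left(\frac{y}{x^2+y^2}\right)dy\wedge dx + \frac{\partial}{\partial x}\left(\frac{x}{x^2+y^2}\right)dx\wedge dy = 0$$

where the $= 0$ follows from a little bit of algebra. $\omega$ is exact if we can find a function $f$, defined everywhere on $\mathbb{R}^2 - \{0\}$, such that $\omega = df$, which means

$$\frac{\partial f}{\partial x} = -\frac{y}{x^2+y^2}\quad\text{and}\quad\frac{\partial f}{\partial y} = \frac{x}{x^2+y^2}$$

We can certainly integrate these equations; the result is

$$f(x,y) = \tan^{-1}\left(\frac{y}{x}\right) + \text{constant}$$

But this is not a smooth function everywhere on $\mathbb{R}^2 - \{0\}$. This means that we can’t, in fact, write $\omega = df$ for a well defined function on $\mathbb{R}^2 - \{0\}$. We learn that removing a point makes a big difference: now closed no longer implies exact.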
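A numerical check makes the failure of exactness vivid. If $\omega = df$ for a single-valued function $f$ on $\mathbb{R}^2 - \{0\}$, then $\oint\omega = 0$ around every closed loop. The sketch below integrates the one-form above around two example unit circles. The one enclosing the origin gives $2\pi$, and the one that does not enclose it gives zero. The helper names and the number of quadrature steps are hypothetical choices.

```python
import math

# Sketch only: hypothetical helpers; midpoint rule along parameterised circles.


def omega(point):
    """The one-form (-y dx + x dy) / (x^2 + y^2) on R^2 minus the origin."""
    x, y = point
    r2 = x * x + y * y
    return (-y / r2, x / r2)


def circle(cx, cy, R):
    """A circle parameterised by t in [0, 2 pi], with its tangent vector."""
    position = lambda t: (cx + R * math.cos(t), cy + R * math.sin(t))
    velocity = lambda t: (-R * math.sin(t), R * math.cos(t))
    return position, velocity


def loop_integral(form, position, velocity, steps=4000):
    """Midpoint-rule approximation of the integral of form_mu dx^mu/dt over t in [0, 2 pi]."""
    dt = 2 * math.pi / steps
    total = 0.0
    for k in range(steps):
        t = (k + 0.5) * dt
        w, v = form(position(t)), velocity(t)
        total += (w[0] * v[0] + w[1] * v[1]) * dt
    return total


around_origin = loop_integral(omega, *circle(0.0, 0.0, 1.0))     # close to 2 pi
avoiding_origin = loop_integral(omega, *circle(3.0, 0.0, 1.0))   # close to 0
```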

There is a similar story for $\mathbb{R}^3 - \{0\}$. Indeed, this is how magnetic monopoles sneak back into physics, despite being forbidden by the Maxwell equation $\nabla\cdot\mathbf{B} = 0$. You can learn more about this in the lectures on Gauge Theory.

Betti Numbers

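We denote the set of all closed $p$-forms on a manifold $M$ as $Z^p(M)$. Equivalently, $Z^p(M)$ is the kernel of the map $d: \Lambda^p(M)\to\Lambda^{p+1}(M)$.

We denote the set of all exact $p$-forms on a manifold $M$ as $B^p(M)$. Equivalently, $B^p(M)$ is the range of $d: \Lambda^{p-1}(M)\to\Lambda^p(M)$.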

The de Rham cohomology group is defined to be

$$H^p(M) = Z^p(M)/B^p(M)$$

The quotient here is defined by an equivalence relation. Two closed forms $\omega,\omega'\in Z^p(M)$ are said to be equivalent if $\omega = \omega' + \eta$ for some $\eta\in B^p(M)$. We say that $\omega$ and $\omega'$ sit in the same equivalence class $[\omega]$. The cohomology group $H^p(M)$ is the set of equivalence classes; in other words, it consists of closed forms mod exact forms.

The Betti numbers $b_p$ of a manifold $M$ are defined as

$$b_p = \dim H^p(M)$$

It turns out that these are always finite for a compact manifold. The Betti number $b_0 = 1$ for any connected manifold. This can be traced to the existence of constant functions, which are clearly closed but, because there are no $(-1)$-forms, are not exact. The higher Betti numbers are non-zero only if the manifold has some interesting topology. Finally, the Euler character is defined as the alternating sum of Betti numbers,

$$\chi(M) = \sum_p(-1)^p\,b_p \tag{2.90}$$

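Here are some simple examples. We’ve already seen that the circle $S^1$ has a closed, non-exact one-form. This means that $b_0 = b_1 = 1$ and $\chi = 0$. The sphere $S^2$ has only $b_0 = b_2 = 1$ and $\chi = 2$. The torus $T^2$ has $b_0 = b_2 = 1$, $b_1 = 2$ and $\chi = 0$.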

2.4.4 Integration

We have learned how to differentiate on manifolds by using a vector field $X$. Now it is time to learn how to integrate. It turns out that the things that we integrate on manifolds are forms.

Integrating over Manifolds

To start, we need to orient ourselves. A volume form, or orientation, on a manifold of dimension $n$ is a nowhere-vanishing top form $v$. Any top form has just a single component and can be locally written as

$$v = v(x)\,dx^1\wedge\ldots\wedge dx^n$$

where we require $v(x)\neq 0$. If such a top form exists everywhere on the manifold, then $M$ is said to be orientable.

The orientation is called right-handed if $v(x) > 0$ everywhere, and left-handed if $v(x) < 0$ everywhere. Given one volume form $v$, we can always construct another by multiplying by a function, giving $\tilde v = fv$ where $f(x) > 0$ everywhere or $f(x) < 0$ everywhere.

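It’s not enough to just write down a volume form with $v(x)\neq 0$ locally. We must also ensure that we can patch these volume forms together over the manifold, without the handedness changing. Suppose that we have two sets of coordinates, $\{x^\mu\}$ and $\{\tilde x^\mu\}$, that overlap on some region. In the new coordinates, the volume form is given by

$$v = v(x)\,\frac{\partial x^1}{\partial\tilde x^{\mu_1}}d\tilde x^{\mu_1}\wedge\ldots\wedge\frac{\partial x^n}{\partial\tilde x^{\mu_n}}d\tilde x^{\mu_n} = v(x)\,\det\left(\frac{\partial x^\mu}{\partial\tilde x^\nu}\right)d\tilde x^1\wedge\ldots\wedge d\tilde x^n$$

which has the same orientation provided

$$\det\left(\frac{\partial x^\mu}{\partial\tilde x^\nu}\right) > 0 \tag{2.91}$$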

Non-orientable manifolds cannot be covered by overlapping charts such that (2.91) holds. Examples include the Möbius strip and real projective space $\mathbb{RP}^n$ for $n$ even. (In contrast $\mathbb{RP}^n$ is orientable for $n$ odd, and $\mathbb{CP}^n$ is orientable for all $n$.) In these lectures, we deal only with orientable manifolds.

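Given a volume form $v$ on $M$, we can integrate any function $f: M\to\mathbb{R}$ over the manifold. In a chart $\phi: \mathcal{O}\to U$, with coordinates $x^\mu$, we have

$$\int_{\mathcal{O}} f\,v = \int_U d^nx\;f(x)\,v(x)$$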

On the right-hand-side, we’re just doing normal integration over some part of . The volume form is playing the role of a measure, telling us how much to weight various parts of the integral. To integrate over the entire manifold, we divide the manifold up into different regions, each covered by a single chart. We then perform the integral over each region and sum the results.

Integrating over Submanifolds

We don’t have to integrate over the full manifold . We can integrate over some lower dimensional submanifold.

A manifold $\Sigma$ with dimension $k < n$ is a submanifold of $M$ if we can find a map $\phi: \Sigma\to M$ which is one-to-one (which ensures that $\Sigma$ doesn’t intersect itself in $M$) and $\phi_*: T_p(\Sigma)\to T_{\phi(p)}(M)$ is one-to-one.

We can then integrate a $k$-form $\omega$ on $M$ over a $k$-dimensional submanifold $\Sigma$. We do this by pulling back the $k$-form to $\Sigma$ and writing

$$\int_{\phi(\Sigma)}\omega = \int_\Sigma\phi^*\omega$$

For example, suppose that we have a one-form $A$ living over $M$. If $C$ is a one-dimensional manifold, then we can introduce a map $\sigma: C\to M$ which defines a non-intersecting, one-dimensional curve $\sigma(C)$ which is a submanifold of $M$. We can then pull back $A$ onto this curve and integrate to get

$$\int_{\sigma(C)}A = \int_C\sigma^*A$$

This probably looks more familiar in coordinates. If the curve traces out a path $x^\mu(\tau)$ in $M$, we have

$$\int_C\sigma^*A = \int d\tau\;A_\mu(x)\,\frac{dx^\mu}{d\tau}$$

But this is precisely the way the worldline of a particle couples to the electromagnetic field, as we previously saw in (1.21).

2.4.5 Stokes’ Theorem

Until now, we have considered only smooth manifolds. There is a slight generalisation that will be useful. We define a manifold with boundary in the same way as a manifold, except the charts map $\phi: \mathcal{O}\to U$ where $U$ is an open subset of $\mathbb{R}^n_+ = \{(x^1,\ldots,x^n) : x^n\geq 0\}$. The boundary has co-dimension 1 and is denoted $\partial M$: it is the submanifold with coordinates $x^n = 0$.

Consider a manifold $M$ with boundary $\partial M$. If the dimension of the manifold is $n$ then for any $(n-1)$-form $\omega$, we have the following simple result

$$\int_M d\omega = \int_{\partial M}\omega \tag{2.92}$$

This is Stokes’ theorem.

We do not prove Stokes’ theorem here. The proof is fairly tedious, and does not differ greatly from the proofs of other things that you’ve called Stokes’ theorem (or Gauss’ divergence theorem) in the past. (See, for example, the lectures on Vector Calculus.) However, the wonderful thing about (2.92) is the way in which it unifies many results in a single elegant formula. To see this, we simply need to look at a few examples.

The Mother of all Integral Theorems

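First, consider $M$ to be the interval $[a,b]$, with coordinate $x$. The 0-form $\omega = \omega(x)$ is simply a function and $d\omega = (d\omega/dx)\,dx$. In this case, the two sides of Stokes’ theorem can be evaluated to give

$$\int_M d\omega = \int_a^b\frac{d\omega}{dx}\,dx\quad\text{and}\quad\int_{\partial M}\omega = \omega(b) - \omega(a)$$

Equating the two, we see that Stokes’ theorem is simply a restatement of the fundamental theorem of calculus.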

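Next, we take $M\subset\mathbb{R}^2$ to be a manifold with boundary. We introduce a one-form with coordinates $x^1, x^2$,

$$\omega = \omega_1\,dx^1 + \omega_2\,dx^2\quad\Rightarrow\quad d\omega = \left(\frac{\partial\omega_2}{\partial x^1} - \frac{\partial\omega_1}{\partial x^2}\right)dx^1\wedge dx^2$$

In this case, the ingredients in Stokes’ theorem are

$$\int_M d\omega = \int_M\left(\frac{\partial\omega_2}{\partial x^1} - \frac{\partial\omega_1}{\partial x^2}\right)dx^1dx^2\quad\text{and}\quad\int_{\partial M}\omega = \oint_{\partial M}\left(\omega_1\,dx^1 + \omega_2\,dx^2\right)$$

Equating the two gives the result usually referred to as Green’s theorem in the plane.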
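Green’s theorem in the plane can be checked numerically on a simple case. This sketch assumes $M$ is the unit square and uses the example components $\omega_1 = -y^2$ and $\omega_2 = xy$, for which $d\omega = 3y\,dx\wedge dy$ and both sides equal $3/2$. The boundary is traversed anticlockwise, which is the orientation for which Stokes’ theorem holds with the standard orientation $dx\wedge dy$. The helper names and step counts are hypothetical.

```python
# Sketch only: hypothetical helpers; midpoint rules on the unit square.

def partial(f, i, h=1e-5):
    """Return a function approximating d_i f by central differences, for f(x, y)."""
    if i == 0:
        return lambda x, y: (f(x + h, y) - f(x - h, y)) / (2 * h)
    return lambda x, y: (f(x, y + h) - f(x, y - h)) / (2 * h)


def boundary_integral(w1, w2, steps=1000):
    """Anticlockwise integral of w1 dx + w2 dy around the unit square (midpoint rule)."""
    corners = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    total = 0.0
    for (x0, y0), (x1, y1) in zip(corners, corners[1:] + corners[:1]):
        for k in range(steps):
            s = (k + 0.5) / steps
            x, y = x0 + s * (x1 - x0), y0 + s * (y1 - y0)
            total += (w1(x, y) * (x1 - x0) + w2(x, y) * (y1 - y0)) / steps
    return total


def area_integral(f, steps=200):
    """Midpoint-rule integral of f(x, y) dx dy over the unit square."""
    h = 1.0 / steps
    return h * h * sum(f((i + 0.5) * h, (j + 0.5) * h)
                       for i in range(steps) for j in range(steps))


w1 = lambda x, y: -y ** 2          # example components omega_1, omega_2
w2 = lambda x, y: x * y
dw = lambda x, y: partial(w2, 0)(x, y) - partial(w1, 1)(x, y)   # coefficient of dx^dy

lhs = area_integral(dw)            # integral of d(omega) over M
rhs = boundary_integral(w1, w2)    # integral of omega over the boundary
```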

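Finally, consider $M\subset\mathbb{R}^3$ to be a manifold with boundary, with a 2-form

$$\omega = \omega_1\,dx^2\wedge dx^3 + \omega_2\,dx^3\wedge dx^1 + \omega_3\,dx^1\wedge dx^2$$

The right-hand-side of Stokes’ theorem is

$$\int_{\partial M}\omega = \int_{\partial M}\left(\omega_1\,dx^2dx^3 + \omega_2\,dx^3dx^1 + \omega_3\,dx^1dx^2\right) = \int_{\partial M}\boldsymbol{\omega}\cdot d\mathbf{S}$$

Meanwhile, we computed the exterior derivative of a 2-form in (2.84). The left-hand-side of Stokes’ theorem then gives

$$\int_M d\omega = \int_M\left(\frac{\partial\omega_1}{\partial x^1} + \frac{\partial\omega_2}{\partial x^2} + \frac{\partial\omega_3}{\partial x^3}\right)dx^1dx^2dx^3 = \int_M\nabla\cdot\boldsymbol{\omega}\;dV$$

This time, equating the two gives us Gauss’ divergence theorem.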