Researcher: Hong Ye Tan

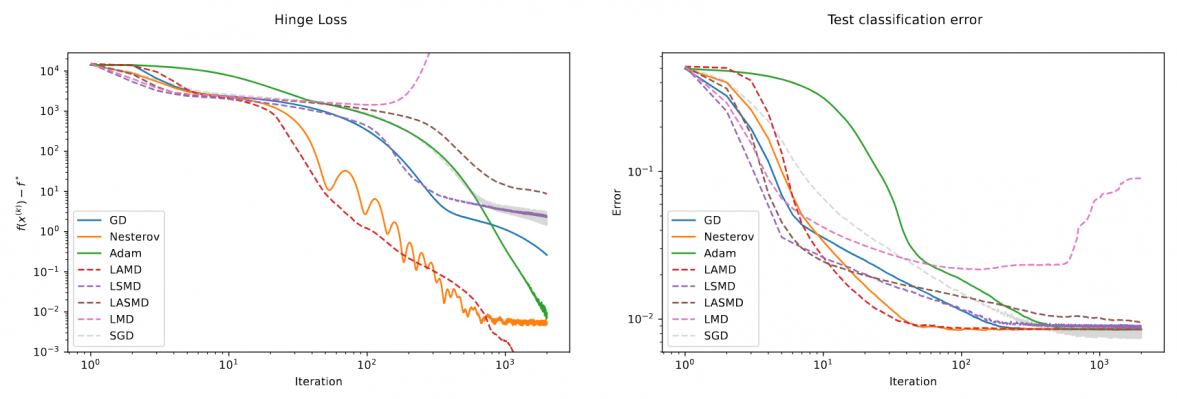

Learning-to-optimize (L2O) is an emerging research area in large-scale optimization with applications in data science. Recently, researchers have proposed a novel L2O framework called learned mirror descent (LMD), based on the classical mirror descent (MD) algorithm with learnable mirror maps parameterized by input-convex neural networks. The LMD approach has been shown to significantly accelerate convex solvers while inheriting the convergence properties of the classical MD algorithm. This work proposes several practical extensions of the LMD algorithm that address its instability and improve its scalability and feasibility for high-dimensional problems. We first propose accelerated and stochastic variants of LMD, leveraging classical momentum-based acceleration and stochastic optimization techniques to improve the convergence rate and reduce the per-iteration computational cost. Moreover, for the particular application of training neural networks, we derive a novel and efficient parameterization for the mirror potential, exploiting the equivariant structure of the training problems to significantly reduce the dimensionality of the underlying problem. We provide theoretical convergence guarantees for our schemes under standard assumptions and demonstrate their effectiveness in various computational imaging and machine learning applications, such as image inpainting and the training of support vector machines and deep neural networks.
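For readers unfamiliar with the classical MD algorithm that LMD builds on, the following is a minimal sketch of one standard instance: mirror descent on the probability simplex with the negative-entropy mirror potential. It is not the learned method described above, where the mirror map is parameterized by an input-convex neural network; the function name `mirror_descent_simplex`, the linear test objective, and the step size are assumptions chosen purely for illustration.

```python
import numpy as np

def mirror_descent_simplex(grad_f, x0, step_size, num_iters):
    """Classical mirror descent with the negative-entropy potential
    psi(x) = sum_i x_i * log(x_i), restricted to the probability simplex.

    The mirror map is grad psi(x) = log(x) + 1, and mapping back to the
    simplex amounts to a softmax. (Hypothetical helper for illustration.)
    """
    x = np.asarray(x0, dtype=float)
    for _ in range(num_iters):
        # Gradient step in the dual (mirror) space; the constant +1 from
        # grad psi cancels after normalization, so it is omitted.
        dual = np.log(x) - step_size * grad_f(x)
        # Map back to the primal space (softmax); subtract the max for
        # numerical stability before exponentiating.
        dual -= dual.max()
        x = np.exp(dual)
        x /= x.sum()
    return x

# Illustrative problem (an assumption, not from the source):
# minimize the linear cost <c, x> over the simplex.
c = np.array([0.3, 0.1, 0.5])
x_star = mirror_descent_simplex(lambda x: c, np.ones(3) / 3,
                                step_size=0.5, num_iters=200)
```

In this toy problem the iterates concentrate on the coordinate with the smallest cost. LMD, as described above, replaces the fixed entropy potential with a learned one, so the geometry of the update adapts to the problem class.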