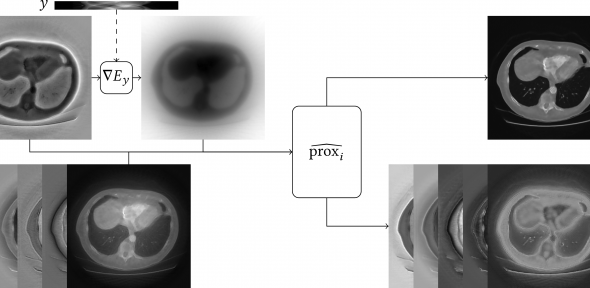

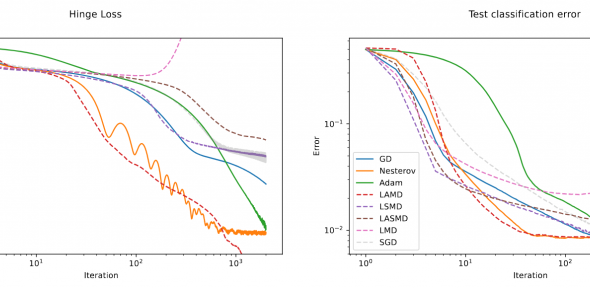

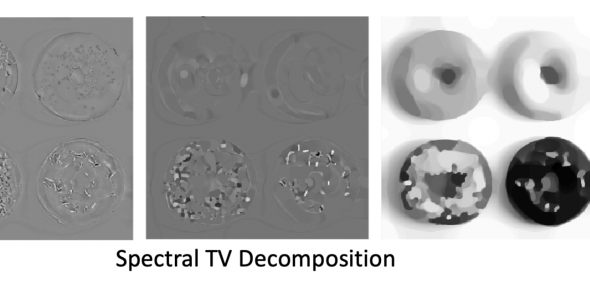

We study optimization in its broadest sense: from the mathematical foundations of optimization algorithms, to the development of new methods for solving optimization problems (with and without machine learning), to new frameworks for solving machine learning problems.