3 Quantum Gases

In this section we will discuss situations where quantum effects are important. We’ll still restrict attention to gases — meaning a bunch of particles moving around and barely interacting — but one of the first things we’ll see is how versatile the idea of a gas can be in the quantum world. We’ll use it to understand not just the traditional gases that we met in the previous section but also light and, ironically, certain properties of solids. In the latter part of this section, we will look at what happens to gases at low temperatures where their behaviour is dominated by quantum statistics.

3.1 Density of States

We start by introducing the important concept of the density of states. To illustrate this, we’ll return once again to the ideal gas trapped in a box with sides of length and volume . Viewed quantum mechanically, each particle is described by a wavefunction. We’ll impose periodic boundary conditions on this wavefunction (although none of the physics that we’ll discuss in this course will be sensitive to the choice of boundary condition). If there are no interactions between particles, the energy eigenstates are simply plane waves,

Boundary conditions require that the wavevector is quantized as

and the energy of the particle is

with . The quantum mechanical single particle partition function (1.21) is given by the sum over all energy eigenstates,

The question is: how do we do the sum? The simplest way is to approximate it by an integral. Recall from the previous section that the thermal wavelength of the particle is defined to be

The exponents that appear in the sum are all of the form , up to some constant factors. For any macroscopic size box, (a serious understatement! Actually ) which ensures that there are many states with all of which contribute to the sum. (There will be an exception to this at very low temperatures which will be the focus of Section 3.5.3). We therefore lose very little by approximating the sum by an integral. We can write the measure of this integral as

where, in the last equality, we have integrated over the angular directions to get , the area of the 2-sphere, leaving an integration over the magnitude and the Jacobian factor . For future applications, it will prove more useful to change integration variables at this stage. We work instead with the energy,

We can now write out integral as

| (3.90) |

where

| (3.91) |

is the density of states: counts the number of states with energy between and . Notice that we haven’t actually done the integral over in (3.90); instead this is to be viewed as a measure which we can integrate over any function of our choosing.

There is nothing particularly quantum mechanical about the density of states. Indeed, in the derivation above we have replaced the quantum sum with an integral over momenta which actually looks rather classical. Nonetheless, as we encounter more and more different types of gases, we’ll see that the density of states appears in all the calculations and it is a useful quantity to have at our disposal.

3.1.1 Relativistic Systems

Relativistic particles moving in spacetime dimensions have kinetic energy

| (3.92) |

Repeating the steps above, we find the density of states is given by

| (3.93) |

In particular, for massless particles, the density of states is

| (3.94) |

3.2 Photons: Blackbody Radiation

“It was an act of desperation. For six years I had struggled with the blackbody theory. I knew the problem was fundamental and I knew the answer. I had to find a theoretical explanation at any cost, except for the inviolability of the two laws of thermodynamics”

Max Planck

We now turn to our first truly quantum gas: light. We will consider a gas of photons — the quanta of the electromagnetic field — and determine a number of its properties, including the distribution of wavelengths. Or, in other words, its colour.

Below we will describe the colour of light at a fixed temperature. But this also applies (with a caveat) to the colour of any object at the same temperature. The argument for this is as follows: consider bathing the object inside the gas of photons. In equilibrium, the object sits at the same temperature as the photons, emitting as many photons as it absorbs. The colour of the object will therefore mimic that of the surrounding light.

For a topic that’s all about colour, a gas of photons is usually given a rather bland name — blackbody radiation. The reason for this is that any real object will exhibit absorption and emission lines due to its particular atomic make-up (this is the caveat mentioned above). We’re not interested in these details; we only wish to compute the spectrum of photons that a body emits because it’s hot. For this reason, one sometimes talks about an idealised body that absorbs photons of any wavelength and reflects none. At zero temperature, such an object would appear black: this is the blackbody of the title. We would like to understand its colour as we turn up the heat.

To begin, we need some facts about photons. The energy of a photon is determined by its wavelength or, equivalently, by its frequency to be

This is a special case of the relativistic energy formula (3.92) for massless particles, . The frequency is related to the (magnitude of the) wavevector by .

Photons have two polarization states (one for each dimension transverse to the direction of propagation). To account for this, the density of states (3.94) should be multiplied by a factor of two. The number of states available to a single photon with energy between and is therefore

Equivalently, the number of states available to a single photon with frequency between and is

| (3.95) |

where we’ve indulged in a slight abuse of notation since is not the same function as but is instead defined by the equation above. It is also worth pointing out an easy mistake to make when performing these kinds of manipulations with densities of states: you need to remember to rescale the interval to . This is most simply achieved by writing as we have above. If you miss this then you’ll get wrong by a factor of .

The final fact that we need is important: photons are not conserved. If you put six atoms in a box then they will still be there when you come back a month later. This isn’t true for photons. There’s no reason that the walls of the box can’t absorb one photon and then emit two. The number of photons in the world is not fixed. To demonstrate this, you simply need to turn off the light.

Because photon number is not conserved, we’re unable to define a chemical potential for photons. Indeed, even in the canonical ensemble we must already sum over states with different numbers of photons because these are all “accessible states”. (It is sometimes stated that we should work in the grand canonical ensemble at which is basically the same thing). This means that we should consider states with any number of photons.

We’ll start by looking at photons with a definite frequency . A state with such photons has energy . Summing over all gives us the partition function for photons at fixed frequency,

| (3.96) |

We now need to sum over all possible frequencies. As we’ve seen a number of times, independent partition functions multiply, which means that the s add. We only need to know how many photon states there are with some frequency . But this is what the density of states (3.95) tells us. We have

| (3.97) |

3.2.1 Planck Distribution

From the partition function (3.97) we can calculate all interesting quantities for a gas of light. For example, the energy density stored in the photon gas is

| (3.98) |

However, before we do the integral over frequency, there’s some important information contained in the integrand itself: it tells us the amount of energy carried by photons with frequency between and

| (3.99) |

This is the Planck distribution. It is plotted above for various temperatures. As you can see from the graph, for hot gases the maximum in the distribution occurs at a lower wavelength or, equivalently, at a higher frequency. We can easily determine where this maximum occurs by finding the solution to . It is

where solves . The equation above is often called Wien’s displacement law. Roughly speaking, it tells you the colour of a hot object.

To compute the total energy in the gas of photons, we need to do the integration in (3.98). To highlight how the total energy depends on temperature, it is useful to perform the rescaling , to get

The integral is tricky but doable. It turns out to be . (We will effectively prove this fact later in the course when we consider a more general class of integrals (3.116) which can be manipulated into the sum (3.117). The net result of this is to express the integral above in terms of the Gamma function and the Riemann zeta function: ). We learn that the energy density in a gas of photons scales is proportional to ,

Stefan-Boltzmann Law

The expression for the energy density above is closely related to the Stefan-Boltzmann law which describes the energy emitted by an object at temperature . That energy flux is defined as the rate of transfer of energy from the surface per unit area. It is given by

| (3.100) |

where

is the Stefan constant.

The factor of the speed of light in the middle equation of (3.100) appears because the flux is the rate of transfer of energy. The factor of comes because we’re not considering the flux emitted by a point source, but rather by an actual object whose size is bigger than the wavelength of individual photons. This means that the photon are only emitted in one direction: away from the object, not into it. Moreover, we only care about the velocity perpendicular to the object, which is where is the angle the photon makes with the normal. This means that rather than filling out a sphere of area surrounding the object, the actual flux of photons from any point on the object’s surface is given by

Radiation Pressure and Other Stuff

All other quantities of interest can be computed from the free energy,

We can remove the logarithm through an integration by parts to get,

From this we can compute the pressure due to electromagnetic radiation,

This is the equation of state for a gas of photons. The middle equation tells us that the pressure of photons is one third of the energy density — a fact which will be important in the Cosmology course.

We can also calculate the entropy and heat capacity . They are both most conveniently expressed in terms of the Stefan constant which hides most of the annoying factors,

3.2.2 The Cosmic Microwave Background Radiation

The cosmic microwave background, or CMB, is the afterglow of the big bang, a uniform light that fills the Universe. The intensity of this light was measured accurately by the FIRAS (far infrared absolute spectrophotometer) instrument on the COBE satellite in the early 1990s. The result is shown on the right, together with the theoretical curve for a blackbody spectrum at . It may look as if the error bars are large, but this is only because they have been multiplied by a factor of 400. If the error bars were drawn at the correct size, you wouldn’t be able to to see them.

This result is totally astonishing. The light has been traveling for 13.7 billion years, almost since the beginning of time itself. And yet we can understand it with ridiculous accuracy using such a simple calculation. If you’re not awed by this graph then you have no soul. You can learn more about this in the lectures on cosmology.

3.2.3 The Birth of Quantum Mechanics

The key conceptual input in the derivation of Planck’s formula (3.99) is that light of frequency comes in packets of energy . Historically, this was the first time that the idea of quanta arose in theoretical physics.

Let’s see what would happen in a classical world where light of frequency can have arbitrarily low intensity and, correspondingly, arbitrarily low energy. This is effectively what happens in the regime of the Planck distribution where the minimum energy is completely swamped by the temperature. There we can approximate

and Planck’s distribution formula (3.99) reduces to

Notice that all hints of the quantum have vanished. This is the Rayleigh-Jeans law for the distribution of classical radiation. It has a serious problem if we try to extrapolate it to high frequencies since the total energy, , diverges. This was referred to as the ultra-violet catastrophe. In contrast, in Planck’s formula (3.99) there is an exponential suppression at high frequencies. This arises because when , the temperature is not high enough to create even a single photon. By imposing a minimum energy on these high frequency modes, quantum mechanics ensures that they become frozen out.

3.2.4 Max Planck (1858-1947)

“A new scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die”

Max Planck

Planck was educated in Munich and, after a brief spell in Kiel, moved to take a professorship in Berlin. (The same position that Boltzmann had failed to turn up for).

For much of his early career, Planck was adamantly against the idea of atoms. In his view the laws of thermodynamics were inviolable. He could not accept that they sat on a foundation of probability. In 1882, he wrote “atomic theory, despite its great successes, will ultimately have to be abandoned”.

Twenty years later, Planck had changed his mind. In 1900, he applied Boltzmann’s statistical methods to photons to arrive at the result we derived above, providing the first hints of quantum mechanics. However, the key element of the derivation — that light comes in quanta — was not emphasised by Planck. Later, when quantum theory was developed, Planck refused once more to accept the idea of probability underlying physics. This time he did not change his mind.

3.3 Phonons

It is hard to imagine substances much more different than a gas and a solid. It is therefore quite surprising that we can employ our study of gases to accurately understand certain properties of solids. Consider a crystal of atoms of the type shown in the figure. The individual atoms are stuck fast in position: they certainly don’t act like a gas. But the vibrations of the atoms — in other words, sound waves — can be treated using the same formalism that we introduced for photons.

3.3.1 The Debye Model

Quantum mechanics turns electromagnetic waves into discrete packets of energy called photons. In exactly the same way, sounds waves in solids also come in discrete packets. They are called phonons. We’ll take the energy of a phonon to again be of the form

| (3.101) |

where is now the speed of sound rather than the speed of light.

The density of states for phonons is the same as that of photons (3.95) with two exceptions: we must replace the speed of light with the speed of sound ; and phonons have three polarization states rather than two. There are two transverse polarizations (like the photon) but also a longitudinal mode. The density of states is therefore

There is one further important difference between phonons and photons. While light waves can have arbitrarily high frequency, sound waves cannot. This is because high frequency waves have small wavelengths, . But there is a minimum wavelength in the problem set by the spacing between atoms. it is not possible for sound waves to propagate through a solid with wavelength smaller than the atomic spacing because there’s nothing in the middle there to shake.

We will denote the maximum allowed phonon frequency as . The minimum wavelength, , should be somewhere around the lattice spacing between atoms, which is , so we expect that . But how can we work out the coefficient? There is a rather clever argument to determine due to Debye. (So clever that he gets his initial on the frequency and his name on the section heading). We start by counting the number of single phonon states,

The clever bit of the argument is to identify this with the number of degrees of freedom in the solid. This isn’t immediately obvious. The number of degrees of freedom in a lattice of atoms is since each atom can move in three directions. But the number of single phonon states is counting collective vibrations of all the atoms together. Why should they be equal?

To really see what’s going on, one should compute the correct energy eigenstates of the lattice and just count the number of single phonon modes with wavevectors inside the first Brillouin zone. (You can learn about Brillouin zones in the Applications of Quantum Mechanics lecture notes.) But to get the correct intuition, we can think in the following way: in general the solid will have many phonons inside it and each of these phonons could sit in any one of the single-phonon states that we counted above. Suppose that there are three phonons sitting in the same state. We say that this state is occupied three times. In this language, each of the states above can be occupied an arbitrary number of times, restricted only by the energy available. If you want to describe the total state of the system, you need to say how many phonons are in the first state, and how many are in the second state and so on. The number of one-phonon states is then playing the role of the number of degrees of freedom: it is the number of things you can excite.

The net result of this argument is to equate

We see that is related to the atomic spacing as we anticipated above, but now we have the coefficient too. However, in some sense, the argument of Debye is “answer analysis”. It was constructed to ensure that we have the right high-temperature behaviour that we will see below.

From the maximum frequency we can construct an associated energy scale, , and temperature scale,

This is known as the Debye temperature. It provides a way of characterising all solids: it is the temperature at which the highest frequency phonon starts to become excited. ranges from around for very soft materials such as lead through to for hard materials such as diamond. Most materials have Debye temperatures around room temperature ( or so).

Heat Capacity of Solids

All that remains is to put the pieces together. Like photons, the number of phonons is not conserved. The partition function for phonons of a fixed frequency, , is the same as for photons (3.96),

Summing over all frequencies, the partition function is then

where the partition function for a single phonon of frequency is the same as that of a photon (3.96). The total energy in sound waves is therefore

We again rescale the integration variable to so the upper limit of the integral becomes . Then we have

The integral is a function of . It has no analytic expression. However, we can look in the two extremes. Firstly, for we can replace the upper limit of the integral by infinity. We’re then left with the same definite integral that we appeared for photons, . In this low-temperature regime, the heat capacity is proportional to ,

| (3.102) |

It is often expressed in terms of the Debye temperature , so it reads

| (3.103) |

In contrast, at temperatures we only integrate over small values of , allowing us to Taylor expand the integrand,

This ensures that the energy grows linearly with and the heat capacity tends towards a constant value

| (3.104) |

This high-temperature behaviour has been known experimentally since the early 1800’s. It is called the Dulong-Petit law. Debye’s argument for the value of was basically constructed to reproduce the coefficient in the formula above. This was known experimentally, but also from an earlier model of vibrations in a solid due to Einstein. (You met the Einstein model in the first problem sheet). Historically, the real success of the Debye model was the correct prediction of the behaviour of at low temperatures.

In most materials the heat capacity is dominated by the phonon contribution. (In metals there is an additional contribution from conduction electrons that we will calculate in Section 3.6). The heat capacity of three materials is shown in Figure 16, together with the predictions from the Debye model. As you can see, it works very well! The deviation from theory at high temperatures is due to differences between and , the heat capacity at constant pressure.

What’s Wrong with the Debye Model?

As we’ve seen, the Debye model is remarkably accurate in capturing the heat capacities of solids. Nonetheless, it is a caricature of the physics. The most glaring problem is our starting point (3.101). The relationship between energy and frequency is fine; the mistake is the relationship between frequency and wavevector (or momentum) , namely . Equations of this type, relating energy and momentum, are called dispersion relations. It turns out that that the dispersion relation for phonons is a little more complicated.

It is not hard to compute the dispersion relation for phonons. (You will, in fact, do this calculation in the Applications of Quantum Mechanics course). For simplicity, we’ll work with a one dimensional periodic lattice of atoms as shown in the figure. The equilibrium position of each atom is and we impose periodic boundary conditions by insisting that . Let be the deviation from equilibrium, . If we approximate the bonds joining the atoms as harmonic oscillators, the Hamiltonian governing the vibrations is

where is a parameter governing the strength of the bonds between atoms. The equation of motion is

This is easily solved by the discrete Fourier transform. We make the ansatz

Plugging this into the equation of motion gives the dispersion relation

To compute the partition function correctly in this model, we would have to revisit the density of states using the new dispersion relation . The resulting integrals are messy. However, at low temperatures only the smallest frequency modes are excited and, for small , the function is approximately linear. This means that we get back to the dispersion relation that we used in the Debye model, , with the speed of sound given by . Moreover, at very high temperatures it is simple to check that this model gives the Dulong-Petit law as expected. It deviates from the Debye model only at intermediate temperatures and, even here, this deviation is mostly negligible.

3.4 The Diatomic Gas Revisited

With a bit of quantum experience under our belt, we can look again at the diatomic gas that we discussed in Section 2.4. Recall that the classical prediction for the heat capacity — — only agrees with experiment at very high temperatures. Instead, the data suggests that as the temperature is lowered, the vibrational modes and the rotational modes become frozen out. But this is exactly the kind of behaviour that we expect for a quantum system where there is a minimum energy necessary to excite each degree of freedom. Indeed, this “freezing out” of modes saved us from ultra-violet catastrophe in the case of blackbody radiation and gave rise to a reduced heat capacity at low temperatures for phonons.

Let’s start with the rotational modes, described by the Hamiltonian (2.66). Treating this as a quantum Hamiltonian, it has energy levels

The degeneracy of each energy level is . Thus the rotational partition function for a single molecule is

When , we can approximate the sum by the integral to get

which agrees with our result for the classical partition function (2.67).

In contrast, for all states apart from effectively decouple and we have simply . At these temperatures, the rotational modes are frozen at temperatures accessible in experiment so only the translational modes contribute to the heat capacity.

This analysis also explains why there is no rotational contribution to the heat capacity of a monatomic gas. One could try to argue this away by saying that atoms are point particles and so can’t rotate. But this simply isn’t true. The correct argument is that the moment of inertia of an atom is very small and the rotational modes are frozen. Similar remarks apply to rotation about the symmetry axis of a diatomic molecule.

The vibrational modes are described by the harmonic oscillator. You already computed the partition function for this on the first examples sheet (and, in fact, implicitly in the photon and phonon calculations above). The energies are

and the partition function is

At high temperatures , we can approximate the partition function as which again agrees with the classical result (2.68). At low temperatures , the partition function becomes . This is a contribution from the zero-point energy of the harmonic oscillator. It merely gives the expected additive constant to the energy per particle,

and doesn’t contribute the heat capacity. Once again, we see how quantum effects explain the observed behaviour of the heat capacity of the diatomic gas. The end result is a graph that looks like that shown in Figure 11.

3.5 Bosons

For the final two topics of this section, we will return again to the simple monatomic ideal gas. The classical treatment that we described in Section 2.2 has limitations. As the temperature decreases, the thermal de Broglie wavelength,

gets larger. Eventually it becomes comparable to the inter-particle separation, . At this point, quantum effects become important. If the particles are non-interacting, there is really only one important effect that we need to consider: quantum statistics.

Recall that in quantum mechanics, particles come in two classes: bosons and fermions. Which class a given particle falls into is determined by its spin, courtesy of the spin-statistics theorem. Integer spin particles are bosons. This means that any wavefunction must be symmetric under the exchange of two particles,

Particles with -integer spin are fermions. They have an anti-symmetrized wavefunction,

At low temperatures, the behaviour of bosons and fermions is very different. All familiar fundamental particles such as the electron, proton and neutron are fermions. But an atom that contains an even number of fermions acts as a boson as long as we do not reach energies large enough to dislodge the constituent particles from their bound state. Similarly, an atom consisting of an odd number of electrons, protons and neutrons will be a fermion. (In fact, the proton and neutron themselves are not fundamental: they are fermions because they contain three constituent quarks, each of which is a fermion. If the laws of physics were different so that four quarks formed a bound state rather than three, then both the proton and neutron would be bosons and, as we will see in the next two sections, nuclei would not exist!).

We will begin by describing the properties of bosons and then turn to a discussion of fermions to Section 3.6.

3.5.1 Bose-Einstein Distribution

We’ll change notation slightly from earlier sections and label the single particle quantum states of the system by . (We used previously, but will be otherwise occupied for most of this section). The single particle energies are then and we’ll assume that our particles are non-interacting. In that case, you might think that to specify the state of the whole system, you would need to say which state particle 1 is in, and which state particle 2 is in, and so on. But this is actually too much information because particle 1 and particle 2 are indistinguishable. To specify the state of the whole system, we don’t need to attach labels to each particle. Instead, it will suffice to say how many particles are in state 1 and how many particles are in state 2 and so on.

We’ll denote the number of particles in state as . If we choose to work in the canonical ensemble, we must compute the partition function,

where the sum is over all possible ways of partitioning particles into sets subject to the constraint that . Unfortunately, the need to impose this constraint makes the sums tricky. This means that the canonical ensemble is rather awkward when discussing indistinguishable particles. It turns out to be much easier to work in the grand canonical ensemble where we introduce a chemical potential and allow the total number of particles to fluctuate.

Life is simplest it we think of each state in turn. In the grand canonical ensemble, a given state can be populated by an arbitrary number of particles. The grand partition function for this state is

Notice that we’ve implicitly assumed that the sum above converges, which is true only if . But this should be true for all states . We will set the ground state to have energy , so the grand partition function for a Bose gas only makes sense if

| (3.105) |

Now we use the fact that all the occupation of one state is independent of any other. The full grand partition function is then

From this we can compute the average number of particles,

Here denotes the average number of particles in the state ,

| (3.106) |

This is the Bose-Einstein distribution. In what follows we will always be interested in the thermodynamic limit where fluctuations around the average are negligible. For this reason, we will allow ourselves to be a little sloppy in notation and we write the average number of particles in as instead of .

Notice that we’ve seen expressions of the form (3.106) already in the calculations for photons and phonons — see, for example, equation (3.96). This isn’t coincidence: both photons and phonons are bosons and the origin of this term in both calculations is the same: it arises because we sum over the number of particles in a given state rather than summing over the states for a single particle. As we mentioned in the Section on blackbody radiation, it is not really correct to think of photons or phonons in the grand canonical ensemble because their particle number is not conserved. Nonetheless, the equations are formally equivalent if one just sets .

In what follows, it will save ink if we introduce the fugacity

| (3.107) |

Since , we have .

Ideal Bose Gas

Let’s look again at a gas of non-relativistic particles, now through the eyes of quantum mechanics. The energy is

As explained in Section 3.1, we can replace the sum over discrete momenta with an integral over energies as long as we correctly account for the density of states. This was computed in (3.91) and we reproduce the result below:

| (3.108) |

From this, together with the Bose-Einstein distribution, we can easily compute the total number of particles in the gas,

| (3.109) |

There is an obvious, but important, point to be made about this equation. If we can do the integral (and we will shortly) we will have an expression for the number of particles in terms of the chemical potential and temperature: . That means that if we keep the chemical potential fixed and vary the temperature, then will change. But in most experimental situations, is fixed and we’re working in the grand canonical ensemble only because it is mathematically simpler. But nothing is for free. The price we pay is that we will have to invert equation (3.109) to get it in the form . Then when we change , keeping fixed, will change too. We have already seen an example of this in the ideal gas where the chemical potential is given by (2.62).

The average energy of the Bose gas is,

| (3.110) |

And, finally, we can compute the pressure. In the grand canonical ensemble this is,

We can manipulate this last expression using an integration by parts. Because , this becomes

| (3.111) |

This is implicitly the equation of state. But we still have a bit of work to do. Equation (3.110) gives us the energy as a function of and . And, by the time we have inverted (3.109), it gives us as a function of and . Substituting both of these into (3.111) will give the equation of state. We just need to do the integrals…

3.5.2 A High Temperature Quantum Gas is (Almost) Classical

Unfortunately, the integrals (3.109) and (3.110) look pretty fierce. Shortly, we will start to understand some of their properties, but first we look at a particularly simple limit. We will expand the integrals (3.109), (3.110) and (3.111) in the limit . We’ll figure out the meaning of this expansion shortly (although there’s a clue in the title of this section if you’re impatient). Let’s look at the particle density (3.109),

where we made the simple substitution . The integrals are all of the Gaussian type and can be easily evaluated by making one further substitution . The final answer can be conveniently expressed in terms of the thermal wavelength ,

| (3.112) |

Now we’ve got the answer, we can ask what we’ve done! What kind of expansion is ? From the above expression, we see that the expansion is consistent only if , which means that the thermal wavelength is much less than the interparticle spacing. But this is true at high temperatures: the expansion is a high temperature expansion.

At first glance, it is surprising that corresponds to high temperatures. When , so naively it looks as if . But this is too naive. If we keep the particle number fixed in (3.109) then must vary as we change the temperature, and it turns out that faster than . To see this, notice that to leading order we need to be constant, so .

High Temperature Equation of State of a Bose Gas

We now wish to compute the equation of state. We know from (3.111) that . So we need to compute using the same expansion as above. From (3.110), the energy density is

| (3.113) | |||||

The next part’s a little fiddly. We want to eliminate from the expression above in favour of . To do this we invert (3.112), remembering that we’re working in the limit and . This gives

which we then substitute into (3.113) to get

and finally we substitute this into to get the equation of state of an ideal Bose gas at high temperatures,

| (3.114) |

which reproduces the classical ideal gas, together with a term that we can identify as the second virial coefficient in the expansion (2.69). However, this correction to the pressure hasn’t arisen from any interactions among the atoms: it is solely due to quantum statistics. We see that the effect of bosonic statistics on the high temperature gas is to reduce the pressure.

3.5.3 Bose-Einstein Condensation

We now turn to the more interesting question: what happens to a Bose gas at low temperatures? Recall that for convergence of the grand partition function we require so that . Since the high temperature limit is , we’ll anticipate that quantum effects and low temperatures come about as .

Recall that the number density is given by (3.109) which we write as

| (3.115) |

where the function is one of a class of functions which appear in the story of Bose gases,

| (3.116) |

These functions are also known as polylogarithms and sometimes denoted as . The function is relevant for the particle number and the function appears in the calculation of the energy in (3.110). For reference, the gamma function has value . (Do not confuse these functions with the density of states . They are not related; they just share a similar name).

In Section 3.5.2 we looked at (3.115) in the limit. There we saw that but the function in just the right way to compensate and keep fixed. What now happens in the other limit, and . One might harbour the hope that and again everything balances nicely with remaining constant. We will now show that this can’t happen.

A couple of manipulations reveals that the integral (3.116) can be expressed in terms of a sum,

But the integral that appears in the last line above is nothing but the definition of the gamma function . This means that we can write

| (3.117) |

We see that is a monotonically increasing function of . Moreover, at , it is equal to the Riemann zeta function

For our particular problem it will be useful to know that .

Let’s now return to our story. As we decrease in (3.115), keeping fixed, and hence must both increase. But can’t take values greater than 1. When we reach , let’s denote the temperature as . We can determine by setting in equation (3.115),

| (3.118) |

What happens if we try to decrease the temperature below ? Taken at face value, equation (3.115) tells us that the number of particles should decrease. But that’s a stupid conclusion! It says that we can make particles disappear by making them cold. Where did they go? We may be working in the grand canonical ensemble, but in the thermodynamic limit we expect which is too small to explain our missing particles. Where did we misplace them? It looks as if we made a mistake in the calculation.

In fact, we did make a mistake in the calculation. It happened right at the beginning. Recall that back in Section 3.1 we replaced the sum over states with an integral over energies,

Because of the weight in the integrand, the ground state with doesn’t contribute to the integral. We can use the Bose-Einstein distribution (3.106) to compute the number of states that we expect to be missing because they sit in this ground state,

| (3.119) |

For most values of , there are just a handful of particles sitting in this lowest state and it doesn’t matter if we miss them. But as gets very very close to 1 (meaning ) then we get a macroscopic number of particles occupying the ground state.

It is a simple matter to redo our calculation taking into account the particles in the ground state. Equation (3.115) is replaced by

Now there’s no problem keeping fixed as we take close to 1 because the additional term diverges. This means that if we have finite , then as we decrease we can never get to . Instead, must level out around as .

For , the number of particles sitting in the ground state is . Some simple algebra allows us to determine that fraction of particles in the ground state is

| (3.120) |

At temperatures , a macroscopic number of atoms discard their individual identities and merge into a communist collective, a single quantum state so large that it can be seen with the naked eye. This is known as the Bose-Einstein condensate. It provides an exquisitely precise playground in which many quantum phenomena can be tested.

Bose-Einstein Condensates in the Lab

Bose-Einstein condensates (often shortened to BECs) of weakly interacting atoms were finally created in 1995, some 70 years after they were first predicted. These first BECs were formed of Rubidium, Sodium or Lithium and contained between atoms. The transition temperatures needed to create these condensates are extraordinarily small, around . Figure 19 shows the iconic colour enhanced plots that reveal the existence of the condensate. To create these plots, the atoms are stored in a magnetic trap which is then turned off. A picture is taken of the atom cloud a short time later when the atoms have travelled a distance . The grey UFO-like smudges above are the original pictures. From the spread of atoms, the momentum distribution of the cloud is inferred and this is what is shown in Figure 19. The peak that appears in the last two plots reveals that a substantial number of atoms were indeed sitting in the momentum ground state. (This is not a state because of the finite trap and the Heisenberg uncertainty relation). The initial discoverers of BECs, Eric Cornell and Carl Wieman from Boulder and Wolfgang Ketterle from MIT, were awarded the 2001 Nobel prize in physics.

Low Temperature Equation of State of a Bose Gas

The pressure of the ideal Bose gas was computed in (3.111). We can express this in terms of our new favourite functions (3.116) as

| (3.121) |

Formally there is also a contribution from the ground state, but it is which is a factor of smaller than the term above and can be safely ignored. At low temperatures, , we have and

So at low temperatures, the equation of state of the ideal Bose gas is very different from the classical, high temperature, behaviour. The pressure scales as (recall that there is a factor of lurking in the ). More surprisingly, the pressure is independent of the density of particles .

3.5.4 Heat Capacity: Our First Look at a Phase Transition

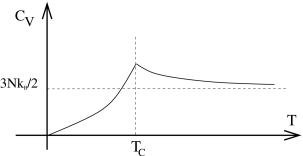

Let’s try to understand in more detail what happens as we pass through the critical temperature . We will focus on how the heat capacity behaves on either side of the critical temperature.

We’ve already seen in (3.121) that we can express the energy in terms of the function ,

so the heat capacity becomes

| (3.122) |

The first term gives a contribution both for and for . However, the second term includes a factor of and is a very peculiar function of temperature: for , it is fairly smooth, dropping off at . However, as , the fugacity rapidly levels off to the value . For , doesn’t change very much at all. The net result of this is that the second term only contributes when . Our goal here is to understand how this contribution behaves as we approach the critical temperature.

Let’s begin with the easy bit. Below the critical temperature, , only the first term in (3.122) contributes and we may happily set . This gives the heat capacity,

| (3.123) |

Now we turn to . Here we have , so . We also have . This means that the heat capacity decreases for . But we know that for , so the heat capacity must have a maximum at . Our goal in this section is to understand a little better what the function looks like in this region.

To compute the second term in (3.122) we need to understand both and how changes with as we approach from above. The first calculation is easy is we use our expression (3.117),

| (3.124) |

As from above, , a constant. All the subtleties lie in the remaining term, . After all, this is the quantity which is effectively vanishing for . What’s it doing at ? To figure this out is a little more involved. We start with our expression (3.115),

| (3.125) |

and we’ll ask what happens to the function as , keeping fixed. We know that exactly at , . But how does it approach this value? To answer this, it is actually simplest to look at the derivative , where

The reason for doing this is that diverges as and it is generally easier to isolate divergent parts of a function than some finite piece. Indeed, we can do this straightforwardly for by looking at the integral very close to , we can write

where, in the last line, we made the substution . So we learn that as , . But this is enough information to tell us how approaches its value at : it must be

for some constant . Inserting this into our equation (3.125) and rearranging, we find that as from above,

where, in the second line, we used the expression of the critical temperature (3.118). is some constant that we could figure out with a little more effort, but it won’t be important for our story. From the expression above, we can now determine as . We see that it vanishes linearly at .

Putting all this together, we can determine the expression for the heat capacity (3.122) when . We’re not interested in the coefficients, so we’ll package a bunch of numbers of order into a constant and the end result is

The first term above goes smoothly over to the expression (3.123) for when . But the second term is only present for . Notice that it goes to zero as , which ensures that the heat capacity is continuous at this point. But the derivative is not continuous. A sketch of the heat capacity is shown in the figure.

Functions in physics are usually nice and smooth. How did we end up with a discontinuity in the derivative? In fact, if we work at finite , strictly speaking everything is nice and smooth. There is a similar contribution to even at . We can see that by looking again at the expressions (3.119) and (3.120), which tell us

The difference is that while is of order one above , it is of order below . In the thermodynamic limit, , this results in the discontinuity that we saw above. This is a general lesson: phase transitions with their associated discontinuities can only arise in strictly infinite systems. There are no phase transitions in finite systems.

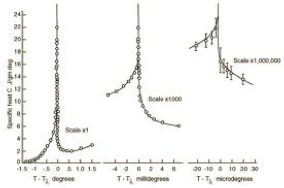

Superfluid Helium-4

A similar, but much more pronounced, discontinuity is seen in Helium-4 as it becomes a superfluid, a transition which occurs at 2.17 . The atom contains two protons, two neutrons and two electrons and is therefore a boson. (In contrast, Helium-3 contains just a single neutron and is a fermion). The experimental data for the heat capacity of Helium-4 is shown on the right. The successive graphs are zooming in on the phase transistion: the scales are (from left to right) Kelvin, milliKelvin and microKelvin. The discontinuity is often called the lambda transition on account of the shape of this graph.

There is a close connection between Bose-Einstein condensation described above and superfluids: strictly speaking a non-interacting Bose-Einstein condensate is not a superfluid but superfluidity is a consequence of arbitrarily weak repulsive interactions between the atoms. However, in He-4, the interactions between atoms are strong and the system cannot be described using the simple techniques developed above.

Something very similar to Bose condensation also occurs in superconductivity and superfluidity of Helium-3. Now the primary characters are fermions rather than bosons (electrons in the case of superconductivity). As we will see in the next section, fermions cannot condense. But they may form bound states due to interactions and these effective bosons can then undergo condensation.

3.6 Fermions

For our final topic, we will discuss the fermion gases. Our analysis will focus solely on non-interacting fermions. Yet this simple model provides a (surprisingly) good first approximation to a wide range of systems, including electrons in metals at low temperatures, liquid Helium-3 and white dwarfs and neutron stars.

Fermions are particles with -integer spin. By the spin-statistics theorem, the wavefunction of the system is required to pick up a minus sign under exchange of any particle,

As a corollory, the wavefunction vanishes if you attempt to put two identical fermions in the same place. This is a reflection of the Pauli exclusion principle which states that fermions cannot sit in the same state. We will see that the low-energy physics of a gas of fermions is entirely dominated by the exclusion principle.

We work again in the grand canonical ensemble. The grand partition function for a single state is very easy: the state is either occupied or it is not. There is no other option.

So, the grand partition function for all states is , from which we can compute the average number of particles in the system

where the average number of particles in the state is

| (3.126) |

This is the Fermi-Dirac distribution. It differs from the Bose-Einstein distribution only by the sign in the denominator. Note however that we had no convergence issues in defining the partition function. Correspondingly, the chemical potential can be either positive or negative for fermions.

3.6.1 Ideal Fermi Gas

We’ll look again at non-interacting, non-relativistic particles with . Since fermions necessarily have -integer spin, , there is always a degeneracy factor when counting the number of states given by

For example, electrons have spin and, correspondingly have a degeneracy of which just accounts for “spin up” and “spin down” states. We saw similar degeneracy factors when computing the density of states for photons (which have two polarizations) and phonons (which had three). For non-relativistic fermions, the density of states is

We’ll again use the notation of fugacity, . The particle number is

| (3.127) |

The average energy is

And the pressure is

| (3.128) |

At high temperatures, it is simple to repeat the steps of Section 3.5.2. (This is one of the questions on the problem sheet). Ony a few minus signs differ along the way and one again finds that for , the equation of state reduces to that of a classical gas,

| (3.129) |

Notice that the minus signs filter down to the final answer: the first quantum correction to a Fermi gas increases the pressure.

3.6.2 Degenerate Fermi Gas and the Fermi Surface

In the extreme limit , the Fermi-Dirac distribution becomes very simple: a state is either filled or empty.

It’s simple to see what’s going on here. Each fermion that we throw into the system settles into the lowest available energy state. These are successively filled until we run out of particles. The energy of the last filled state is called the Fermi energy and is denoted as . Mathematically, it is the value of the chemical potential at ,

| (3.130) |

Filling up energy states with fermions is just like throwing balls into a box. With one exception: the energy states of free particles are not localised in position space; they are localised in momentum space. This means that successive fermions sit in states with ever-increasing momentum. In this way, the fermions fill out a ball in momentum space. The momentum of the final fermion is called the Fermi momentum and is related to the Fermi energy in the usual way: . All states with wavevector are filled and are said to form the Fermi sea or Fermi sphere. Those states with lie on the edge of the Fermi sea. They are said to form the Fermi surface. The concept of a Fermi surface is extremely important in later applications to condensed matter physics.

We can derive an expression for the Fermi energy in terms of the number of particles in the system. To do this, we should appreciate that we’ve actually indulged in a slight abuse of notation when writing (3.130). In the grand canonical ensemble, and are independent variables: they’re not functions of each other! What this equation really means is that if we want to keep the average particle number in the system fixed (which we do) then as we vary we will have to vary to compensate. So a slightly clearer way of defining the Fermi energy is to write it directly in terms of the particle number

| (3.131) |

Or, inverting,

| (3.132) |

The Fermi energy sets the energy scale for the system. There is an equivalent temperature scale, . The high temperature expansion that resulted in the equation of state (3.129) is valid at temperatures . In contrast, temperatures are considered “low” temperatures for systems of fermions. Typically, these low temperatures do not have to be too low: for electrons in a metal, ; for electrons in a white dwarf, .

While is the energy of the last occupied state, the average energy of the system can be easily calculated. It is

| (3.133) |

Similarly, the pressure of the degenerate Fermi gas can be computed using (3.128),

| (3.134) |

Even at zero temperature, the gas has non-zero pressure, known as degeneracy pressure. It is a consequence of the Pauli exclusion principle and is important in the astrophysics of white dwarf stars and neutron stars. (We will describe this application in Section 3.6.5). The existence of this residual pressure at is in stark contrast to both the classical ideal gas (which, admittedly, isn’t valid at zero temperature) and the bosonic quantum gas.

3.6.3 The Fermi Gas at Low Temperature

We now turn to the low-temperature behaviour of the Fermi gas. As mentioned above, “low” means , which needn’t be particularly low in everyday terms. The number of particles and the average energy are given by,

| (3.135) |

and

| (3.136) |

where, for non-relativistic fermions, the density of states is

Our goal is to firstly understand how the chemical potential , or equivalently the fugacity , changes with temperature when is held fixed. From this we can determine how changes with temperature when is held fixed.

There are two ways to proceed. The first is a direct approach on the problem by Taylor expanding (3.135) and (3.136) for small . But it turns out that this is a little delicate because the starting point at involves the singular distribution shown on the left-hand side of Figure 22 and it’s easy for the physics to get lost in a morass of integrals. For this reason, we start by developing a heuristic understanding of how the integrals (3.135) and (3.136) behave at low temperatures which will be enough to derive the required results. We will then give the more rigorous expansion – sometimes called the Sommerfeld expansion – and confirm that our simpler derivation is indeed correct.

The Fermi-Dirac distribution (3.126) at small temperatures is sketched on the right-hand side of Figure 22. The key point is that only states with energy within of the Fermi surface are affected by the temperature. We want to insist that as we vary the temperature, the number of particles stays fixed which means that . I claim that this holds if, to leading order, the chemical potential is independent of temperature, so

| (3.137) |

Let’s see why this is the case. The change in particle number can be written as

There are two things going on in the step from the second line to the third. Firstly, we are making use of the fact that, for , the Fermi-Dirac distribution only changes significantly in the vicinity of as shown in the right-hand side of Figure 22. This means that the integral in the middle equation above is only receiving contributions in the vicinity of and we have used this fact to approximate the density of states with its value at . Secondly, we have used our claimed result (3.137) to replace the total derivative (which acts on the chemical potential) with the partial derivative (which doesn’t) and is replaced with its zero temperature value .

Explicitly differentiating the Fermi-Dirac distribution in the final line, we have

This integral vanishes because is odd around while the function is even. (And, as already mentioned, the integral only receives contributions in the vicinity of ).

Let’s now move on to compute the change in energy with temperature. This, of course, is the heat capacity. Employing the same approximations that we made above, we can write

However, this time we have not just replaced by , but instead Taylor expanded to include a term linear in . (The factor of comes about because . The first term in the square brackets vanishes by the same even/odd argument that we made before. But the term survives.

Writing , the integral becomes

where we’ve extended the range of the integral from to , safe in the knowledge that only the region near (or ) contributes anyway. More importantly, however, this integral only gives an overall coefficient which we won’t keep track of. The final result for the heat capacity is

There is a simple way to intuitively understand this linear behaviour. At low temperatures, only fermions within of are participating in the physics. The number of these particles is . If each picks up energy then the total energy of the system scales as , resulting in the linear heat capacity found above.

Finally, the heat capacity is often re-expressed in a slightly different form. Using (3.131) we learn that which allows us to write,

| (3.138) |

Heat Capacity of Metals

One very important place that we can apply the theory above it to metals. We can try to view the conduction electrons — those which are free to move through the lattice — as an ideal gas. At first glance, this seems very unlikely to work. This is because we are neglecting the Coulomb interaction between electrons. Nonetheless, the ideal gas approximation for electrons turns out to work remarkably well.

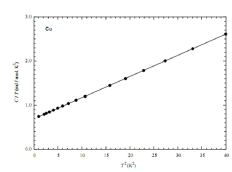

From what we’ve learned in this section, we would expect two contributions to the heat capacity of a metal. The vibrations of the lattice gives a phonon contribution which goes as (see equation (3.102)). If the conduction electrons act as an ideal gas, they should give a linear term. The low-temperature heat capacity can therefore be written as

Experimental data is usually plotted as vs since this gives a straight line which looks nice. The intercept with the axis tells us the electron contribution. The heat capacity for copper is shown in the figure.

We can get a rough idea of when the phonon and electron contributions to heat capacity are comparable. Equating (3.103) with (3.145), and writing , we have the the two contributions are equal when . Ballpark figures are and which tells us that we have to get down to temperatures of around or so to see the electron contribution.

For many metals, the coefficient of the linear heat capacity is fairly close to the ideal gas value (within, say, 20% or so). Yet the electrons in a metal are far from free: the Coulomb energy from their interations is at least as important as the kinetic energy. So why does the ideal gas approximation work so well? This was explained in the 1950s by Landau and the resulting theory — usually called Landau’s Fermi liquid theory — is the basis for our current understanding of electron systems.

3.6.4 A More Rigorous Approach: The Sommerfeld Expansion

The discussion in Section 3.6.3 uses some approximations but highlights the physics of the low-temperature fermi gas. For completeness, here we present a more rigorous derivation of the low-temperature expansion.

To start, we introduce some new notation for the particle number and energy integrals, (3.135) and (3.136). We write,

| (3.139) | |||||

and

| (3.140) | |||||

where is the familiar thermal wavelength and the functions are the fermionic analogs of defined in (3.116),

| (3.141) |

where it is useful to recall and (which follows, of course, from the property ). This function is an example of a polylogarithm and is sometimes written as .

Expanding

We will now derive the large expansion of , sometimes called the Sommerfeld expansion. The derivation is a little long. We begin by splitting the integral into two parts,

We now make a simple change of variable, for the first integral and for the second,

So far we have only massaged the integral into a useful form. We now make use of the approximation . Firstly, we note that the first integral has a suppression in the denominator. If we replace the upper limit of the integral by , the expression changes by a term of order which is a term we’re willing to neglect. With this approximation, we can combine the two integrals into one,

We now Taylor expand the numerator,

From which we get

We’re left with a fairly simple integral.

The integral in the last line is very simple: . We have only to do the sum. There are a number of ways to manipulate this. We chose to write

| (3.142) | |||||

This final sum is well known. It is . (The original proof of this, by Euler, proceeds by Taylor expanding , writing resulting sum as a product of roots, and then equating the term).

After all this work, we finally have the result we want. The low temperature expansion of is an expansion in ,

| (3.143) |

Back to Physics: Heat Capacity of a Fermi Gas Again

The expansion (3.143) is all we need to determine the leading order effects of temperature on the Fermi gas. The number of particles (3.139) can be expressed in terms of the chemical potential as

| (3.144) |

But the number of particles determines the Fermi energy through (3.132). For fixed particle number , we can therefore express the chemical potential at finite temperature in terms of the Fermi energy. After a small amount of algebra, we find

We see that the chemical potential is a maximum at and decreases at higher . This is to be expected: recall that by the time we get to the classical gas, the chemical potential must be negative. Moreover, note that the leading order correction is quadratic in temperature rather than linear, in agreement with our earlier claim (3.137).

The energy density can be computed from (3.140) and (3.143). It is

Our real interest is in the heat capacity. However, with fixed particle number , the chemical potential also varies with temperature at quadratic order. We can solve this problem by dividing by (3.144) to get

Now the only thing that depends on temperature on the right-hand side is itself. From this we learn that the heat capacity of a low temperature Fermi gas is linear in ,

| (3.145) |

But we now have the correct coefficient that completes our earlier result (3.138).

3.6.5 White Dwarfs and the Chandrasekhar limit

When stars exhaust their fuel, the temperature and they have to rely on the Pauli exclusion principle to support themselves through the degeneracy pressure (3.134). Such stars supported by electron degeneracy pressure are called white dwarfs. In addition to the kinetic energy of fermions given in (3.132) the system has gravitational energy. If we make the further assumption that the density in a star of radius is uniform, then

where is Newton’s constant. In the problem set, you will minimise to find the relationship between the radius and mass of the star,

This is unusual. Normally if you throw some stuff on a pile, the pile gets bigger. Not so for stars supported by degeneracy pressure. The increased gravitational attraction wins and causes them to shrink.

As the star shrinks, the Fermi energy (3.132) grows. Eventually it becomes comparable to the electron mass and our non-relativistic approximation breaks down. We can re-do our calculations using the relativistic formula for the density of states (3.93) (which needs to be multiplied by a factor of 2 to account for the spin degeneracy). In the opposite regime of ultra-relativistic electrons, where , then we can expand the density of states as

from which we can determine the kinetic energy due to fermions, replacing the non-relativistic result (3.133) by

The Fermi energy can be expressed in terms of the particle number by

The total energy of the system is then given by,

where is the proton mass (and we’re taking as a good approximation to the full mass of the star). If the term above is positive then the star once again settles into a minimum energy state, where the term balances the term that grows linearly in . But if the term is negative, we have a problem: the star is unstable to gravitational collapse and will shrink to smaller and smaller values of . This occurs when the mass exceeds the Chandrasekhar limit, . Neglecting factors of 2 and , this limit is

| (3.146) |

This is roughly times the mass of the Sun. Stars that exceed this bound will not end their life as white dwarfs. Their collapse may ultimately be stopped by degeneracy pressure of neutrons so that they form a neutron star. Or it may not be stopped at all in which case they form a black hole.

The factor in brackets in (3.146) is an interesting mix of fundamental constants associated to quantum theory, relativity and gravity. In this combination, they define a mass scale called the Planck mass,

When energy densities reach the Planck scale, quantum gravity effects are important meaning that we have to think of quantising spacetime itself. We haven’t quite reached this limit in white dwarf stars. (We’re shy by about 25 orders of magnitude or so!). Nonetheless the presence of the Planck scale in the Chandra limit is telling us that both quantum effects and gravitational effects are needed to understand white dwarf stars: the matter is quantum; the gravity is classical.

3.6.6 Pauli Paramagnetism

“With heavy heart, I have decided that Fermi-Dirac, not Einstein, is the correct statistics, and I have decided to write a short note on paramagnetism”

Wolfgang Pauli, in a letter to Schrödinger, reluctantly admitting that electrons are fermions

We now describe a gas of electrons in a background magnetic field . There are two effects that are important: the Lorentz force on the motion of electrons and the coupling of the electron spin to the magnetic field. We first discuss the spin coupling and postpone the Lorentz force for the next section.

An electron can have two spin states, “up” and “down”. We’ll denote the spin state by a discrete label: for spin up; for spin down. In a background magnetic field , the kinetic energy picks up an extra term

| (3.147) |

where is the Bohr magneton. (It has nothing to do with the chemical potential. Do not confuse them!)

Since spin up and spin down electrons have different energies, their occupation numbers differ. Equation (3.139) is now replaced by

Our interest is in computing the magnetization, which is a measure of how the energy of the system responds to a magnetic field,

| (3.148) |

Since each spin has energy (3.147), the magnetization is simply the difference in the in the number of up spins and down spins,

At high temperatures, and suitably low magnetic fields, we can approximate as , so that

We can eliminate the factor of in favour of the particle number,

From which we can determine the high temperature magnetization,

This is the same result that you computed in the first problem sheet using the simple two-state model. We see that, once again, at high temperatures the quantum statistics are irrelevant. The magnetic susceptibility is a measure of how easy it is to magnetise a substance. It is defined as

| (3.149) |

Evaluating in the limit of vanishing magnetic field, we find that the magnetization is inversely proportional to the temperature,

This behaviour is known as Curie’s law. (Pierre, not Marie).

The above results hold in the high temperature limit. In contrast, at low temperatures the effects of the Fermi surface become important. We need only keep the leading order term in (3.143) to find

where we’re written the final result in the limit and expanded to linear order in . We could again replace some of the factors for the electron number . However, we can emphasise the relevant physics slightly better if we write the result above in terms of the density of states (3.108). Including the factor of 2 for spin degeneracy, we have

| (3.150) |

We see that at low temperatures, the magnetic susceptibility no longer obeys Curie’s law, but saturates to a constant

What’s happening here is that most electrons are embedded deep within the Fermi surface and the Pauli exclusion principle forbids them from flipping their spins in response to a magnetic field. Only those electrons that sit on the Fermi surface itself — all of them — have the luxury to save energy by aligning their spins with the magnetic field.

Notice that . Such materials are called paramagnetic: in the presence of a magnetic field, the magnetism increases. Materials that are paramagnetic are attracted to applied magnetic fields. The effect described above is known as Pauli paramagnetism.

3.6.7 Landau Diamagnetism

For charged fermions, such as electrons, there is a second consequence of placing them in a magnetic field: they move in circles. The Hamiltonian describing a particle of charge in a magnetic field is,

This has an immediate consequence: a magnetic field cannot affect the statistical physics of a classical gas! This follows simply from substituting the above Hamiltonian into the classical partition function (2.49). A simple shift of variables, shows that the classical partition function does not depend on . This result (which was also mentioned in a slightly different way in the Classical Dynamics course) is known as the Bohr-van Leeuwen theorem: it states that there can be no classical magnetism.

Let’s see how quantum effects change this conclusion. Our first goal is to understand the one-particle states for an electron in a constant background magnetic field. This is a problem that you will also solve in the Applications of Quantum Mechanics course. It is the problem of Landau levels.

Landau Levels

We will consider an electron moving in a constant magnetic field pointing in the direction: . There are many different ways to pick such that gives the right magnetic field. These are known as gauge choices. Each choice will give rise to the same physics but, usually, very different equations in the intervening steps. Here we make the following gauge choice,

| (3.151) |

Let’s place our particle in a cubic box with sides of length . Solutions to the Schrödinger equation are simply plane waves in the and directions,

But the wavefunction must satisfy,

| (3.152) |

But this is a familiar equation! If we define the cyclotron frequency,

Then we can rewrite (3.152) as

where . This is simply the Schrödinger equation for a harmonic oscillator, jiggling with frequency and situated at position .

One might wonder why the harmonic oscillator appeared. The cyclotron frequency is the frequency at which electrons undergo classical Larmor orbits, so that kind of makes sense. But why have these become harmonic oscillators sitting at positions which depend on ? The answer is that there is no deep meaning to this at all! it is merely an artefact of our gauge choice (3.151). Had we picked a different giving rise to the same , we would find ourselves trying to answer a different question! The lesson here is that when dealing with gauge potentials, the intermediate steps of a calculation do not always have physical meaning. Only the end result is important. Let’s see what this is. The energy of the particle is

where is the energy of the harmonic oscillator,

These discrete energy levels are known as Landau levels. They are highly degenerate. To see this, note that is quantized in unit of . This means that we can have a harmonic oscillator located every . The number of such oscillators that we can fit into the box of size is . This is the degeneracy of each level,

where is the total flux through the system and is known as the flux quantum. (Note that the result above does not include a factor of 2 for the spin degeneracy of the electron).

Back to the Diamagnetic Story

We can now compute the grand partition function for non-interacting electrons in a magnetic field. Including the factor of 2 from electron spin, we have

To perform the sum, we use the Euler summation formula which states that for any function ,

We’ll apply the Euler summation formula to the function,

So the grand partition function becomes

| (3.153) | |||||

The first term above does not depend on . In fact it is simply a rather perverse way of writing the partition function of a Fermi gas when . However, our interest here is in the magnetization which is again defined as (3.148). In the grand canonical ensemble, this is simply

The second term in (3.153) is proportional to (which is hiding in the term). Higher order terms in the Euler summation formula will carry higher powers of and, for small , the expression above will suffice.

At , the integrand is simply for and zero otherwise. To compare to Pauli paramagnetism, we express the final result in terms of the Bohr magneton . We find

This is comparable to the Pauli contribution (3.150). But it has opposite sign. Substances whose magnetization is opposite to the magnetic field are called diamagnetic: they are repelled by applied magnetic fields. The effect that we have derived above is known as Landau diamagnetism.