2 My First Path Integral

It’s now time to understand a little better how to deal with the path integral

$$ Z = \int \mathcal{D}m(\mathbf{x})\; e^{-\beta F[m(\mathbf{x})]} \qquad (2.34) $$

Our strategy – at least for now – will be to work in situations where the saddle point dominates, with the integral giving small corrections to this result. In this regime, we can think of the integral as describing the thermal fluctuations of the order parameter around the equilibrium configuration determined by the saddle point. As we will see, this approach fails to work at the critical point, which is the regime we’re most interested in. We will then have to search for other techniques, which we will describe in Section 3.

Preparing the Scene

Before we get going, we’re going to change notation. First, we will change the name of our main character and write the magnetisation as

$$ m(\mathbf{x}) \;\longrightarrow\; \phi(\mathbf{x}) $$

If you want a reason for this, I could tell you that the change of name is in deference to universality and the fact that the field could describe many things, not just magnetisation. But the real reason is simply that fields in path integrals should have names like $\phi$. (This is especially true in quantum field theory where $m$ is reserved for the mass of the particle.)

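We start by setting $B = 0$; we’ll turn $B$ back on in Section 2.2. The free energy is then

$$ F[\phi(\mathbf{x})] = \int d^dx\left[\frac{1}{2}\alpha_2(T)\phi^2 + \frac{1}{4}\alpha_4(T)\phi^4 + \frac{1}{2}\gamma(T)(\nabla\phi)^2 + \ldots\right] $$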

Roughly speaking, path integrals are trivial to do if $F[\phi]$ is quadratic in $\phi$, and possible to do if the higher order terms in $F[\phi]$ give small corrections. If the higher order terms in $F[\phi]$ are important, then the path integral is typically impossible without the use of numerics. Here we’ll start with the trivial, building up to the “possible” in later chapters.

To this end, throughout the rest of this chapter we will restrict attention to a free energy that contains no terms higher than quadratic in $\phi$. We have to be a little careful about whether we sit above or below the critical temperature. When $T > T_c$, things are easy and we simply throw away all higher terms and work with

$$ F[\phi(\mathbf{x})] = \int d^dx\left[\frac{1}{2}\gamma\,\nabla\phi\cdot\nabla\phi + \frac{1}{2}\mu^2\phi^2\right] \qquad (2.35) $$

where $\mu^2 = \alpha_2(T)$ is positive.

A word of caution. We are ignoring the quartic terms purely on grounds of expediency: this makes our life simple. However, these terms become increasingly important as $\alpha_2 \to 0$ and we approach the critical point. This means that nothing we are about to do can be trusted near the critical point. Nonetheless, we will be able to extract some useful intuition from what follows. We will then be well armed to study critical phenomena in Section 3.

When $T < T_c$, and $\alpha_2 < 0$, we need to do a little more work. Now the saddle point does not lie at $\phi = 0$, but rather at $\langle\phi\rangle = \pm m_0$ given in (1.31). In particular, it’s crucial that we keep the quartic term because this rescues the field from the upturned potential it feels at the origin.

However, it’s straightforward to take this into account. We simply compute the path integral about the appropriate minimum by writing

$$ \phi(\mathbf{x}) = \langle\phi\rangle + \delta\phi(\mathbf{x}) \qquad (2.36) $$

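Substituting this into the free energy gives

$$ F[\phi] = F[m_0] + \int d^dx\left[\frac{1}{2}\alpha_2'\,\delta\phi^2 + \frac{1}{2}\gamma(\nabla\delta\phi)^2 + \ldots\right] \qquad (2.37) $$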

where now the $\ldots$ include terms of order $\delta\phi^3$ and $\delta\phi^4$ and higher, all of which we’ve truncated. Importantly, there are no terms linear in $\delta\phi$. In fact, this was guaranteed to happen: the vanishing of the linear terms is equivalent to the requirement that the equation of motion (1.30) is obeyed. The new quadratic coefficient is

$$ \alpha_2' = \alpha_2 + 3\alpha_4 m_0^2 = -2\alpha_2 \qquad (2.38) $$

In particular, $\alpha_2' > 0$.
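As a quick sanity check on (2.38), the short Python sketch below evaluates the first and second derivatives of the potential part of the free energy, $\frac{1}{2}\alpha_2\phi^2 + \frac{1}{4}\alpha_4\phi^4$, at $\phi = m_0 = \sqrt{-\alpha_2/\alpha_4}$. The numerical values of $\alpha_2 < 0$ and $\alpha_4 > 0$ are arbitrary choices made for illustration, and the helper name is hypothetical; the gradient term plays no role here because $m_0$ is constant.

```python
import math

def potential_derivatives(phi, alpha2, alpha4):
    """First and second derivatives of V(phi) = alpha2 phi^2 / 2 + alpha4 phi^4 / 4."""
    first = alpha2 * phi + alpha4 * phi**3
    second = alpha2 + 3 * alpha4 * phi**2
    return first, second

# Illustrative (assumed) coefficients below T_c: alpha2 < 0, alpha4 > 0.
alpha2, alpha4 = -0.7, 1.3
m0 = math.sqrt(-alpha2 / alpha4)          # the saddle point quoted from (1.31)

linear, alpha2_prime = potential_derivatives(m0, alpha2, alpha4)
print(f"linear coefficient: {linear:.1e}")                # vanishes at the saddle
print(f"alpha2' = {alpha2_prime:.6f}, -2*alpha2 = {-2 * alpha2:.6f}")
```

The vanishing linear coefficient is the statement that $m_0$ solves the saddle point equation, while the second derivative is the $\alpha_2'$ multiplying $\frac{1}{2}\delta\phi^2$ in (2.37).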

Practically, this means that we take the calculations that we do at $T > T_c$ with the free energy (2.35) and trivially translate them into calculations at $T < T_c$. We just need to remember that we’re working with a shifted field, and that we should take $\mu^2 = \alpha_2' = -2\alpha_2$. Once again, the same caveats hold: our calculations should not be trusted near the critical point $\mu^2 = 0$.

2.1 The Thermodynamic Free Energy Revisited

For our first application of the path integral, we will compute something straightforward and a little bit boring: the thermodynamic free energy. There will be a little insight to be had from the result of this, although the main purpose of going through these steps is to prepare us for what’s to come.

We’ve already found some contributions to the thermodynamic free energy. There is the constant term $F_0(T)$ and, if we’re working at $T < T_c$, the additional contribution $F[m_0]$ in (2.37). Here we are interested in further contributions to the thermodynamic free energy, coming from fluctuations of the field. To keep the formulae simple, we will ignore these two earlier contributions; you can always put them back in if you please.

Throughout this calculation, we’ll set $T > T_c$ so we’re working with the free energy (2.35). There is a simple trick to compute the partition function when the free energy is quadratic: we work in Fourier space. We write the Fourier transform of the magnetisation field as

$$ \phi_{\mathbf{k}} = \int d^dx\; e^{-i\mathbf{k}\cdot\mathbf{x}}\,\phi(\mathbf{x}) $$

Since our original field is real, the Fourier modes obey $\phi^\star_{\mathbf{k}} = \phi_{-\mathbf{k}}$.

The $\mathbf{k}$ are wavevectors. Following the terminology of quantum mechanics, we will refer to $\mathbf{k}$ as the momentum. At this stage, we should remember something about our roots. Although we’re thinking of $\phi(\mathbf{x})$ as a continuous field, ultimately it arose from a lattice and so can’t vary on very small distance scales. This means that the Fourier modes must all vanish for suitably high momentum,

$$ \phi_{\mathbf{k}} = 0 \qquad\text{for}\qquad |\mathbf{k}| > \Lambda $$

Here $\Lambda$ is called the ultra-violet (UV) cut-off. In the present case, we can think of $\Lambda \sim \pi/a$, with $a$ the distance between the boxes that we used to coarse grain when first defining $\phi(\mathbf{x})$.

We can recover our original field by the inverse Fourier transform. It’s useful to have two different scenarios in mind. In the first, we place the system in a finite spatial volume $V = L^d$. In this case, the momenta take discrete values,

$$ \mathbf{k} = \frac{2\pi}{L}\,\mathbf{n}, \qquad \mathbf{n}\in\mathbb{Z}^d \qquad (2.39) $$

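and the inverse Fourier transform is

$$ \phi(\mathbf{x}) = \frac{1}{V}\sum_{\mathbf{k}} e^{i\mathbf{k}\cdot\mathbf{x}}\,\phi_{\mathbf{k}} \qquad (2.40) $$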

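Alternatively, if we send $L \to \infty$, the sum over $\mathbf{k}$ modes becomes an integral and we have

$$ \phi(\mathbf{x}) = \int\frac{d^dk}{(2\pi)^d}\; e^{i\mathbf{k}\cdot\mathbf{x}}\,\phi_{\mathbf{k}} \qquad (2.41) $$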

In what follows, we’ll jump backwards and forwards between these two forms. Ultimately, we will favour the integral form. But there are times when it will be simpler to think of the system in a finite volume as it will make us less queasy about some of the formulae we’ll encounter.

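For now, let’s work with the form (2.41). We substitute this into the free energy to find

$$ F[\phi] = \frac{1}{2}\int\frac{d^dk}{(2\pi)^d}\,\frac{d^dk'}{(2\pi)^d}\,d^dx\;\left(-\gamma\,\mathbf{k}\cdot\mathbf{k}' + \mu^2\right)\phi_{\mathbf{k}}\,\phi_{\mathbf{k}'}\;e^{i(\mathbf{k}+\mathbf{k}')\cdot\mathbf{x}} $$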

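The integral over $\mathbf{x}$ is now straightforward and gives us a delta function

$$ \int d^dx\; e^{i(\mathbf{k}+\mathbf{k}')\cdot\mathbf{x}} = (2\pi)^d\,\delta^d(\mathbf{k}+\mathbf{k}') $$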

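and the free energy takes the simple form

$$ F[\phi] = \frac{1}{2}\int\frac{d^dk}{(2\pi)^d}\left(\gamma k^2+\mu^2\right)\phi_{\mathbf{k}}\,\phi_{-\mathbf{k}} = \frac{1}{2}\int\frac{d^dk}{(2\pi)^d}\left(\gamma k^2+\mu^2\right)|\phi_{\mathbf{k}}|^2 \qquad (2.42) $$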

Now we can see the benefit of working in Fourier space: at quadratic order, the free energy decomposes into individual $\mathbf{k}$ modes. This is because the Fourier basis is the eigenbasis of $-\gamma\nabla^2 + \mu^2$, allowing us to diagonalise this operator.
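The diagonalisation can be seen explicitly in a finite-volume toy model. The sketch below assumes a one-dimensional periodic lattice with unit spacing, on which $\nabla\phi$ is replaced by the forward difference $\phi_{x+1}-\phi_x$ (a discretisation chosen for illustration, not taken from the text; the function names are hypothetical). It compares the real-space free energy with a sum over decoupled Fourier modes, in which $\gamma k^2$ becomes the lattice expression $\gamma(2 - 2\cos k)$ and the factor $1/N$ plays the role of the $1/V$ in (2.40).

```python
import cmath
import math
import random

def dft(phi):
    """Plain O(N^2) discrete Fourier transform: phi_k = sum_x exp(-i k x) phi_x."""
    n = len(phi)
    return [sum(phi[x] * cmath.exp(-2j * math.pi * m * x / n) for x in range(n))
            for m in range(n)]

def free_energy_real_space(phi, gamma, mu2):
    """Lattice analogue of (2.35): forward-difference gradient, periodic, spacing a = 1."""
    n = len(phi)
    return sum(0.5 * gamma * (phi[(x + 1) % n] - phi[x]) ** 2 + 0.5 * mu2 * phi[x] ** 2
               for x in range(n))

def free_energy_fourier_space(phi, gamma, mu2):
    """The same number written as a sum over decoupled modes, cf. (2.42) with (2.40)."""
    n = len(phi)
    total = 0.0
    for m, phi_k in enumerate(dft(phi)):
        k = 2 * math.pi * m / n
        k_hat_sq = 2 - 2 * math.cos(k)          # lattice stand-in for k^2
        total += 0.5 * (gamma * k_hat_sq + mu2) * abs(phi_k) ** 2 / n
    return total

random.seed(0)
phi = [random.gauss(0.0, 1.0) for _ in range(32)]   # an arbitrary field configuration
e_real = free_energy_real_space(phi, gamma=1.5, mu2=0.3)
e_fourier = free_energy_fourier_space(phi, gamma=1.5, mu2=0.3)
print(e_real, e_fourier)                            # equal up to rounding
```

Each term in the Fourier sum involves a single mode, which is exactly the decoupling that makes the path integral below tractable.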

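To perform the functional integral, we also need to change the measure. Recall that the path integral was originally an integral over $\phi(\mathbf{x})$ for each value of $\mathbf{x}$ labelling the position of a box. Now it is an integral over all Fourier modes, which we write as

$$ \int\mathcal{D}\phi(\mathbf{x}) = \prod_{\mathbf{k}}\left[\mathcal{N}\int d\phi_{\mathbf{k}}\,d\phi^\star_{\mathbf{k}}\right] \qquad (2.43) $$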

where we should remember that $\phi^\star_{\mathbf{k}} = \phi_{-\mathbf{k}}$ because $\phi(\mathbf{x})$ is real. I’ve included a normalisation constant $\mathcal{N}$ in this measure. I’ll make no attempt to calculate this and, ultimately, it won’t play any role because, having computed the partition function, the first thing we do is take the log and differentiate. At this point, $\mathcal{N}$ will drop out. In later formulae, we’ll simply ignore it. But it’s worth keeping it in for now.

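Our path integral is now

$$ Z = \prod_{\mathbf{k}}\mathcal{N}\int d\phi_{\mathbf{k}}\,d\phi^\star_{\mathbf{k}}\;\exp\left(-\frac{\beta}{2}\int\frac{d^dk}{(2\pi)^d}\left(\gamma k^2+\mu^2\right)|\phi_{\mathbf{k}}|^2\right) $$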

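If this still looks daunting, we just need to recall that in finite volume, the integral in the exponent is really a sum over discrete momentum values,

$$ \begin{aligned} Z &= \prod_{\mathbf{k}}\mathcal{N}\int d\phi_{\mathbf{k}}\,d\phi^\star_{\mathbf{k}}\;\exp\left(-\frac{\beta}{2V}\sum_{\mathbf{k}}\left(\gamma k^2+\mu^2\right)|\phi_{\mathbf{k}}|^2\right) \\ &= \prod_{\mathbf{k}}\left[\mathcal{N}\int d\phi_{\mathbf{k}}\,d\phi^\star_{\mathbf{k}}\;\exp\left(-\frac{\beta}{2V}\left(\gamma k^2+\mu^2\right)|\phi_{\mathbf{k}}|^2\right)\right] \end{aligned} $$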

Note that the placement of brackets shifted between the two lines, because the sum in the exponent got absorbed into the overall product. If this is confusing, it might be worth comparing what we’ve done above to a simple integral of the form $\int dx\,dy\; e^{-x^2-y^2} = \left(\int dx\; e^{-x^2}\right)\left(\int dy\; e^{-y^2}\right)$.

We’re left with something very straightforward: it’s simply a bunch of decoupled Gaussian integrals, one for each value of $\mathbf{k}$. Recall that the Gaussian integral over a single variable is given by

$$ \int_{-\infty}^{\infty} dx\; e^{-x^2/2a} = \sqrt{2\pi a} \qquad (2.44) $$
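For readers who like to see (2.44) numerically, here is a minimal midpoint-rule check in Python. The integration window `half_width` and the number of steps are arbitrary assumptions, chosen so that the neglected tails are utterly negligible for the values of $a$ tested.

```python
import math

def gaussian_integral(a, half_width=50.0, steps=20_000):
    """Midpoint-rule estimate of the integral of exp(-x^2 / (2a)) over the real line."""
    h = 2 * half_width / steps
    total = 0.0
    for i in range(steps):
        x = -half_width + (i + 0.5) * h
        total += math.exp(-x * x / (2 * a))
    return total * h

for a in (0.5, 1.0, 3.0):
    print(a, gaussian_integral(a), math.sqrt(2 * math.pi * a))
```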

Applying this for each $\mathbf{k}$, we have our expression for the path integral

$$ Z = \prod_{\mathbf{k}}\mathcal{N}\sqrt{\frac{2\pi TV}{\gamma k^2+\mu^2}} $$

where the square root is there, despite the fact that we’re integrating over complex $\phi_{\mathbf{k}}$, because $\phi_{-\mathbf{k}} = \phi^\star_{\mathbf{k}}$ is not an independent variable. Note that we have a product over all $\mathbf{k}$. In finite volume, where the possible $\mathbf{k}$ are discrete, there’s nothing fishy going on. But as we go to infinite volume, this will become a product over a continuous variable $\mathbf{k}$.

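We can now compute the contribution of these thermal fluctuations to the thermodynamic free energy. The free energy per unit volume is given by $F_{\text{thermal}}/V = -\frac{T}{V}\log Z$ or,

$$ \frac{F_{\text{thermal}}}{V} = -\frac{T}{2V}\sum_{\mathbf{k}}\log\left(\frac{2\pi TV\mathcal{N}^2}{\gamma k^2+\mu^2}\right) $$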

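We can now revert to the integral over $\mathbf{k}$, rather than the sum, by writing

$$ \frac{F_{\text{thermal}}}{V} = -\frac{T}{2}\int\frac{d^dk}{(2\pi)^d}\;\log\left(\frac{2\pi TV\mathcal{N}^2}{\gamma k^2+\mu^2}\right) $$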

This final equation might make you uneasy since an explicit factor of the volume $V$ remains in the argument, but we’ve sent $V \to \infty$ to convert from $\sum_{\mathbf{k}}$ to $\int d^dk$. At this point, the normalisation factor $\mathcal{N}$ will ride to the rescue. However, as advertised previously, none of these issues are particularly important since they drop out when we compute physical quantities. Let’s look at the simplest example.
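The replacement of $\frac{1}{V}\sum_{\mathbf{k}}$ by $\int d^dk/(2\pi)^d$ can be checked in a toy setting. The sketch below assumes $d = 1$, a box of length $L$, a sharp cut-off $\Lambda = \pi$ (lattice spacing $a = 1$) and sample values of $\gamma$ and $\mu^2$; it compares the mode sum of $\log(\gamma k^2 + \mu^2)$, the $\mathbf{k}$-dependent part of the logarithm above, with the corresponding integral. The function names are hypothetical.

```python
import math

def mode_sum_per_volume(f, L, cutoff):
    """(1/L) times the sum of f(k) over the allowed momenta k = 2 pi n / L up to the cut-off."""
    n_max = int(cutoff * L / (2 * math.pi))
    return sum(f(2 * math.pi * n / L) for n in range(-n_max, n_max + 1)) / L

def mode_integral(f, cutoff, steps=100_000):
    """Midpoint estimate of the integral of f(k) dk / (2 pi) over -cutoff < k < cutoff."""
    h = 2 * cutoff / steps
    return sum(f(-cutoff + (i + 0.5) * h) for i in range(steps)) * h / (2 * math.pi)

gamma, mu2 = 1.0, 0.5          # illustrative values
cutoff = math.pi               # Lambda ~ pi / a with a = 1

def f(k):
    return math.log(gamma * k * k + mu2)

exact = mode_integral(f, cutoff)
for L in (10, 100, 1000):
    print(L, mode_sum_per_volume(f, L, cutoff), exact)
```

The discrepancy shrinks roughly like $1/L$, coming mostly from how the discrete momenta sit relative to the sharp cut-off.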

2.1.1 The Heat Capacity

Our real interest lies in the heat capacity per unit volume, $c = C/V$. Specifically, we would like to understand the temperature dependence of the heat capacity. This is given by (1.15),

$$ c = \frac{\beta^2}{V}\frac{\partial^2}{\partial\beta^2}\log Z $$

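The derivatives hit both the factor of $T$ in the numerator, and any $T$ dependence in the coefficients $\gamma$ and $\mu^2$. For simplicity, let’s work at $T > T_c$. We’ll take $\gamma$ constant and $\mu^2 = T - T_c$. A little bit of algebra shows that the contribution to the heat capacity from the fluctuations is given by

$$ c = \frac{1}{2}\int\frac{d^dk}{(2\pi)^d}\left[1 - \frac{2T}{\gamma k^2+\mu^2} + \frac{T^2}{(\gamma k^2+\mu^2)^2}\right] \qquad (2.45) $$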

The first of these terms has a straightforward interpretation: it is the usual “$\frac{1}{2}k_B$” per degree of freedom that we expect from equipartition, albeit with $k_B = 1$. (This can be traced to the original $\beta$ in $e^{-\beta F}$.)
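The “little bit of algebra” behind (2.45) can be checked mode by mode. The sketch below (illustrative values of $\gamma$ and $T_c$; function names hypothetical) takes one mode’s contribution to $\log Z$, namely $\frac{1}{2}\log\big(T/(\gamma k^2 + \mu^2)\big)$ with $\mu^2 = T - T_c$, applies $\beta^2\,\partial^2/\partial\beta^2$ by central finite differences, and compares the result with the integrand of (2.45).

```python
import math

def log_z_per_mode(beta, k, gamma, Tc):
    """One mode's contribution to log Z: (1/2) log(T / (gamma k^2 + mu^2)), with mu^2 = T - Tc.

    The constant factor 2 pi V N^2 inside the log is dropped; it does not
    survive two derivatives with respect to beta.
    """
    T = 1.0 / beta
    return 0.5 * math.log(T / (gamma * k * k + T - Tc))

def heat_capacity_integrand_numeric(T, k, gamma, Tc, h=1e-4):
    """beta^2 d^2/dbeta^2 of log_z_per_mode, via a central finite difference in beta."""
    beta = 1.0 / T
    second = (log_z_per_mode(beta + h, k, gamma, Tc)
              - 2 * log_z_per_mode(beta, k, gamma, Tc)
              + log_z_per_mode(beta - h, k, gamma, Tc)) / h**2
    return beta**2 * second

def heat_capacity_integrand_formula(T, k, gamma, Tc):
    """Integrand of (2.45): (1/2) [1 - 2T/A + T^2/A^2] with A = gamma k^2 + mu^2."""
    A = gamma * k * k + T - Tc
    return 0.5 * (1 - 2 * T / A + T**2 / A**2)

gamma, Tc = 1.0, 1.0                     # illustrative values; we need T > Tc
for T, k in [(1.5, 0.3), (2.0, 1.0), (1.1, 2.5)]:
    print(T, k, heat_capacity_integrand_numeric(T, k, gamma, Tc),
          heat_capacity_integrand_formula(T, k, gamma, Tc))
```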

The other two terms come from the temperature dependence in $\mu^2$. What happens next depends on the dimension $d$. Let’s look at the middle term, proportional to

$$ \int_0^\Lambda dk\;\frac{k^{d-1}}{\gamma k^2+\mu^2} $$

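For $d \geq 2$, this integral diverges as we remove the UV cut-off $\Lambda$. In contrast, when $d = 1$ it is finite as $\Lambda \to \infty$. When it is finite, we can easily determine the leading order temperature dependence of the integral by rescaling variables. We learn that

$$ \int_0^\Lambda dk\;\frac{k^{d-1}}{\gamma k^2+\mu^2} \sim \begin{cases} \Lambda^{d-2} & d > 2 \\ \mu^{d-2} & d = 1 \end{cases} \qquad (2.46) $$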

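When $d = 2$, the $\Lambda^{d-2}$ term should be replaced by a logarithm. Similarly, the final term in (2.45) is proportional to

$$ \int_0^\Lambda dk\;\frac{k^{d-1}}{(\gamma k^2+\mu^2)^2} \sim \begin{cases} \Lambda^{d-4} & d > 4 \\ \mu^{d-4} & d < 4 \end{cases} $$

again, with a logarithm when $d = 4$.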
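The scaling claims in (2.46) and in the analogous estimate for the final term of (2.45) can be probed numerically wherever the integrals converge without a cut-off. The sketch below is plain Python; the map $k = s/(1-s)$, the step count, the sample value of $\mu$ and the function names are assumptions made for illustration. It estimates $d\log I/d\log\mu$ and compares it with the predicted exponents $d-2$ and $d-4$.

```python
import math

def radial_integral(d, power, mu, gamma=1.0, steps=50_000):
    """Midpoint estimate of the integral over 0 < k < infinity of k^(d-1) / (gamma k^2 + mu^2)^power.

    The substitution k = s / (1 - s) maps [0, inf) onto [0, 1). Only use this
    when the integral converges with no cut-off, i.e. d < 2 * power.
    """
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        s = (i + 0.5) * h
        k = s / (1.0 - s)
        jacobian = 1.0 / (1.0 - s) ** 2
        total += k ** (d - 1) / (gamma * k * k + mu * mu) ** power * jacobian
    return total * h

def log_slope(d, power, mu, ratio=2.0):
    """Estimate d log I / d log mu from the integral at mu and at ratio * mu."""
    return math.log(radial_integral(d, power, ratio * mu) / radial_integral(d, power, mu)) / math.log(ratio)

print(log_slope(1, 1, 0.05))   # middle term, d = 1: expect d - 2 = -1
print(log_slope(2, 2, 0.05))   # final term,  d = 2: expect d - 4 = -2
print(log_slope(3, 2, 0.05))   # final term,  d = 3: expect d - 4 = -1
```

The divergent cases, where the $\Lambda$-dependence takes over, are deliberately left out: this quadrature has no cut-off.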

What should we take from this? When $d > 4$, the leading contribution to the heat capacity involves a temperature independent constant, $\Lambda^d$, albeit a large one. This constant will be the same on both sides of the transition. (The heat capacity itself is not quite temperature independent as it comes with the factor of $T^2$ from the numerator of (2.45), but this doesn’t do anything particularly dramatic.) In contrast, when $d < 4$, the leading order contribution to the heat capacity is proportional to $\mu^{d-4}$. And, this leads to something more interesting.

To see this interesting behaviour, we have to do something naughty. Remember that our calculation above isn’t valid near the critical point, $\mu^2 = 0$, because we’ve ignored the quartic term in the free energy. Suppose, however, that we throw caution to the wind and apply our result here anyway. We learn that, for $d < 4$, the heat capacity diverges at the critical point. The leading order behaviour is

$$ c \sim \mu^{d-4} \sim (T-T_c)^{(d-4)/2} \qquad (2.47) $$

Written as $c \sim |T-T_c|^{-\alpha}$, this corresponds to $\alpha = (4-d)/2$. This is to be contrasted with our mean field result which gives $\alpha = 0$.

As we’ve stressed, we can’t trust the result (2.47). And, indeed, this is not the right answer for the critical exponent. But it does give us some sense for how the mean field results can be changed by the path integral. It also gives a hint for why the critical exponents are not affected when $d \geq 4$, which is the upper critical dimension.

2.2 Correlation Functions

The essential ingredient of Landau-Ginzburg theory – one that was lacking in the earlier Landau approach – is the existence of spatial structure. With the local order parameter $\phi(\mathbf{x})$, we can start to answer questions about how the magnetisation varies from point to point.

Such spatial variations exist even in the ground state of the system. Mean field theory – which is synonymous with the saddle point of the path integral – tells us that the expectation value of the magnetisation is constant in the ground state

$$ \langle\phi(\mathbf{x})\rangle = \begin{cases} 0 & T > T_c \\ \pm m_0 & T < T_c \end{cases} \qquad (2.48) $$

This makes us think of the ground state as a calm fluid, like the Cambridge mill pond when the tourists are out of town. This is misleading. The ground state is not a single field configuration but, as always in statistical mechanics, a sum over many possible configurations in the thermal ensemble. This is what the path integral does for us. The importance of these other configurations will determine whether the ground state is likely to contain only gentle ripples around the background (2.48), or fluctuations so wild that it makes little sense to talk about an underlying background at all.

These kinds of spatial fluctuations of the ground state are captured by correlation functions. The simplest is the two-point function $\langle\phi(\mathbf{x})\phi(\mathbf{y})\rangle$, computed using the probability distribution (1.26). This tells us how the magnetisation at point $\mathbf{x}$ is correlated with the magnetisation at $\mathbf{y}$. If, for example, there is an unusually large fluctuation at $\mathbf{y}$, what will the magnitude of the field most likely be at $\mathbf{x}$?

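Because $\langle\phi(\mathbf{x})\rangle$ takes different values above and below the transition, it is often more useful to compute the connected correlation function,

$$ \langle\phi(\mathbf{x})\phi(\mathbf{y})\rangle_c = \langle\phi(\mathbf{x})\phi(\mathbf{y})\rangle - \langle\phi\rangle^2 \qquad (2.49) $$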

If you’re statistically inclined, this is sometimes called a cumulant of the random variable $\phi(\mathbf{x})$.

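The path integral provides a particularly nice way to compute connected correlation functions of this kind. We consider the system in the presence of a magnetic field $B$, but now allow $B(\mathbf{x})$ to also vary in space. We take the free energy to be

$$ F[\phi(\mathbf{x})] = \int d^dx\left[\frac{1}{2}\gamma(\nabla\phi)^2 + \frac{1}{2}\mu^2\phi^2 - B(\mathbf{x})\phi(\mathbf{x})\right] \qquad (2.50) $$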

We can now think of the partition function as a functional of $B(\mathbf{x})$,

$$ Z[B] = \int\mathcal{D}\phi\; e^{-\beta F[\phi]} $$

For what it’s worth, $\log Z[B]$ is related to the Legendre transform of $F[\phi]$.

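Now that $Z$ depends on the function $B(\mathbf{x})$ it is a much richer and more complicated object. Indeed, it encodes all the information about the fluctuations of the theory. Consider, for example, the functional derivative of $\log Z$,

$$ \frac{1}{\beta}\frac{\delta\log Z}{\delta B(\mathbf{x})} = \frac{1}{\beta Z}\int\mathcal{D}\phi\;\beta\,\phi(\mathbf{x})\,e^{-\beta F[\phi]} = \langle\phi(\mathbf{x})\rangle_B $$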

Here I’ve put a subscript $B$ on $\langle\,\cdot\,\rangle_B$ to remind us that this is the expectation value computed in the presence of the magnetic field $B(\mathbf{x})$. If our real interest is in what happens as we approach the critical point, we can simply set $B = 0$ once the derivatives have been taken.

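Similarly, we can take two derivatives of $\log Z$. Now when the second derivative hits, it can either act on the exponent $e^{-\beta F}$, or on the factor of $1/Z$ in front. The upshot is that we get

$$ \frac{1}{\beta^2}\frac{\delta^2\log Z}{\delta B(\mathbf{x})\,\delta B(\mathbf{y})} = \frac{1}{\beta^2 Z}\frac{\delta^2 Z}{\delta B(\mathbf{x})\,\delta B(\mathbf{y})} - \frac{1}{\beta^2 Z^2}\frac{\delta Z}{\delta B(\mathbf{x})}\frac{\delta Z}{\delta B(\mathbf{y})} $$

or

$$ \frac{1}{\beta^2}\frac{\delta^2\log Z}{\delta B(\mathbf{x})\,\delta B(\mathbf{y})} = \langle\phi(\mathbf{x})\phi(\mathbf{y})\rangle_B - \langle\phi(\mathbf{x})\rangle_B\langle\phi(\mathbf{y})\rangle_B $$

which is precisely the connected correlation function (2.49). In what follows, we’ll mostly work above the critical temperature so that $\langle\phi\rangle_{B=0} = 0$. In this case, we set $B = 0$ to find

$$ \frac{1}{\beta^2}\frac{\delta^2\log Z}{\delta B(\mathbf{x})\,\delta B(\mathbf{y})}\bigg|_{B=0} = \langle\phi(\mathbf{x})\phi(\mathbf{y})\rangle \qquad (2.51) $$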
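The mechanism behind (2.51) can be seen in the simplest possible toy model: a single real variable $\phi$ with $F = \frac{1}{2}\mu^2\phi^2 - B\phi$, standing in for one site or one mode (an assumption made purely for illustration). The sketch below computes $\log Z(B)$ by direct numerical integration, takes the second derivative in $B$ by finite differences, and compares $\frac{1}{\beta^2}\partial_B^2\log Z$ at $B = 0$ with the exact variance $1/\beta\mu^2$ of this Gaussian.

```python
import math

def log_z(B, beta, mu2, half_width=40.0, steps=40_000):
    """log of the integral of exp(-beta (mu2 phi^2 / 2 - B phi)) d phi, by the midpoint rule."""
    h = 2 * half_width / steps
    total = 0.0
    for i in range(steps):
        phi = -half_width + (i + 0.5) * h
        total += math.exp(-beta * (0.5 * mu2 * phi * phi - B * phi))
    return math.log(total * h)

beta, mu2, dB = 2.0, 0.8, 1e-3           # illustrative values
second = (log_z(dB, beta, mu2) - 2 * log_z(0.0, beta, mu2) + log_z(-dB, beta, mu2)) / dB**2
print(second / beta**2, 1 / (beta * mu2))   # connected <phi^2> of a single Gaussian variable
```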

All that’s left is for us to compute the path integral $Z[B]$.

2.2.1 The Gaussian Path Integral

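As in our calculation of the thermodynamic free energy, we work in Fourier space. The free energy is now a generalisation of (2.42),

$$ F[\phi_{\mathbf{k}}] = \int\frac{d^dk}{(2\pi)^d}\left[\frac{1}{2}\left(\gamma k^2+\mu^2\right)|\phi_{\mathbf{k}}|^2 - B_{-\mathbf{k}}\,\phi_{\mathbf{k}}\right] $$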

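where $B_{\mathbf{k}}$ are the Fourier modes of $B(\mathbf{x})$. To proceed, we complete the square, and define the shifted magnetisation

$$ \hat\phi_{\mathbf{k}} = \phi_{\mathbf{k}} - \frac{B_{\mathbf{k}}}{\gamma k^2+\mu^2} $$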

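We can then write the free energy as

$$ F[\hat\phi_{\mathbf{k}}] = \int\frac{d^dk}{(2\pi)^d}\left[\frac{1}{2}\left(\gamma k^2+\mu^2\right)|\hat\phi_{\mathbf{k}}|^2 - \frac{1}{2}\frac{B_{\mathbf{k}}B_{-\mathbf{k}}}{\gamma k^2+\mu^2}\right] $$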

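Our path integral is

$$ Z[B] = \prod_{\mathbf{k}}\int d\hat\phi_{\mathbf{k}}\,d\hat\phi^\star_{\mathbf{k}}\; e^{-\beta F[\hat\phi_{\mathbf{k}}]} $$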

where we’ve shifted the integration variable from $\phi_{\mathbf{k}}$ to $\hat\phi_{\mathbf{k}}$; there is no Jacobian penalty for doing this. We’ve also dropped the normalisation constant $\mathcal{N}$ that we included in our previous measure (2.43) on the grounds that it clutters equations and does nothing useful.

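The path integral now gives

$$ Z[B] = \prod_{\mathbf{k}}\sqrt{\frac{2\pi TV}{\gamma k^2+\mu^2}}\;\exp\left(\frac{\beta}{2}\int\frac{d^dk}{(2\pi)^d}\,\frac{B_{\mathbf{k}}B_{-\mathbf{k}}}{\gamma k^2+\mu^2}\right) $$

The first term is just the contribution we saw before. It does not depend on the magnetic field and won’t contribute to the correlation function. (Specifically, it will drop out when we differentiate $\log Z$.) The interesting piece is the dependence on the Fourier modes $B_{\mathbf{k}}$. To get back to real space $B(\mathbf{x})$, we simply need to do an inverse Fourier transform. We have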

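$$ Z[B] = Z[B=0]\;\exp\left(\frac{\beta}{2}\int d^dx\,d^dy\;B(\mathbf{x})\,G(\mathbf{x}-\mathbf{y})\,B(\mathbf{y})\right) \qquad (2.52) $$

where

$$ G(\mathbf{x}) = \int\frac{d^dk}{(2\pi)^d}\;\frac{e^{-i\mathbf{k}\cdot\mathbf{x}}}{\gamma k^2+\mu^2} \qquad (2.53) $$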

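We’re getting there. Differentiating the partition function as in (2.51), we learn that the connected two-point function is

$$ \langle\phi(\mathbf{x})\phi(\mathbf{y})\rangle = \frac{1}{\beta}\,G(\mathbf{x}-\mathbf{y}) \qquad (2.54) $$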

We just need to do the integral (2.53).

Computing the Fourier Integral

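To start, note that the integral is rotationally invariant, and so $G(\mathbf{x}) = G(r)$ with $r = |\mathbf{x}|$. We write the integral as

$$ G(r) = \frac{1}{\gamma}\int\frac{d^dk}{(2\pi)^d}\;\frac{e^{-i\mathbf{k}\cdot\mathbf{x}}}{k^2+1/\xi^2} $$

where we’ve introduced a length scale

$$ \xi^2 = \frac{\gamma}{\mu^2} \qquad (2.55) $$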

This is called the correlation length and it will prove to be important as we move forwards. We’ll discuss it more in Section 2.2.3.

To proceed, we use a trick. We can write

$$ \frac{1}{k^2+1/\xi^2} = \int_0^\infty dt\; e^{-t(k^2+1/\xi^2)} $$

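Using this, we have

$$ \begin{aligned} G(r) &= \frac{1}{\gamma}\int\frac{d^dk}{(2\pi)^d}\int_0^\infty dt\; e^{-i\mathbf{k}\cdot\mathbf{x} - t(k^2+1/\xi^2)} \\ &= \frac{1}{\gamma}\int\frac{d^dk}{(2\pi)^d}\int_0^\infty dt\; e^{-t(\mathbf{k}+i\mathbf{x}/2t)^2 - r^2/4t - t/\xi^2} \\ &= \frac{1}{\gamma(4\pi)^{d/2}}\int_0^\infty dt\; t^{-d/2}\,e^{-r^2/4t - t/\xi^2} \end{aligned} \qquad (2.56) $$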

where, in going to the last line, we’ve simply done the Gaussian integrals over $\mathbf{k}$. At this point there are a number of different routes. We could invoke some special functionology and note that we can massage the integral into the form of a Bessel function

$$ K_\nu(z) = \frac{1}{2}\int_0^\infty dt\; t^{\nu-1}\,e^{-z(t+1/t)/2} $$

whose properties you can find in some dog-eared mathematical methods textbook. However, our interest is only in the behaviour of $G(r)$ in various limits, and for this purpose it will suffice to perform the integral (2.56) using a saddle point. We ignore overall constants, and write the integral as

$$ G(r) \sim \int_0^\infty dt\; e^{-S(t)}, \qquad S(t) = \frac{r^2}{4t} + \frac{t}{\xi^2} + \frac{d}{2}\log t $$

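The saddle point sits at $S'(t_\star) = 0$. We then approximate the integral as

$$ G(r) \sim \int dt\; e^{-S(t_\star) - \frac{1}{2}S''(t_\star)(t-t_\star)^2} = \sqrt{\frac{2\pi}{S''(t_\star)}}\;e^{-S(t_\star)} $$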

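For us the saddle lies at

$$ S'(t_\star) = 0 \quad\Rightarrow\quad t_\star = \frac{\xi^2}{2}\left(-\frac{d}{2} + \sqrt{\frac{d^2}{4} + \frac{r^2}{\xi^2}}\right) $$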

There are two different limits that we are interested in: $r \gg \xi$ and $r \ll \xi$. We’ll deal with them in turn:

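$r \gg \xi$: In this regime, we have $t_\star \approx r\xi/2$. And so $S(t_\star) \approx r/\xi + \frac{d}{2}\log(r\xi/2)$. One can also check that $S''(t_\star) \approx 4/r\xi^3$. The upshot is that the asymptotic form of the integral scales as

$$ G(r) \sim \frac{1}{\xi^{(d-3)/2}}\,\frac{e^{-r/\xi}}{r^{(d-1)/2}}, \qquad r \gg \xi $$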

At large distance scales, the correlation function falls off exponentially.

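$r \ll \xi$: In the other regime, the saddle point lies at $t_\star \approx r^2/2d$, giving $S(t_\star) \approx \frac{d}{2} + \frac{d}{2}\log(r^2/2d)$ and $S''(t_\star) \approx 2d^3/r^4$. Putting this together, we see that for $r \ll \xi$, the fall-off is only power law at short distances,

$$ G(r) \sim \frac{1}{r^{d-2}}, \qquad r \ll \xi $$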
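The saddle point formulae above are easy to check numerically. The sketch below (illustrative values $\xi = 1$, $d = 3$; the function names are hypothetical) evaluates $t_\star$, confirms that $S'(t_\star) = 0$, and compares $t_\star$ and $S''(t_\star)$ with the approximations used in the two regimes.

```python
import math

def t_star(r, xi, d):
    """Saddle point of S(t) = r^2/(4t) + t/xi^2 + (d/2) log t, from S'(t) = 0."""
    return 0.5 * xi**2 * (-d / 2 + math.sqrt(d * d / 4 + r * r / xi**2))

def S_prime(t, r, xi, d):
    return -r * r / (4 * t * t) + 1 / xi**2 + d / (2 * t)

def S_double_prime(t, r, d):
    return r * r / (2 * t**3) - d / (2 * t * t)

xi, d = 1.0, 3                                      # illustrative values
ts_far = t_star(100.0, xi, d)                       # r >> xi
ts_near = t_star(0.01, xi, d)                       # r << xi
print("r >> xi:", ts_far, "vs r xi / 2 =", 100.0 * xi / 2,
      "| S'' =", S_double_prime(ts_far, 100.0, d), "vs 4/(r xi^3) =", 4 / (100.0 * xi**3))
print("r << xi:", ts_near, "vs r^2 / 2d =", 0.01**2 / (2 * d),
      "| S'' =", S_double_prime(ts_near, 0.01, d), "vs 2 d^3 / r^4 =", 2 * d**3 / 0.01**4)
```

At $r = 100\,\xi$ the approximations are good to a few percent; the corrections are of relative order $\xi/r$.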

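We learn that the correlation function changes its form at the distance scale $r \sim \xi$, with the limiting form

$$ \langle\phi(\mathbf{x})\phi(0)\rangle \sim \begin{cases} \dfrac{1}{r^{d-2}} & r \ll \xi \\[2mm] \dfrac{e^{-r/\xi}}{r^{(d-1)/2}} & r \gg \xi \end{cases} \qquad (2.57) $$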

This is known as the Ornstein-Zernike correlation.
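As a check on (2.56) and on the limiting forms (2.57), it helps to look at $d = 3$, where the integral can be done in closed form, giving the standard result $G(r) = e^{-r/\xi}/(4\pi\gamma r)$ (quoted here rather than derived). This behaves as $1/r = 1/r^{d-2}$ for $r \ll \xi$ and as $e^{-r/\xi}/r = e^{-r/\xi}/r^{(d-1)/2}$ for $r \gg \xi$, in line with (2.57). The sketch below evaluates (2.56) numerically; the substitution $t = e^u$, the integration window, the sample values of $\gamma$ and $\xi$, and the function names are assumptions made for illustration.

```python
import math

def propagator_proper_time(r, d, gamma, xi, u_min=-30.0, u_max=30.0, steps=20_000):
    """Evaluate (2.56) numerically.

    The substitution t = e^u turns the integral of t^(-d/2) exp(-r^2/4t - t/xi^2) dt
    over (0, inf) into an integral of t^(1 - d/2) exp(...) du, which the midpoint rule handles well.
    """
    h = (u_max - u_min) / steps
    total = 0.0
    for i in range(steps):
        t = math.exp(u_min + (i + 0.5) * h)
        total += t ** (1 - d / 2) * math.exp(-r * r / (4 * t) - t / xi**2)
    return total * h / (gamma * (4 * math.pi) ** (d / 2))

def propagator_exact_3d(r, gamma, xi):
    """Closed form in d = 3: exp(-r/xi) / (4 pi gamma r)."""
    return math.exp(-r / xi) / (4 * math.pi * gamma * r)

gamma, xi = 1.5, 2.0                     # illustrative values
for r in (0.05, 0.5, 5.0, 20.0):
    numeric = propagator_proper_time(r, 3, gamma, xi)
    exact = propagator_exact_3d(r, gamma, xi)
    print(f"r = {r:5.2f}: numeric {numeric:.6e}, exact {exact:.6e}")
```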

2.2.2 The Correlation Function is a Green’s Function

The result (2.57) is important and we’ll delve a little deeper into it shortly. But first, it will prove useful to redo the calculation above in real space, rather than Fourier space, to highlight some of the machinery hiding behind our path integral.

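To set some foundations, we start with a multi-dimensional integral over $n$ variables. Suppose that $\mathbf{x}$ is an $n$-dimensional vector. The simple Gaussian integral now involves an invertible matrix $G^{-1}$,

$$ \int d^nx\; e^{-\frac{1}{2}\mathbf{x}\cdot G^{-1}\mathbf{x}} = \det{}^{1/2}(2\pi G) $$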

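This result follows straightforwardly from the single-variable Gaussian integral (2.44), by using a basis that diagonalises $G$. Similarly, if we introduce an $n$-dimensional vector $\mathbf{B}$, we can complete the square to find

$$ \int d^nx\; e^{-\frac{1}{2}\mathbf{x}\cdot G^{-1}\mathbf{x} + \mathbf{B}\cdot\mathbf{x}} = \det{}^{1/2}(2\pi G)\; e^{\frac{1}{2}\mathbf{B}\cdot G\mathbf{B}} \qquad (2.58) $$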
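Formula (2.58) can be verified by brute force for $n = 2$. The sketch below picks an arbitrary symmetric positive-definite $G^{-1}$ and source $\mathbf{B}$ (illustrative values, not from the text), evaluates the left-hand side on a midpoint grid, and compares it with $\det^{1/2}(2\pi G)\,e^{\frac{1}{2}\mathbf{B}\cdot G\mathbf{B}}$, using $\det(2\pi G) = (2\pi)^2/\det G^{-1}$ for a $2\times 2$ matrix.

```python
import math

# Illustrative (assumed) 2x2 example: a symmetric positive-definite G^{-1} and a source B.
G_inv = [[2.0, 0.6],
         [0.6, 1.0]]
B = [0.3, -0.5]

det_G_inv = G_inv[0][0] * G_inv[1][1] - G_inv[0][1] * G_inv[1][0]
G = [[ G_inv[1][1] / det_G_inv, -G_inv[0][1] / det_G_inv],
     [-G_inv[1][0] / det_G_inv,  G_inv[0][0] / det_G_inv]]

def lhs(half_width=12.0, steps=600):
    """Midpoint-rule estimate of the left-hand side of (2.58) for n = 2."""
    h = 2 * half_width / steps
    grid = [-half_width + (i + 0.5) * h for i in range(steps)]
    total = 0.0
    for x in grid:
        for y in grid:
            quad = G_inv[0][0] * x * x + 2 * G_inv[0][1] * x * y + G_inv[1][1] * y * y
            total += math.exp(-0.5 * quad + B[0] * x + B[1] * y)
    return total * h * h

def rhs():
    """det^{1/2}(2 pi G) exp(B.G.B / 2); for n = 2, det(2 pi G) = (2 pi)^2 / det(G^{-1})."""
    bgb = sum(B[a] * G[a][b] * B[b] for a in range(2) for b in range(2))
    return math.sqrt((2 * math.pi) ** 2 / det_G_inv) * math.exp(0.5 * bgb)

print(lhs(), rhs())
```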

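Now let’s jump to the infinite dimensional, path integral version of this. Throughout this section, we’ve been working with a quadratic free energy

$$ F[\phi] = \int d^dx\left[\frac{1}{2}\gamma(\nabla\phi)^2 + \frac{1}{2}\mu^2\phi^2 - B(\mathbf{x})\phi(\mathbf{x})\right] \qquad (2.59) $$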

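We can massage this into the form of the exponent in (2.58) by writing

$$ F[\phi] = \frac{1}{2}\int d^dx\,d^dy\;\phi(\mathbf{x})\,G^{-1}(\mathbf{x},\mathbf{y})\,\phi(\mathbf{y}) - \int d^dx\;B(\mathbf{x})\phi(\mathbf{x}) $$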

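where we’ve introduced the “infinite dimensional matrix”, more commonly known as an operator

$$ G^{-1}(\mathbf{x},\mathbf{y}) = \delta^d(\mathbf{x}-\mathbf{y})\left(-\gamma\nabla_y^2 + \mu^2\right) \qquad (2.60) $$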

Note that this is the operator that appears in the saddle point evaluation of the free energy, as we saw earlier in (1.30).

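Given the operator $G^{-1}$, what is the inverse operator $G(\mathbf{x},\mathbf{y})$? We have another name for the inverse of an operator: it is called a Green’s function. In the present case, $G(\mathbf{x},\mathbf{y})$ obeys the equation

$$ \left(-\gamma\nabla_x^2 + \mu^2\right)G(\mathbf{x},\mathbf{y}) = \delta^d(\mathbf{x}-\mathbf{y}) $$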

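By translational symmetry, we have $G(\mathbf{x},\mathbf{y}) = G(\mathbf{x}-\mathbf{y})$. You can simply check that the Green’s function is indeed given in Fourier space by our previous result (2.53)

$$ G(\mathbf{x}) = \int\frac{d^dk}{(2\pi)^d}\;\frac{e^{-i\mathbf{k}\cdot\mathbf{x}}}{\gamma k^2+\mu^2} $$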

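This route led us to the same result we had previously. Except we learn something new: the correlation function is the same thing as the Green’s function, $\langle\phi(\mathbf{x})\phi(\mathbf{y})\rangle = \frac{1}{\beta}G(\mathbf{x},\mathbf{y})$, and hence solves,

$$ \left(-\gamma\nabla^2 + \mu^2\right)\langle\phi(\mathbf{x})\phi(0)\rangle = \frac{1}{\beta}\,\delta^d(\mathbf{x}) $$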

This is telling us that if we perturb the system at the origin then, for a free energy of the quadratic form (2.59), the correlator responds by solving the original saddle point equation.
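The Green’s function statement can be checked directly in $d = 1$, where (2.53) evaluates to $G(x) = e^{-|x|/\xi}/(2\mu\sqrt{\gamma})$ with $\xi = \sqrt{\gamma}/\mu$. The sketch below is an illustration under stated assumptions: a finite-difference Laplacian with spacing $a$, a lattice delta function $\delta_{i,0}/a$, and $G = 0$ just beyond the ends of a large box. It solves the discretised version of $(-\gamma\nabla^2 + \mu^2)G = \delta(x)$ with the Thomas algorithm and compares the result with this closed form.

```python
import math

def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm. Row i reads lower[i] x[i-1] + diag[i] x[i] + upper[i] x[i+1] = rhs[i]."""
    n = len(diag)
    c = [0.0] * n          # modified upper coefficients
    e = [0.0] * n          # modified right-hand side
    c[0] = upper[0] / diag[0]
    e[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        e[i] = (rhs[i] - lower[i] * e[i - 1]) / denom
    x = [0.0] * n
    x[-1] = e[-1]
    for i in range(n - 2, -1, -1):
        x[i] = e[i] - c[i] * x[i + 1]
    return x

gamma, mu, a, half_width = 1.0, 1.0, 0.01, 10.0    # illustrative values
n = int(round(2 * half_width / a)) + 1
origin = n // 2                                     # grid site at x = 0
off = -gamma / a**2                                 # off-diagonal of -gamma d^2/dx^2
G = solve_tridiagonal([off] * n, [2 * gamma / a**2 + mu**2] * n, [off] * n,
                      [1.0 / a if i == origin else 0.0 for i in range(n)])

xi = math.sqrt(gamma) / mu
for x in (0.0, 1.0, 2.0, 4.0):
    exact = math.exp(-abs(x) / xi) / (2 * mu * math.sqrt(gamma))
    print(f"x = {x}: lattice {G[origin + round(x / a)]:.6f}, continuum {exact:.6f}")
```

The agreement is limited only by the $O(a^2)$ lattice artefacts and by the exponentially small effect of the walls of the box.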

There is one further avatar of the correlation function that is worth mentioning: it is related to the susceptibility. Recall that previously we defined the susceptibility in (1.19) as $\chi = \partial m/\partial B$. Now, we have a more refined version of susceptibility which knows about the spatial structure,

$$ \chi(\mathbf{x},\mathbf{y}) = \frac{\delta\langle\phi(\mathbf{x})\rangle}{\delta B(\mathbf{y})} $$

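But, from our discussion above, this is exactly the correlation function $\chi(\mathbf{x},\mathbf{y}) = \beta\langle\phi(\mathbf{x})\phi(\mathbf{y})\rangle_c$. We can recover our original, coarse grained susceptibility as

$$ \chi = \int d^dx\;\chi(\mathbf{x},0) = \beta\int d^dx\;\langle\phi(\mathbf{x})\phi(0)\rangle_c \qquad (2.61) $$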
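Combining (2.61) with (2.54) gives $\chi = \int d^dx\,G(\mathbf{x})$, which is just the Fourier transform (2.53) evaluated at $\mathbf{k} = 0$, namely $1/\mu^2$. The sketch below checks this in $d = 3$ by integrating the closed form $G(r) = e^{-r/\xi}/(4\pi\gamma r)$ (quoted rather than derived; the radial cut-off, step count, sample values and function name are assumptions). With $\mu^2 \sim |T - T_c|$ it recovers the mean field behaviour $\chi \sim 1/|T - T_c|$.

```python
import math

def susceptibility_3d(gamma, mu2, r_max_in_xi=60.0, steps=50_000):
    """chi = integral over R^3 of G(r) = exp(-r/xi) / (4 pi gamma r), by a radial midpoint rule."""
    xi = math.sqrt(gamma / mu2)
    h = r_max_in_xi * xi / steps
    total = 0.0
    for i in range(steps):
        r = (i + 0.5) * h
        total += 4 * math.pi * r * r * math.exp(-r / xi) / (4 * math.pi * gamma * r)
    return total * h

gamma = 1.5                              # illustrative value
for mu2 in (1.0, 0.1, 0.01):             # mu^2 ~ |T - T_c| shrinking towards the critical point
    print(mu2, susceptibility_3d(gamma, mu2), 1 / mu2)
```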

The two-point correlation function will play an increasingly important role in later calculations. For this reason it is given its own name: it is called the propagator. Propagators of this kind also arose in the lectures on Quantum Field Theory. In that case, the propagator was defined for a theory in Minkowski space, which led to an ambiguity (of integration contour) and a choice of different propagators: advanced, retarded or Feynman. In the context of Statistical Field Theory, we are working in Euclidean space and there is no such ambiguity.

2.2.3 The Correlation Length

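Let’s now look a little more closely at the expression (2.57) for the correlation function which, in two different regimes, scales as

$$ \langle\phi(\mathbf{x})\phi(0)\rangle \sim \begin{cases} \dfrac{1}{r^{d-2}} & r \ll \xi \\[2mm] \dfrac{e^{-r/\xi}}{r^{(d-1)/2}} & r \gg \xi \end{cases} \qquad (2.62) $$

where $r = |\mathbf{x}|$. The exponent contains a length scale, $\xi$, called the correlation length, defined in terms of the parameters in the free energy as $\xi^2 = \gamma/\mu^2$.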

We see from (2.62) that all correlations die off quickly at distances $r \gg \xi$. In contrast, for $r \ll \xi$ there is only a much slower, power-law fall-off. In this sense, $\xi$ provides a characteristic length scale for the fluctuations. In a given thermal ensemble, there will be patches where the magnetisation is slightly higher, or slightly lower than the average $\langle\phi\rangle$. The size of these patches will be no larger than $\xi$.

Recall that, close to the critical point, $\mu^2 \sim |T - T_c|$. This means that as we approach $T = T_c$, the correlation length diverges as

$$ \xi \sim \frac{1}{|T-T_c|^{1/2}} \qquad (2.63) $$

This is telling us that the system will undergo fluctuations of arbitrarily large size. This is the essence of a second order phase transition, and as we move forward we will try to better understand these fluctuations.

Numerical Simulations of the Ising Model

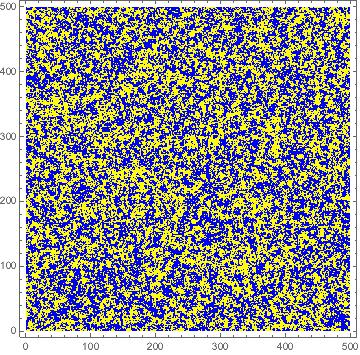

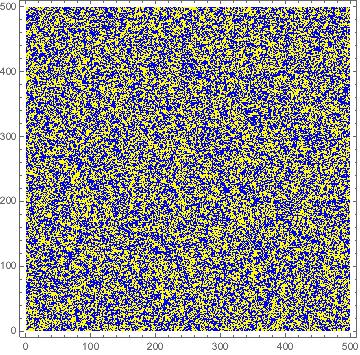

It’s useful to get a sense for what these fluctuations look like. We start in the disordered phase with $T > T_c$. In the figures you can see two typical configurations that contribute to the partition function of the Ising model. (These images were generated by the Metropolis algorithm using a Mathematica programme created by Daniel Schroeder. It’s well worth playing with to get a feel for what’s going on. Ising simulations, in various formats, can be found on his webpage http://physics.weber.edu/thermal/computer.html.) The up spins are shown in yellow, the down spins in blue.


The two configurations are taken at two different temperatures, both above $T_c$. In both pictures, the spins look random. And yet, you can see by eye that there is something different between the two pictures; on the right, when the temperature is higher, the spins are more finely intertwined, with a yellow spin likely to have a blue dot sitting right next to it. Meanwhile, on the left, the randomness is coarser.

What you’re seeing here is the correlation length at work. In each picture, $\xi$ sets the typical length scale of fluctuations. In the right-hand picture, where the temperature is higher, the correlation length is smaller.
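For readers who want to generate configurations like these themselves, here is a minimal Metropolis sketch for the two-dimensional Ising model in Python. It is not the Mathematica programme mentioned above. The energy $H = -J\sum_{\langle ij\rangle}s_is_j$ on a periodic square lattice, the units $J = k_B = 1$ (in which the exact critical temperature is $T_c = 2/\log(1+\sqrt{2}) \approx 2.269$), the lattice size, the number of sweeps, the random seed and the function names are all choices made for illustration.

```python
import math
import random

def metropolis_sweep(spins, T, rng, J=1.0):
    """One Metropolis sweep (L*L single-spin updates) of the 2d Ising model.

    Assumes H = -J sum_<ij> s_i s_j on an L x L periodic lattice, with k_B = 1.
    """
    L = len(spins)
    for _ in range(L * L):
        i, j = rng.randrange(L), rng.randrange(L)
        neighbours = (spins[(i + 1) % L][j] + spins[(i - 1) % L][j]
                      + spins[i][(j + 1) % L] + spins[i][(j - 1) % L])
        dE = 2.0 * J * spins[i][j] * neighbours      # energy change if spin (i, j) flips
        if dE <= 0 or rng.random() < math.exp(-dE / T):
            spins[i][j] = -spins[i][j]

def magnetisation(spins):
    """Average spin per site."""
    L = len(spins)
    return sum(sum(row) for row in spins) / (L * L)

T_C = 2 / math.log(1 + math.sqrt(2))                 # exact 2d Ising T_c for J = k_B = 1
rng = random.Random(1)
L = 32
hot = [[rng.choice((-1, 1)) for _ in range(L)] for _ in range(L)]   # disordered start
cold = [[1] * L for _ in range(L)]                                   # ordered start
for _ in range(100):
    metropolis_sweep(hot, 2.2 * T_C, rng)
    metropolis_sweep(cold, 0.05 * T_C, rng)
print(f"T = 2.2 T_c: m = {magnetisation(hot):+.3f};  T = 0.05 T_c: m = {magnetisation(cold):+.3f}")
```

Printing a configuration row by row (say `#` for up spins and `.` for down spins) gives a crude version of the pictures. Temperatures closer to $T_c$ need many more sweeps before the configurations become typical.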

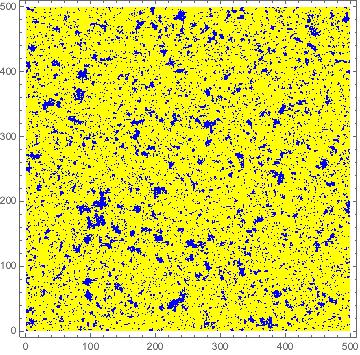

There is a similar story in the ordered phase, with $T < T_c$. Once again, we show two configurations below. Now the system must choose one of the two ground states; here the choice is that the yellow, up spins are dominant. The two configurations are again taken at two different temperatures, now both below $T_c$. We see that the sizes of the fluctuations around the ordered phase become smaller the further we sit from the critical point.


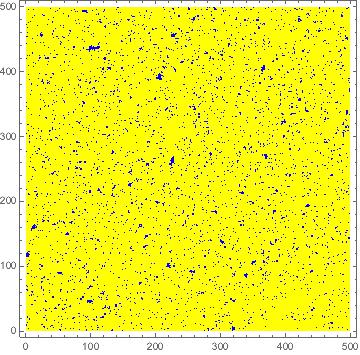

Finally, we can ask what happens when we sit at the critical point $T = T_c$. A typical configuration is shown in Figure 20. Although it may not be obvious, there is now no characteristic length scale in the picture. Instead, fluctuations occur on all length scales, big and small. (The best demonstration that I’ve seen of this scale invariance at the critical point is a YouTube video by Douglas Ashton.) This is the meaning of the diverging correlation length $\xi \to \infty$.

Critical Opalescence

There is a nice experimental realisation of these large fluctuations, which can be seen in liquid-gas transitions or mixing transitions between two different fluids. (Both of these lie in the same universality class as the Ising model.) As we approach the second order phase transition, transparent fluids become cloudy, an effect known as critical opalescence. (You can see a number of videos showing critical opalescence on YouTube.) What’s happening is that the size of the density fluctuations is becoming larger and larger, until they begin to scatter visible light.

More Critical Exponents

We saw in previous sections that we get a number of power-laws at critical points, each of which is related to a critical exponent. The results above give us two further exponents to add to our list. First, we have a correlation length which diverges at the critical point with power (2.63)

$$ \xi \sim \frac{1}{|T-T_c|^{\nu}} \qquad\text{with}\qquad \nu = \frac{1}{2} $$

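Similarly, we know that the correlation function itself is a power law at the critical point, with exponent

$$ \langle\phi(\mathbf{x})\phi(0)\rangle \sim \frac{1}{r^{d-2+\eta}} \qquad\text{with}\qquad \eta = 0 $$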

Each of these can be thought of as a mean field prediction, in the sense that we are treating the path integral only in quadratic order, which neglects important effects near the critical point. Given our previous discussion, it may not come as a surprise to learn that these critical exponents are correct when $d \geq 4$. However, they are not correct in lower dimensions. Instead one finds

|   | MF | $d = 2$ | $d = 3$ |
|---|---|---|---|
| $\eta$ | 0 | 1/4 | 0.0363 |
| $\nu$ | 1/2 | 1 | 0.6300 |

This gives us another challenge, one we will rise to in Section 3.

2.2.4 The Upper Critical Dimension

We’re finally in a position to understand why the mean field results hold in high dimensions, but are incorrect in low dimensions. Recall our story so far: when $T < T_c$, the saddle point suggests that

$$ \langle\phi\rangle^2 = m_0^2 = -\frac{\alpha_2}{\alpha_4} $$

Meanwhile, there are fluctuations around this mean field value described, at long distances, by the correlation function (2.62). In order to trust our calculations, these fluctuations should be smaller than the background around which they’re fluctuating. In other words, we require $\langle\phi(\mathbf{x})\phi(0)\rangle_c \ll m_0^2$.

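It’s straightforward to get an estimate for this. We know that the fluctuations decay after a distance $r \gg \xi$. We can gain a measure of their importance if we integrate over a ball of radius $\xi$. We’re then interested in the ratio

$$ R = \frac{\int_0^\xi d^dx\;\langle\phi(\mathbf{x})\phi(0)\rangle_c}{\int_0^\xi d^dx\;m_0^2} \sim \frac{1}{m_0^2\,\xi^d}\int_0^\xi dr\;\frac{r^{d-1}}{r^{d-2}} \sim \frac{\xi^{2-d}}{m_0^2} $$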

In order to trust mean field theory, we require that this ratio is much less than one. This is the Ginzburg criterion. We can anticipate trouble as we approach a critical point, for here $\xi$ diverges and $m_0$ vanishes. According to mean field theory, these two quantities scale as

$$ m_0 \sim |T-T_c|^{1/2} \qquad\text{and}\qquad \xi \sim \frac{1}{|T-T_c|^{1/2}} $$

results which can be found, respectively, in (1.31) and (2.63). This means that the ratio scales as

$$ R \sim \frac{|T-T_c|^{(d-2)/2}}{|T-T_c|} = |T-T_c|^{(d-4)/2} $$

We learn that, as we approach the critical point, mean field – which, in this context, means computing small fluctuations around the saddle point – appears trustworthy only if

$$ d > 4 $$

This is the upper critical dimension for the Ising model. Actually, at the critical dimension $d = 4$ there is a logarithmic divergence in $R$ and so we have to treat this case separately; we’ll be more careful about this in Section 3.
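The power counting behind the Ginzburg criterion is simple enough to check by machine. The sketch below drops all $O(1)$ constants, uses the hypothetical variable `t` for $|T - T_c|$, evaluates the estimate for $R$ at two temperatures, and extracts the exponent, which should be $(d-4)/2$. It is negative for $d < 4$, so $R$ grows as $T \to T_c$. At $d = 4$ this crude estimate returns exponent zero; the logarithm mentioned above is invisible at this level, which is one reason that case needs more care.

```python
import math

def ginzburg_ratio(t, d):
    """Mean-field estimate of R, with t = |T - T_c| and all O(1) constants dropped.

    Uses xi ~ t^(-1/2), m0^2 ~ t, and a correlator ~ 1/r^(d-2) inside the ball:
    R ~ [int_0^xi dr r^(d-1) / r^(d-2)] / (m0^2 xi^d) = (xi^2 / 2) / (m0^2 xi^d).
    """
    xi = t ** -0.5
    m0_squared = t
    return (xi**2 / 2) / (m0_squared * xi**d)

def exponent(d, t1=1e-3, t2=1e-4):
    """Fitted power p in R ~ t^p."""
    return math.log(ginzburg_ratio(t1, d) / ginzburg_ratio(t2, d)) / math.log(t1 / t2)

for d in (2, 3, 4, 5):
    print(d, exponent(d), (d - 4) / 2)
```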

For dimensions $d < 4$, mean field theory predicts its own demise. We’ll see how to make progress in Section 3.

2.3 The Analogy with Quantum Field Theory

There is a very close analogy between the kinds of field theories we’re looking at here, and those that arise in quantum field theory. This analogy is seen most clearly in Feynman’s path integral approach to quantum mechanics. (You will see this in next term’s Advanced Quantum Field Theory course.) Correlation functions in both statistical and quantum field theories are captured by partition functions

Statistical Field Theory: $$ Z = \int\mathcal{D}\phi\; e^{-\beta F[\phi]} $$

Quantum Field Theory: $$ Z = \int\mathcal{D}\phi\; e^{iS[\phi]/\hbar} $$

You don’t need to be a visionary to see the similarities. But there are also some differences: the statistical path integral describes a system in $d$ spatial dimensions, while the quantum path integral describes a system in $d$ spacetime dimensions, or $d-1$ spatial dimensions.

The factor of $i$ in the exponent of the quantum path integral can be traced to the fact that it describes a system evolving in time, and means that it has more subtle convergence properties than its statistical counterpart. In practice, to compute anything in the quantum partition function, one tends to rotate the integration contour and work with Euclidean time,

$$ \tau = it \qquad (2.64) $$

This is known as a Wick rotation. After this, the quantum and statistical partition functions are mathematically the same kind of objects

$$ Z = \int\mathcal{D}\phi\; e^{-\beta F[\phi]} \qquad\longleftrightarrow\qquad Z = \int\mathcal{D}\phi\; e^{-S_E[\phi]/\hbar} $$

where $S_E$ is the Euclidean action, and is analogous to the free energy in statistical mechanics. If the original action was Lorentz invariant, then the Euclidean action will be rotationally invariant. Suddenly, the $d = 4$ dimensional field theories, which seemed so unrealistic in the statistical mechanics context, take on a new significance.

By this stage, the only difference between the two theories is the words we drape around them. In statistical mechanics, the path integral captures the thermal fluctuations of the local order parameter, with a strength controlled by the temperature $T$; in quantum field theory the path integral captures the quantum fluctuations of the field $\phi$, with a strength controlled by $\hbar$. This means that many of the techniques we will develop in this course can be translated directly to quantum field theory and high energy physics. Moreover, as we will see in the next section, much of the terminology has its roots in the applications to quantum field theory.

Note that the second order phase transition occurs in our theory when the coefficient of the quadratic term vanishes: $\mu^2 = 0$. From the perspective of quantum field theory, a second order phase transition describes massless particles.

Given that the similarities are so striking, one could ask if there are any differences between statistical and quantum field theories. The answer is yes: there are some quantum field theories which, upon Wick rotation, do not have real Euclidean actions. Perhaps the simplest example is Maxwell (or Yang-Mills) theory, with the addition of a “theta term”, proportional to $\epsilon^{\mu\nu\rho\sigma}F_{\mu\nu}F_{\rho\sigma}$. This gives rise to subtle effects in the quantum theory. However, because it contains a single time derivative, it becomes imaginary in the variable $\tau$ (2.64) and, correspondingly, there is no interpretation of $e^{-S_E}$ as probabilities in a thermal ensemble.

A Different Perspective on the Lower Critical Dimension

A statistical field theory in $d = 1$ spatial dimensions is related to quantum field theory in $d = 0$ spatial dimensions. But we have a name for this: we call it quantum mechanics.

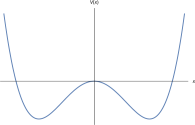

Viewed in this way, the lower critical dimension becomes something very familiar. Consider the quartic potential shown in the figure. Classical considerations suggest that there are two ground states, one for each of the minima. But we know that this is not the way things work in quantum mechanics. Instead, there is a unique ground state in which the wavefunction has support in both minima, but with the expectation value $\langle x\rangle = 0$. Indeed, the domain wall calculation that we described in Section 1.3.3 is the same calculation that one uses to describe quantum tunnelling using the path integral.

Dressed in fancy language, we could say that quantum tunnelling means that the $\mathbb{Z}_2$ symmetry cannot be spontaneously broken in quantum mechanics. This translates to the statement that there are no phase transitions in $d = 1$ statistical field theories.
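The claim that tunnelling restores the symmetry can be illustrated numerically. The sketch below assumes units $\hbar = m = 1$ and a hypothetical potential $V(x) = \lambda(x^2-1)^2$ with $\lambda = 4$. The barrier height is then 4, comfortably above the harmonic zero-point energy $\sqrt{8\lambda}/2 \approx 2.8$ of each well. The sketch discretises the Hamiltonian with finite differences and finds the ground state by inverse iteration, starting from a wavefunction localised entirely in the right-hand well. The iteration nonetheless converges to a state with equal weight in both wells and $\langle x\rangle = 0$. Grid size, shift, iteration count and function names are illustrative choices.

```python
import math

def double_well_ground_state(lam=4.0, x_max=3.0, n=200, shift=-1.0, iterations=1000):
    """Ground state of H = -(1/2) d^2/dx^2 + lam (x^2 - 1)^2 with hbar = m = 1.

    Finite differences on n interior points with psi = 0 at x = +-x_max, then
    inverse iteration with (H - shift)^(-1); shift < 0 lies below the spectrum.
    """
    h = 2 * x_max / (n + 1)
    xs = [-x_max + (i + 1) * h for i in range(n)]
    off = -0.5 / h**2                                    # off-diagonal of H
    diag = [1.0 / h**2 + lam * (x * x - 1) ** 2 - shift for x in xs]

    # LU factorisation of the tridiagonal matrix H - shift, done once.
    ell = [0.0] * n
    piv = [0.0] * n
    piv[0] = diag[0]
    for i in range(1, n):
        ell[i] = off / piv[i - 1]
        piv[i] = diag[i] - ell[i] * off

    def solve(b):
        y = list(b)
        for i in range(1, n):
            y[i] -= ell[i] * y[i - 1]
        x = [0.0] * n
        x[-1] = y[-1] / piv[-1]
        for i in range(n - 2, -1, -1):
            x[i] = (y[i] - off * x[i + 1]) / piv[i]
        return x

    # Start from a "classical" ground state sitting in the right-hand well only.
    psi = [math.exp(-8 * (x - 1) ** 2) if x > 0 else 0.0 for x in xs]
    for _ in range(iterations):
        psi = solve(psi)
        norm = math.sqrt(h * sum(p * p for p in psi))
        psi = [p / norm for p in psi]
    return xs, psi, h

xs, psi, h = double_well_ground_state()
mean_x = h * sum(x * p * p for x, p in zip(xs, psi))
right_weight = h * sum(p * p for x, p in zip(xs, psi) if x > 0)
print(f"<x> = {mean_x:.2e}, probability in right-hand well = {right_weight:.4f}")
```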